Our BigQuery connector gives you easy access to run SQL queries across all of the crawl data in your account. We’ve structured the data to be flexible, and have listed some easy SQL queries on this page to allow you to quickly find the data you need.

Data format

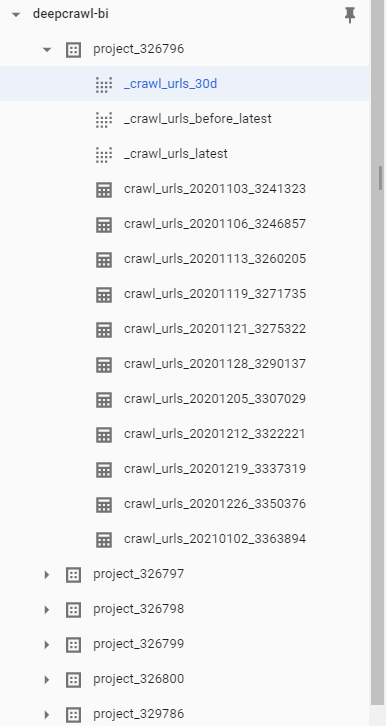

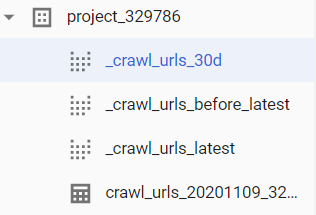

Each Lumar project is a BigQuery dataset which uses its ID as its name (such as project_123456), each crawl is a table inside this dataset – each table is named crawl_urls_[DATE]-[crawl ID] such as ‘crawl_urls_20201225_654321‘.

The crawl data itself contains all of the data that we know about each URL in the crawl.

Permissions

To ensure that only the right people have access to the right data, we share access with a specific group of users on a per-project basis. This way, you can share a given project with a single client, and no other clients will be able to access that data.

Finding your Project ID

You can find your project ID in the URL of a crawl report – the format of our URLs is https://app.lumar.io/accounts/:account_id/projects/:project_id/crawls/:crawl_id, so in the URL https://app.lumar.io/accounts/123/projects/456/crawls/789, the project ID is 456

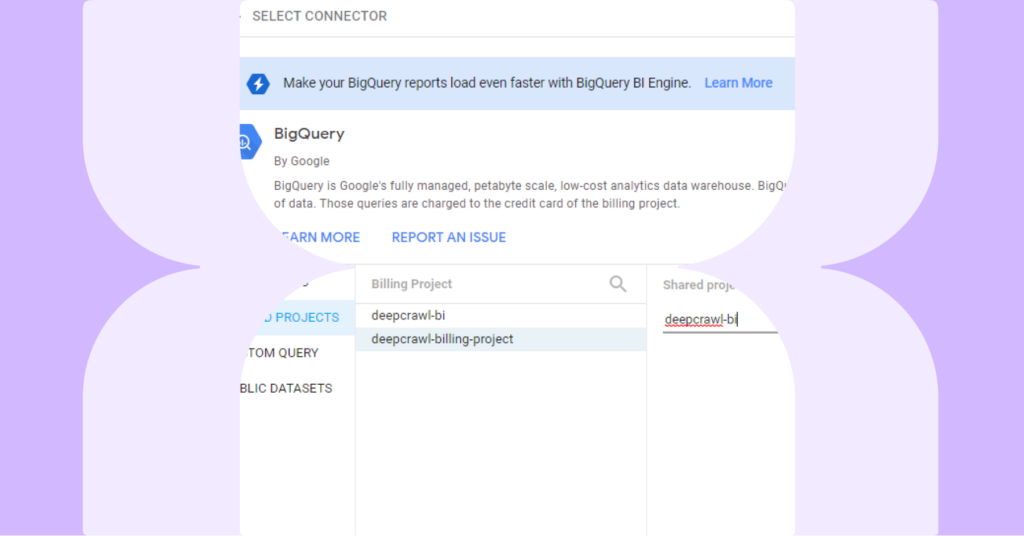

Data Projects & Billing Projects

Unless you request otherwise, your data will be saved into the BigQuery project “deepcrawl-bi” and shared with you. While you will have the permission to access this data, this project does not come with any querying capabilities. The querying capability will come from a separate Billing Project that will be provided to you (this is specific to your organization, so you should not share it). Therefore, when using data in the deepcrawl-bi project with any tool, you must specify the separate Billing project.

Lumar SQL Cheatsheet

We’ve structured our data in a way that will allow you to get all of the insights that you need. Rather than manually selecting a given crawl, you can use the below queries to logically choose the table you want to work with (such as “latest crawl”, “all crawls from the last 30 days”). The connector comes with some default data views that internally use these queries, so in some cases, it is easier to use those.

Default views

The following views are available within all datasets in BigQuery:

- Latest Crawl: _crawl_urls_latest

- Previous Crawl: _crawl_urls_before_latest

- All data in last 30 days: _crawl_urls_30d

All of the below examples assume that your project ID is 1234

Get all data from the latest crawl

Use the view _crawl_urls_latest or the SQL query:

SELECT * FROM 'deepcrawl-bi.project_1234.crawl_urls_*' WHERE _TABLE_SUFFIX = (SELECT MAX(_TABLE_SUFFIX) from 'deepcrawl-bi.project_1234.crawl_urls_*')

Get all 404 pages from the latest crawl in a given project

SELECT url, page_title, http_status_code, search_console_total_clicks FROM 'deepcrawl-bi.project_1234.crawl_urls_*' WHERE http_status_code = 404 AND _TABLE_SUFFIX = (SELECT MAX(_TABLE_SUFFIX) from 'deepcrawl-bi.project_1234.crawl_urls_*')

Get all data from the previous crawl

Use the view _crawl_urls_before_latest or the SQL query:

SELECT * FROM 'deepcrawl-bi.project_1234.crawl_urls_*' WHERE _TABLE_SUFFIX = (SELECT DISTINCT _TABLE_SUFFIX FROM 'deepcrawl-bi.project_1234.crawl_urls_*' ORDER BY _TABLE_SUFFIX DESC LIMIT 1 OFFSET 1)

Get all data from all crawls in the past 30 days

Use the view _crawl_urls_30d or the SQL query:

SELECT * FROM 'deepcrawl-bi.project_1234.crawl_urls_*' WHERE _TABLE_SUFFIX > CAST(DATETIME_ADD(CURRENT_DATETIME(), INTERVAL -30 DAY) AS STRING)

Get the number of 404 pages for each crawl in the past 30 days

SELECT CAST(crawl_id AS STRING) as crawl_id, CAST(MAX(crawl_datetime) AS TIMESTAMP) as datetime, COUNT(DISTINCT url) as count_urls, FROM 'deepcrawl-bi.project_1234.crawl_urls_*' WHERE http_status_code = 404 AND CAST(crawl_datetime AS TIMESTAMP) > CAST(DATETIME_ADD(CURRENT_DATETIME(), INTERVAL -30 DAY) AS TIMESTAMP) GROUP BY _TABLE_SUFFIX, crawl_id ORDER BY _TABLE_SUFFIX DESC

Find URLs where the page title has changed since the last crawl

SELECT latest.url, latest.page_title 'current_page_title', previous.page_title 'previous_page_title' FROM 'deepcrawl-bi.project_1234._crawl_urls_latest' latest, 'deepcrawl-bi.project_1234._crawl_urls_before_latest' previous WHERE latest.url_digest=previous.url_digest AND latest.page_title != previous.page_title

Find the number of URLs crawled per day for the past 30 days

SELECT COUNT(rownum), SUBSTR(crawl_datetime,0,10) FROM 'deepcrawl-bi.project_1234._crawl_urls_30d'

GROUP BY SUBSTR(crawl_datetime,0,10)

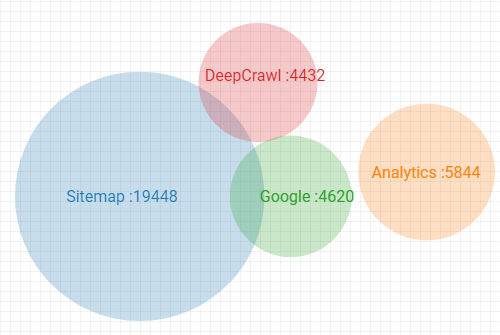

Create a Venn Diagram of where URLs were found (in Data Studio)

SELECT REGEXP_REPLACE(CONCAT(IF(found_in_web_crawl=TRUE,"Lumar, ",""),IF(found_in_sitemap=TRUE,"Sitemap, ",""),IF(found_in_google_analytics=TRUE,"Analytics, ",""),

IF(found_in_log_summary=TRUE,"Google, ",""),IF(found_in_google_search_console=TRUE,"Search Console, ",""),IF(found_in_list=TRUE,"List","")), ',

This will structure data in the format required by the Venn Diagram community visualization.

, "") 'dimension', COUNT(rownum) 'metric' FROM 'deepcrawl-bi.project_1234._crawl_urls_latest' WHERE SAFE_ADD(SAFE_ADD(SAFE_ADD(SAFE_ADD(if(found_in_web_crawl=TRUE,1,0),if(found_in_sitemap=TRUE,1,0)),if(found_in_log_summary=TRUE,1,0)),if(found_in_google_search_console=TRUE,1,0)),if(found_in_list=TRUE,1,0)) > 1 GROUP BY dimension UNION ALL SELECT "Lumar" 'dimension', COUNT(rownum) 'metric' FROM 'deepcrawl-bi.project_1234._crawl_urls_latest' WHERE found_in_web_crawl=true GROUP BY dimension UNION ALL SELECT "Sitemap" 'dimension', COUNT(rownum) 'metric' FROM 'deepcrawl-bi.project_1234._crawl_urls_latest' WHERE found_in_sitemap=true GROUP BY dimension UNION ALL SELECT "Google" 'dimension', COUNT(rownum) 'metric' FROM 'deepcrawl-bi.project_1234._crawl_urls_latest' WHERE found_in_log_summary=true GROUP BY dimension UNION ALL SELECT "Search Console" 'dimension', COUNT(rownum) 'metric' FROM 'deepcrawl-bi.project_1234._crawl_urls_latest' WHERE found_in_google_search_console=true GROUP BY dimension UNION ALL SELECT "List" 'dimension', COUNT(rownum) 'metric' FROM 'deepcrawl-bi.project_1234._crawl_urls_latest' WHERE found_in_list=true GROUP BY dimension UNION ALL SELECT "Analytics" 'dimension', COUNT(rownum) 'metric' FROM 'deepcrawl-bi.project_1234._crawl_urls_latest' WHERE found_in_google_analytics=true GROUP BY dimension

This will structure data in the format required by the Venn Diagram community visualization.