Algorithms of Oppression

[CW: this report includes examples and slides of racism, antisemitism, and racial slurs]

On November 18, 2020, industry experts from across the tech landscape came together to discuss research, trends, and predictions at our DeepCrawl Live summit.

Dr. Safiya Noble’s presentation Algorithms of Oppression was perhaps the most thought-provoking, bringing together around a decade’s worth of research looking at the disparate impact that search engines have on vulnerable populations.

In a fascinating and illuminating 45 minutes, Noble cited clear examples of bias and stereotyping found across Google’s front-page SERPs, as well as in Google Image Search and Google Maps.

She also put forward a number of opportunities that we, as consumers and search specialists, can act on to help make the future of these technologies more egalitarian than it would be if left unchecked.

Exploding the myth about algorithms

Noble’s journey was inspired by a simple Google search back when she was a grad student. She describes it in her book on the topic:

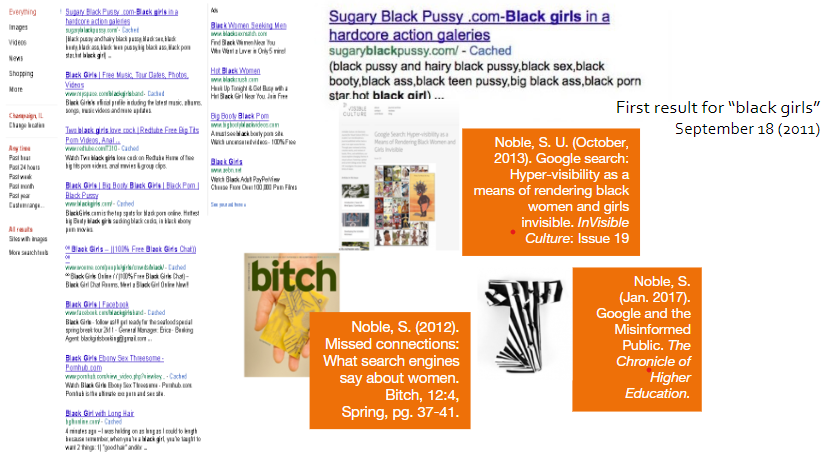

‘Run a Google search for “black girls”—what will you find? “Big Booty” and other sexually explicit terms are likely to come up as top search terms. But, if you type in “white girls,” the results are radically different.

The suggested porn sites and un-moderated discussions about “why black women are so sassy” or “why black women are so angry” presents a disturbing portrait of black womanhood in modern society.’

The bias in this example is obvious, but when Noble raised it and other examples with technologists, she was initially met with pushback and deflection.

‘People would always say to me there’s no way search engines can be racist,’ Noble says. ‘Because search is just computer coding, and computer coding is just math, and math can’t be racist. It was just a very reductive logic and argument.’

Noble countered by talking about what it means to be human. On an objective level we are just a collection of cells, yet it would be quite wrong to suggest that we have no subjective thought steering us.

Noble highlights that AI and algorithms are actually part of an ecosystem made up of tech and humans. Human decisions become decision trees for machine learning.

In the last 10 years, public perception of algorithms has shifted massively, away from the idea of neutral computation and toward something more human and more flawed. This change has also undoubtedly influenced the growth of trust and safety teams in tech companies across the industry.

Yes, human biases are built into the algorithms we interact with in our increasingly digital lives. And human subjectivity increasingly determines how many of these biases get corrected.

When Google gets it wrong

Over the years Noble has documented a number of ways in which racial bias, stereotyping, and dehumanization have surfaced in Google’s algorithms. Often, though, it is the search giant’s responses to these issues that are more problematic than the initial flaws in the SERPs.

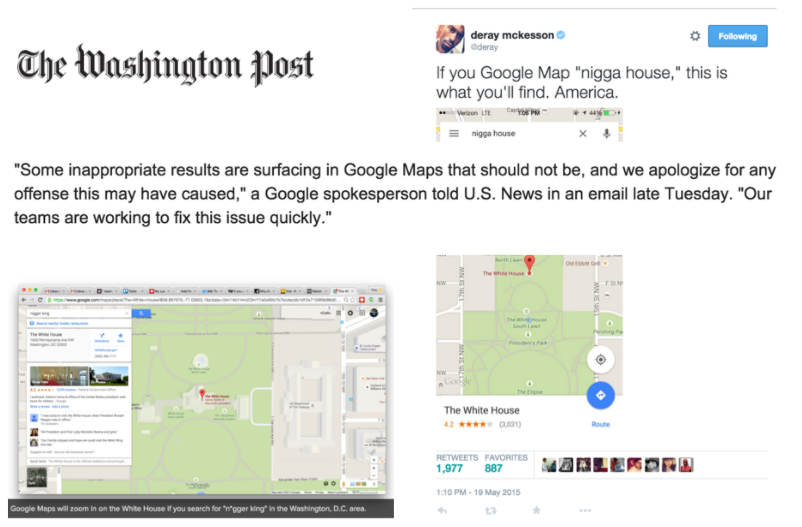

When Twitter user DeRay Mckesson criticized the fact that searching for “nigga house” on Google Maps led to the White House (then occupied by the Obama administration and family), Google offered the following:

‘Some inappropriate results are surfacing in Google Maps that should not be, and we apologize for any offense this may have caused…Our teams are working to fix this issue quickly.’

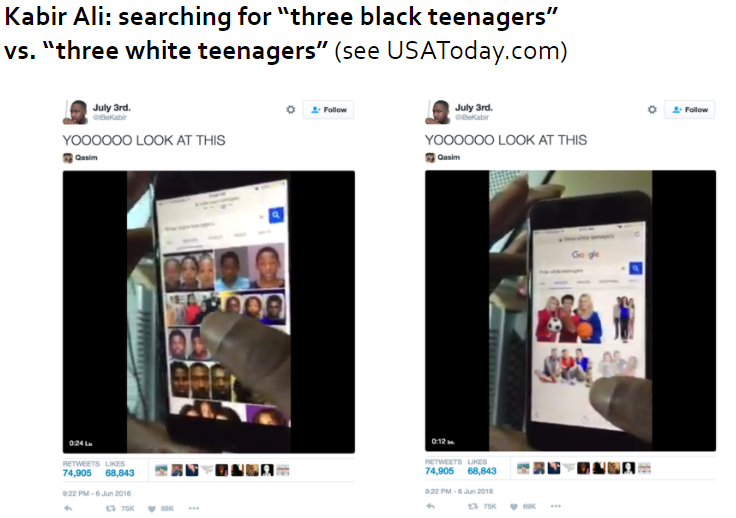

In another instance, Kabir Ali (also posting on Twitter) highlighted that typing “three black teenagers” into Google’s image search resulted in a barrage of mugshots and criminalized males.

The results looked vastly different when he compared them with those for “three white teenagers,” which showed a number of carefully curated stock images of white kids posing and holding footballs.

Again, Google’s response was lacking. The company manually tweaked these particular SERPs to show an example of a white teenager appearing in court, and inserted some tokenistic pictures to break up the run of black mugshots that had appeared the day before.

The near non-apology in the first instance and the hurried papering over of the cracks in the second were rightly criticized, but Noble noticed something else that tied these responses together:

‘When these things happen they are just framed as a glitch, as a momentary aberration in a system which otherwise works perfectly,’ she says. ‘This is the kind of logic that is deeply embedded in Silicon Valley companies who come to believe their products are just math.’

‘This was so interesting to me,’ Noble adds. ‘I just found over and over and over again, that when it came to results that had to do with people of color, oftentimes women, certainly girls, and children of color…these kinds of results were actually more normative than you would imagine. Part of that is because people don’t look to see what the disparate impact is on vulnerable people.’

Here Noble highlights two additional problems that compound these racial biases. The first is that Google has a monopoly on the space (86.86% market share as of July 2020); the second is that it is a commercial space, in which those who can pay the most are often the ones able to optimize the content.

This was certainly true of the “black girls” keyphrase and the accompanying SERPs Noble was presented with back in 2011. At that time, 80% of the search results were pornography or hypersexualized content, leaving little room for other content about actual black girls.

‘This opens up the question,’ Noble says. ‘How would people who are vulnerable, people who are refugees, people who are children, people who are poor, people who are disabled, people who are not even on the internet – how would those people ever be able to control their representation if we use only commercial logics?’

The politics of classification

Noble highlights that the logics of classification have existed for thousands of years. Many of the issues Google faces in how people are classified resemble those that librarians and editors faced before the proliferation of the internet.

The thing to remember in this digital era of classification is that the way we classify people has always been used to oppress.

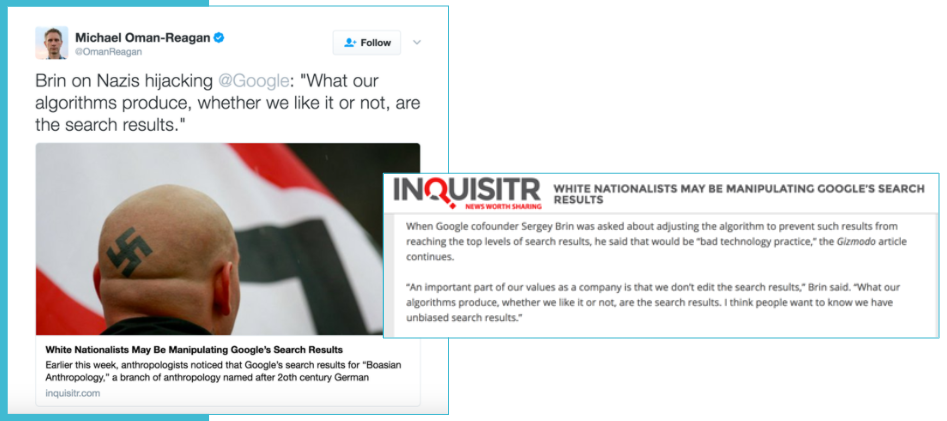

As recently as 2017, news and entertainment site Inquisitr found that white nationalists appeared to have successfully optimized antisemitic content around “Boasian Anthropology,” as well as Holocaust denial content related to the question: “Did the Holocaust happen?”

Again, Google responded. And again the response was disappointing, with co-founder Sergey Brin stating: ‘To interfere in that process would be bad technology practice.’

Noble points out that in the European context Brin’s opinion runs counter to the law. Online platforms must moderate antisemitic hate speech in countries such as France and Germany. But the situation is quite different in the US.

‘There’s a real desire in the United States to stand behind Section 230,’ Noble says. ‘It’s really about tech companies not wanting to manage content. Imagine if you had to really be responsible – we’re talking about a trillion-dollar investment. There’s been a lot of pushback on tech companies being categorized and regulated as media companies.’

As Noble highlights, the sheer number of search engine optimizers, advertisers, and web developers publishing content makes moderation a massive and complex task for the platforms. But this also reinforces the need to improve the algorithms from the outset.

Pushing for change

Algorithms are an ecosystem consisting of humans and tech – and they contain human biases.

Google has a monopoly in search, and it is a commercial space. Noble’s research reminds us that we do not always come to the search giant as consumers looking to shop; it is also a tool for gathering information, learning about history, doing research, and understanding the world.

With this in mind, how people are classified in the SERPs is of massive importance. Search is a space for representation, and time and time again we can see the algorithm skew in favor of white men and misrepresent people of color, particularly women and girls.

When these issues become public, the narrative pushed is that they are exceptional cases, but Noble’s research into keyphrases relating to vulnerable communities has found them more often to be the norm. So where do we go from here?

Noble closes her presentation with five key opportunities for those working in the search sector to take on board as a way to help combat some of this social inequality online:

- The tech (and the data) aren’t neutral – all are imbued with racism, sexism, and class power.

- We must intervene and stop predicting oppression into the future through machine learning.

- We need new narratives from the most disaffected, the artists and the makers to lead.

- More critical responses to AI development: AI will become a human, civil, and sovereign rights issue in the 21st century.

- We need more technology accountability boards: industry, civil society/NGO/activists, government.

We in the industry are in a fantastic position to help shape the future of the technologies we work with so regularly. We have the research and the data, and we have an incredible understanding of the quirks and nuances of both Google’s business practices and its algorithm.

There is no better time than now to take Noble’s research on board and to work towards a better digital space for all.