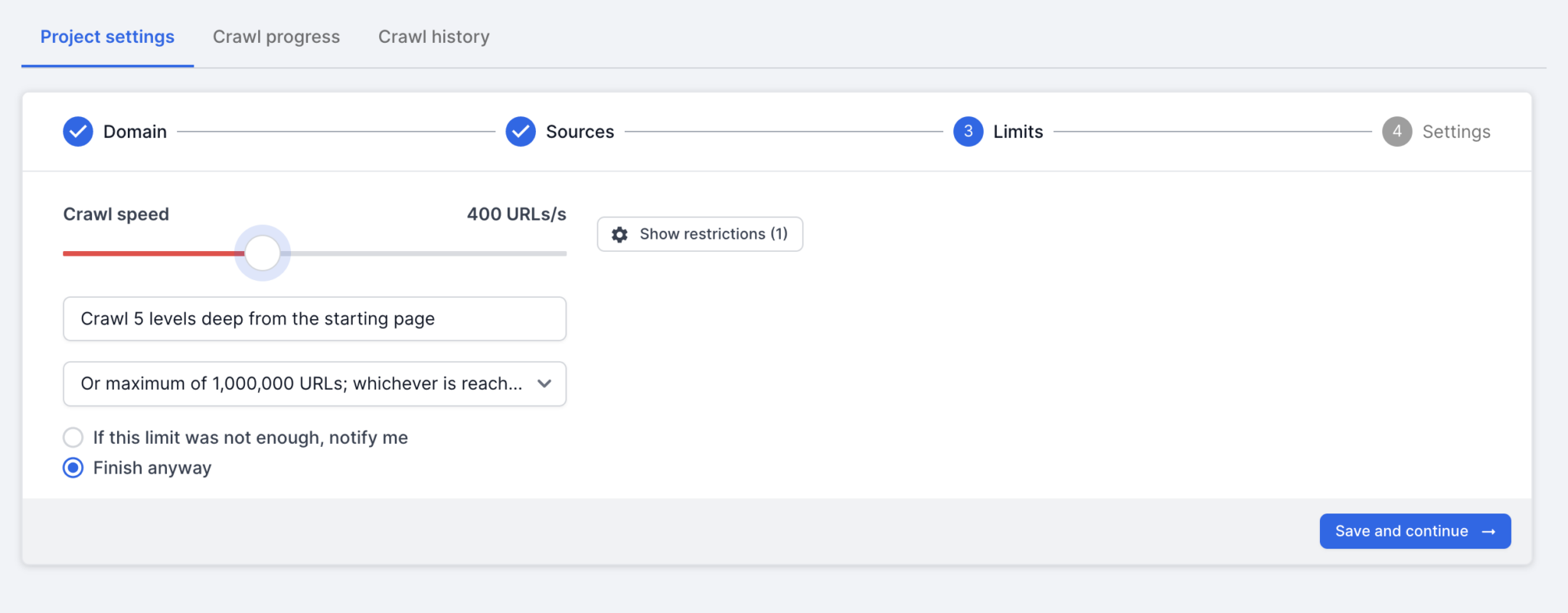

This summer, Deepcrawl (now Lumar) is rolling out the latest version of our market-leading website crawler — now featuring record-breaking crawl speeds of up to 450 URLs per second for non-rendered content and 350 URLs per second for JavaScript-rendered content.

For enterprise websites housing hundreds of thousands (or millions) of pages, the introduction of ultra-fast crawling in Deepcrawl is a major, time-saving boon for SEO, digital marketing, and developer teams.
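To put those speeds in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the crawler sustains its peak rate for the whole crawl, which is an idealization: the quoted figures are "up to" speeds, and real crawls are also bounded by how quickly the target site responds. The function names are illustrative only and are not part of any Deepcrawl tooling.

```python
def crawl_time_seconds(url_count: int, urls_per_second: float) -> float:
    """Idealized crawl duration, assuming a constant crawl rate."""
    if urls_per_second <= 0:
        raise ValueError("urls_per_second must be positive")
    return url_count / urls_per_second


def format_duration(seconds: float) -> str:
    """Render a duration in seconds as hours, minutes, and seconds."""
    minutes, secs = divmod(round(seconds), 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours}h {minutes:02d}m {secs:02d}s"


if __name__ == "__main__":
    site_size = 1_000_000  # hypothetical one-million-page site
    for label, rate in [("non-rendered", 450), ("JavaScript-rendered", 350)]:
        duration = format_duration(crawl_time_seconds(site_size, rate))
        print(f"{label}: {duration}")
```

Under these assumptions, a one-million-page site would take roughly 37 minutes to crawl without rendering and roughly 48 minutes with JavaScript rendering.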

Beyond ultra-fast crawl speed, this latest crawler release also builds in greater flexibility in data collection. The information that can be captured in website crawls has been expanded beyond traditional technical SEO metrics, paving the way for new built-in metrics and reporting options within the Deepcrawl platform. Currently, users have access to 250 built-in reports as well as the ability to design their own custom extractions to collect data on any website element they specify. This added flexibility allows new use cases to be developed on top of Deepcrawl’s crawler, and a recently released GraphQL API makes implementation easier for developers.

Why is sophisticated crawling software important?

Crawling software is a foundational element of SEO and website intelligence platforms. It traverses a website’s pages to collate the raw data required for sophisticated website analytics and serves as the crucial first step in understanding and optimizing a website’s technical health and search performance.

The newest developments in Deepcrawl’s website crawler

Our latest crawler version is the fastest, most scalable, most flexible website crawling system on the market. To sum things up, the enhanced crawler offers:

- Ultra-fast crawl speed: The enhanced crawler leverages best-in-class serverless architecture design to drastically enhance website crawling speeds, saving valuable time for digital teams. In field testing on large-scale enterprise websites, non-rendered pages recorded crawl speeds in excess of 450 URLs/second, while JavaScript-rendered pages reached speeds of 350 URLs/second.

- Alignment with the latest Google Search behaviors: We’re constantly working behind the scenes to ensure our crawl data accurately reflects the latest search engine developments. The new crawler developments at Deepcrawl provide even greater alignment with Google’s search engine behaviors. This ensures users get an accurate, up-to-date picture of how search engines view their websites.

- Improved rendering and parsing: The new crawler takes full advantage of Google’s industry-leading rendering and parsing engine, so that rendered pages are viewed in line with search engines’ own capabilities and users can accurately identify rendering issues that may impact their search performance.

- Even more flexible crawling: Our latest developments will allow us to increase the breadth and flexibility of data that Deepcrawl can extract from webpages. Other crawlers hard-code specific queries into their website crawling software, restricting users to a fixed set of data points they can extract from their websites. Deepcrawl’s latest crawler version allows for more flexibility, without limits on what types of questions the crawler can ‘answer’ via custom extractions or API usage. (Yes, you should also watch this space in the near future for news on additional built-in reporting options!)
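To make the idea of a custom extraction more concrete, here is a minimal, generic sketch in Python of pulling a user-specified element out of a page's HTML with a regular expression. It is not Deepcrawl's implementation or API: the `extract_all` helper, the sample HTML, and the price pattern are all hypothetical and exist only to illustrate the concept.

```python
from __future__ import annotations

import re


def extract_all(html: str, pattern: str) -> list[str]:
    """Return the first capture group of every match of `pattern` in `html`."""
    matches = re.finditer(pattern, html, flags=re.IGNORECASE | re.DOTALL)
    return [m.group(1).strip() for m in matches]


# Hypothetical page content and extraction rule, for illustration only.
SAMPLE_HTML = """
<html><body>
  <h1>Blue Widget</h1>
  <span class="price"> $19.99 </span>
  <span class="price">$24.50</span>
</body></html>
"""
PRICE_PATTERN = r'<span class="price">(.*?)</span>'

if __name__ == "__main__":
    print(extract_all(SAMPLE_HTML, PRICE_PATTERN))
```

The point of the sketch is that the rule is supplied by the user rather than hard-coded in the crawler, so the same mechanism could just as easily collect headings, schema markup, or any other element of interest.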