Diagnosing and Solving Connection Errors

Sometimes a website will show a significant number of connection errors every time you run a crawl, even though the pages work when you check them manually.

These errors can have several causes, including temporary server issues, performance problems caused by the crawl itself, or a server that is deliberately blocking the crawler.

This guide covers cases where DeepCrawl reports connection errors, and will help you to avoid them.

Diagnosing the Issue

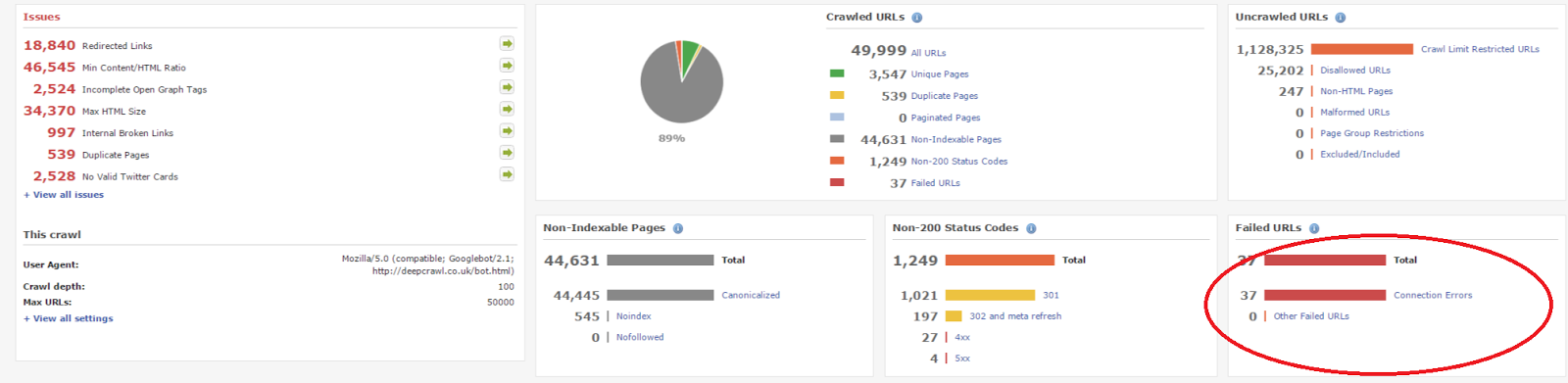

Firstly, you should test a random sample of pages returning connection errors, to establish whether the issue lies with the crawler or the website itself. You can do this within the “Overview” screen in your DeepCrawl report.

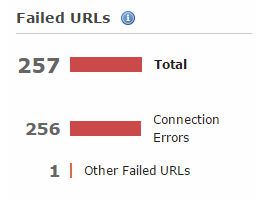

On the right-hand side of the Overview screen in your DeepCrawl report, you can see the number of connection errors.

If you view this report, you can see the full list of URLs with connection errors, and open them in your browser to see if they are still failing to respond.

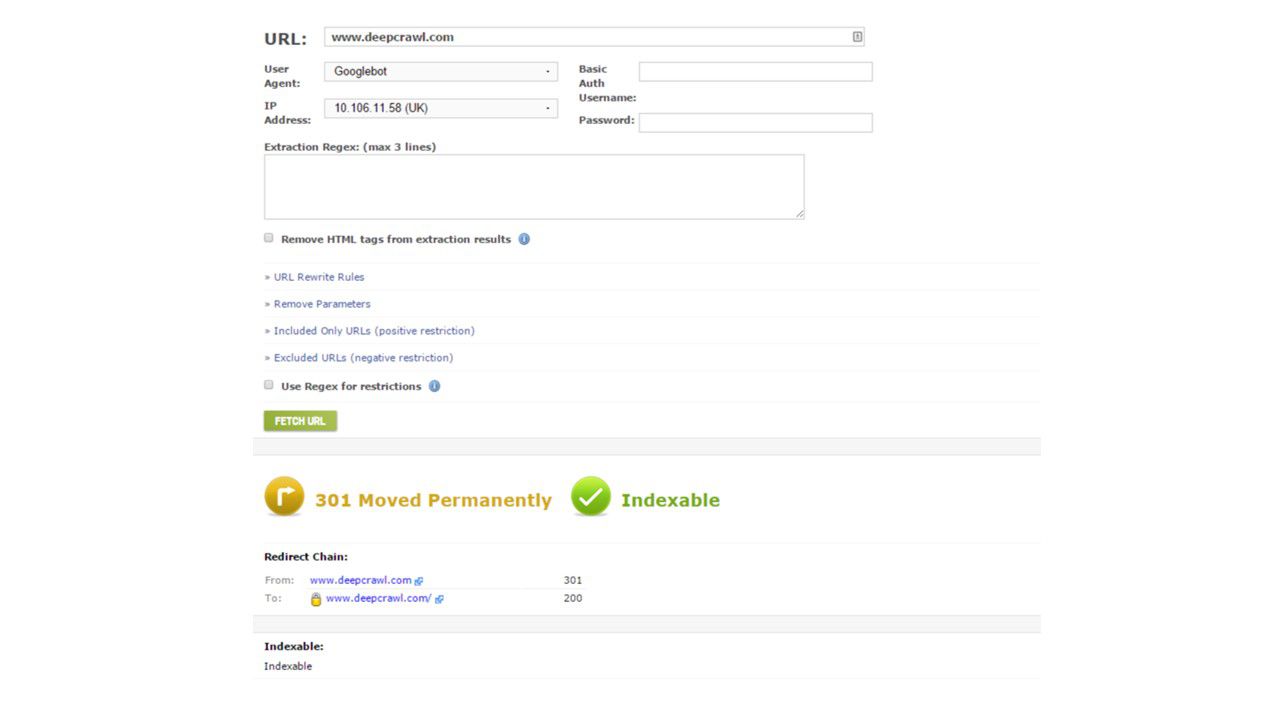

However, to determine a page’s status correctly, it is best not to rely on your browser alone. A request from a web browser comes from your personal IP address, rather than the one used by DeepCrawl or Googlebot, and it sends a different user agent. Browsers also execute JavaScript by default, which can make the result differ from what a crawler sees.

Instead, we recommend you use either Fetch as DeepCrawl, or a third-party tool like RexSwain or Websniffer to more accurately ascertain the page’s status. Below, you can see how Fetch as DeepCrawl displays a detailed analysis of a page’s status.

If you find that these pages aren’t loading, or are returning a message such as ‘The connection has timed out’, then the issue is with the website. In this case, it is best to contact the webmaster or site owner, and let them know that there is an issue.
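If you would rather spot-check a URL with a script, the idea can be sketched in Python as follows. This is a minimal sketch, not DeepCrawl's own method: the user-agent string and the function names are hypothetical, and the request still comes from your own IP address, so it only removes the user agent and JavaScript differences described above.

```python
import urllib.error
import urllib.request

# Hypothetical user-agent string, for illustration only.
# Substitute the user agent your crawl is actually configured to send.
CRAWLER_USER_AGENT = "Mozilla/5.0 (compatible; ExampleCrawler/1.0)"


def build_request(url, user_agent=CRAWLER_USER_AGENT):
    """Build a GET request that identifies itself with the given user agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def check_status(url, user_agent=CRAWLER_USER_AGENT, timeout=10.0):
    """Return the HTTP status code as a string, or a label for a failed connection.

    Redirects are followed, so the code returned is that of the final response.
    No JavaScript is executed; only the raw HTTP response is inspected.
    """
    request = build_request(url, user_agent)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return str(response.status)
    except urllib.error.HTTPError as error:
        # The server answered, but with an error status such as 403 or 503.
        return str(error.code)
    except OSError as error:
        # Covers DNS failures, refused connections, and timeouts.
        return "connection error: " + str(getattr(error, "reason", error))
```

Calling `check_status("https://www.example.com/")` returns a status code string when the server responds, and a label starting with `connection error` when it does not. Comparing the result for your browser's user agent with the result for the crawler's user agent can hint at whether the server treats the two differently.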

On the other hand, if you find that these URLs are working fine, then the issue might be with the way the crawl is being run. Bear in mind that temporary problems with the page itself can also produce errors like these.
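The decision described above can be summarised in a few lines of Python. This is only an illustrative sketch: the function name, the input format (a mapping from each sampled URL to whether it responded on recheck), and the wording of the three outcomes are assumptions, not part of DeepCrawl.

```python
def diagnose(sample_results):
    """Suggest where the problem lies from a manual recheck of sampled URLs.

    sample_results maps each URL to True if it responded on recheck,
    or False if it still failed to respond.
    """
    if not sample_results:
        raise ValueError("recheck at least one URL before diagnosing")

    still_failing = [url for url, ok in sample_results.items() if not ok]

    if len(still_failing) == len(sample_results):
        # Every sampled page still fails: the website itself is at fault.
        return "website issue: contact the webmaster or site owner"
    if not still_failing:
        # Every sampled page works now: look at how the crawl is run.
        return "crawl issue: review crawl rate and check for blocking"
    # Some pages fail and some work: possibly a temporary problem.
    return "mixed results: recheck later, the problem may be temporary"
```

For example, `diagnose({"https://www.example.com/a": True, "https://www.example.com/b": True})` points towards the crawl settings rather than the website.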

Solving the Issue

The rate of the crawl can sometimes cause errors by overloading the server. If you are seeing a large number of slow pages in every crawl, this also indicates that the problem stems from performance issues, rather than from the crawler being blocked.

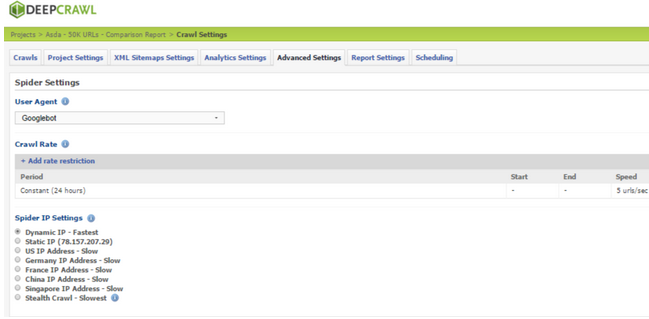

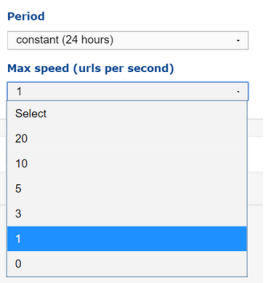

You can use the advanced settings to reduce the load that the crawl places on the site. This can sometimes solve connection errors, and is done through the “crawl rate” section.

We’d recommend reducing the speed to 1 page per second, as this rate shouldn’t cause any problems for the majority of sites.

If this does not rectify the issue, the next step should be to contact the site’s development team, and check that the crawler is not being blocked.
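To illustrate what a rate of 1 page per second means in practice, here is a minimal Python sketch of request pacing. It does not show how DeepCrawl implements its crawl rate setting; the `paced` helper and its parameters are hypothetical, and it could be used to throttle your own rechecks of failing URLs so that they do not add to the server load.

```python
import time


def paced(items, per_second=1.0, clock=time.monotonic, sleep=time.sleep):
    """Yield items no faster than `per_second` items each second.

    `clock` and `sleep` are injectable so the pacing can be tested
    without actually waiting.
    """
    interval = 1.0 / per_second
    next_allowed = clock()
    for item in items:
        wait = next_allowed - clock()
        if wait > 0:
            sleep(wait)
        # Schedule the next slot from the later of the planned slot and now,
        # so slow processing never causes a burst of catch-up requests.
        next_allowed = max(next_allowed, clock()) + interval
        yield item
```

Looping over `paced(urls, per_second=1.0)` hands out one URL per second at most. At that rate, 10,000 URLs take 10,000 seconds, or roughly 2 hours and 47 minutes, which is worth keeping in mind when lowering the crawl rate on a large site.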

You can learn more in our guide to diagnosing and solving blocked crawls.

The DeepCrawl Team