Recently, we did a series of mini interviews via Twitter, or as we like to call them “Twitter-views,” with SEO experts and influencers speaking at SMX West.

One of the questions we asked was, “What do you see as the biggest challenge in SEO?”

While the responses were varied, a large number of answers (35%) revolved around ever-changing “best practices” and algorithm updates.

When some of the brightest minds in the SEO world are challenged by the best ways to go about SEO, it’s easy to see how less savvy SEOs could get confused.

There are many blogs, websites, and webinars devoted to SEO, yet much of the information and many of the “best practices” out there are outdated and ineffective. With constant updates to Google’s algorithm and the evolution of how people search online, many of the old go-to SEO tricks simply do not work (and some of them never did).

While many of these old SEO best practices won’t harm your site’s ranking, implementing them is futile and consumes valuable time that could be better spent on optimizations that actually work.

Avoid These 19 Outdated SEO “Best Practices” and Focus on Tweaks That Will Actually Improve Your Site’s Performance

1. Meta Keywords Tag

Contrary to popular belief, the meta keywords tag plays no part in how search engines rank your page. Skip it: all it does is let competitors quickly discover your keyword strategy.
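If you’ve inherited a site and want to find leftover meta keywords tags, a quick audit script helps. Here’s a minimal Python sketch using only the standard library’s `html.parser`; the `find_meta_keywords` helper and the sample page are hypothetical, and in practice you’d feed it HTML from your own crawl.

```python
from html.parser import HTMLParser


class MetaKeywordsFinder(HTMLParser):
    """Collects the content of any <meta name="keywords"> tags in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.keywords: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names, but not values.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "keywords":
            self.keywords.append(attributes.get("content", ""))


def find_meta_keywords(html: str) -> list[str]:
    """Return the content of every meta keywords tag found in the HTML."""
    finder = MetaKeywordsFinder()
    finder.feed(html)
    finder.close()
    return finder.keywords


page = '<head><meta name="Keywords" content="seo, crawling"><title>Hi</title></head>'
print(find_meta_keywords(page))  # ['seo, crawling']
```

Self-closing tags such as `<meta ... />` are handled too, because `HTMLParser` routes them through `handle_starttag` by default.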

2. Image URLs in Google News Sitemaps

Google News sitemaps do not support image URLs. Images may be fine in regular XML sitemaps, but it’s best to leave them out of Google News sitemaps.

If you want a specific thumbnail image to display alongside a Google News article, Google recommends specifying it with og:image or schema.org markup instead.
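Here’s a minimal Python sketch of what that markup can look like, generated from a single image URL. The `thumbnail_markup` function, the example.com URL, and the choice of the schema.org `NewsArticle` type are assumptions for illustration; check Google’s current structured data guidelines for the exact properties it reads.

```python
import json
from html import escape


def thumbnail_markup(image_url: str, headline: str) -> str:
    """Build <head> markup that points to a preferred article thumbnail."""
    # Open Graph: one meta tag naming the image (attribute value HTML-escaped).
    og_tag = f'<meta property="og:image" content="{escape(image_url)}">'
    # schema.org: a NewsArticle object whose "image" property lists the URL.
    article = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "image": [image_url],
    }
    # Escape "</" so the JSON can't prematurely close the <script> element.
    payload = json.dumps(article).replace("</", "<\\/")
    json_ld = f'<script type="application/ld+json">{payload}</script>'
    return og_tag + "\n" + json_ld


print(thumbnail_markup("https://example.com/img/story.jpg", "Example headline"))
```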

3. Over-Linking

No. Just stop. While backlinks were very important in the past, these days savvy SEOs know that quality beats quantity, and only a select few links will do. Google’s Penguin algorithm update made links with authority and value important for ranking, while the number of links matters less. Stop hunting for pages to link to, and instead focus on key links that add value to your content.

4. Canonicalized URLs in Deep App Links or Hreflang

Warning! If your hreflang tags point to canonicalized URLs (URLs whose rel=canonical points to a different page), search engines may not process them correctly. Only canonical URLs should be placed within deep app links or hreflang tags.
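To catch this before search engines do, you can compare every hreflang target against the canonical URL that page declares. The Python sketch below assumes you’ve already crawled your site and collected two lookups (both hypothetical here): the hreflang URL for each language, and each URL’s rel=canonical target.

```python
def non_canonical_hreflang_targets(hreflang_links, canonical_of):
    """Return hreflang targets that are canonicalized to a different URL.

    hreflang_links: dict mapping language code -> URL in the hreflang tag.
    canonical_of:   dict mapping URL -> URL declared in its rel=canonical
                    (URLs missing from the dict are assumed self-canonical).
    """
    problems = {}
    for lang, url in hreflang_links.items():
        canonical = canonical_of.get(url, url)
        if canonical != url:
            # This hreflang should point at the canonical URL instead.
            problems[lang] = (url, canonical)
    return problems


hreflang = {
    "en": "https://example.com/en/",
    "de": "https://example.com/de/?ref=nav",
}
canonicals = {"https://example.com/de/?ref=nav": "https://example.com/de/"}
print(non_canonical_hreflang_targets(hreflang, canonicals))
# {'de': ('https://example.com/de/?ref=nav', 'https://example.com/de/')}
```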

5. Robots “Index” Directive & “Follow” Tag

Using an “index” or “follow” robots directive redundantly tells search engines to do what they would do anyway, since indexing pages and following links is their default behavior. Adding these directives just junks up your site’s code and wastes your time.
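If you’re cleaning these out across a large site, the check is simple string handling. Here’s a minimal Python sketch that works on the content value of a meta robots tag; the function name is hypothetical, and it also treats `all` as redundant, since Google documents it as equivalent to the default.

```python
# Directives that only restate the default behavior ("all" = "index, follow").
DEFAULT_DIRECTIVES = {"index", "follow", "all"}


def strip_default_directives(content: str) -> str:
    """Remove redundant directives from a meta robots content value.

    An empty result means the whole tag can be dropped.
    """
    directives = [d.strip() for d in content.split(",") if d.strip()]
    kept = [d for d in directives if d.lower() not in DEFAULT_DIRECTIVES]
    return ", ".join(kept)


print(repr(strip_default_directives("index, follow")))    # ''
print(repr(strip_default_directives("INDEX, nofollow")))  # 'nofollow'
print(repr(strip_default_directives("noindex, follow")))  # 'noindex'
```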

6. Not-So-Sneaky Keyword Placement

Oh, the glory days: the days when blog posts and pages were littered with keywords and key phrases repeated over and over and over.

Those days are gone, and good riddance! Google uses nifty latent semantic indexing these days, allowing its crawler to “understand” the context of words and phrases. If you’re still focusing on the exact words you’re trying to rank for, start thinking outside the keyword-stuffing box and grab a thesaurus.

7. Crawl Robots Delay

Google doesn’t support the crawl-delay directive in robots.txt, but you might still want to use it for Bing. If you want to limit Googlebot’s crawl rate, you can do so through Search Console > Site Settings > Crawl Rate.
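Here’s how a Bing-only crawl-delay rule looks in robots.txt, read back with Python’s standard-library `urllib.robotparser` (a generic robots.txt parser, not Bing’s or Google’s own). The 5-second value is a placeholder; pick one that suits your server.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that asks only Bing's crawler to slow down.
ROBOTS_TXT = """\
User-agent: bingbot
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.crawl_delay("bingbot"))    # 5
# No Crawl-delay applies to Googlebot here, and Google ignores the
# directive anyway; use Search Console to adjust Googlebot's crawl rate.
print(parser.crawl_delay("Googlebot"))  # None
```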

8. Prevent Indexing with Disallow

Disallowing a page doesn’t keep it out of the index. Search engines won’t be able to crawl it, but the URL can still be indexed and appear in search results if any other page links to it, whether internally or externally.

9. Sitemaps for Smartphones

Don’t list smartphone-compatible pages in mobile sitemaps; mobile sitemaps are only for feature phone pages. If you use separate smartphone and desktop URLs, use a normal XML sitemap that lists only the desktop URLs, with each smartphone URL annotated as rel=alternate.
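For separate desktop and smartphone URLs, an annotated sitemap can be generated with the standard library’s `xml.etree.ElementTree`, as in the minimal Python sketch below. The example.com and m.example.com URLs are placeholders, and the media query is the one used in Google’s separate-URLs examples; treat the whole thing as a starting point rather than a finished generator.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"
MOBILE_MEDIA = "only screen and (max-width: 640px)"

# Serialize as <urlset xmlns=...> and <xhtml:link> rather than ns0:/ns1:.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("xhtml", XHTML_NS)


def build_sitemap(url_pairs):
    """url_pairs: iterable of (desktop_url, smartphone_url) tuples."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for desktop, smartphone in url_pairs:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        # Only the desktop URL goes in <loc>.
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = desktop
        # The smartphone URL is an alternate of the desktop page.
        ET.SubElement(url, f"{{{XHTML_NS}}}link", {
            "rel": "alternate",
            "media": MOBILE_MEDIA,
            "href": smartphone,
        })
    return ET.tostring(urlset, encoding="unicode")


print(build_sitemap([("https://example.com/page", "https://m.example.com/page")]))
```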

Find out more from this Nov. 2014 Webmaster Hangout.

10. Ignoring Social Media

While the value of hashtags and “likes” may be difficult to understand, social media’s role as an indicator of site validity is tangible and shouldn’t be ignored. Building up social profiles signals to Google and other search engines that people like and are interacting with your site.

11. Visible PageRank

In October 2014, Google indicated that public PageRank values would not continue to be updated. In fact, they haven’t been updated since October 2013.

12. Reconsideration Requests for Algorithmic Penalties

When it comes to reconsideration requests, Google notes that they should only be sent in when a site has been affected by a manual action:

“If your site’s visibility has been solely affected by an algorithmic change, there’s no manual action to be revoked, and therefore no need to file a reconsideration request.”

A Google Penguin algorithmic penalty, caused by link spam or other spammy practices, will only be lifted at the time of the next algorithm update, and only if the issue has been removed.

Search Engine Land has a handy guide for discovering and fixing algorithm penalties.

13. Disallow and Meta NoIndex

Combining a robots.txt disallow with a meta noindex tag is often used (incorrectly) to keep pages from being crawled and indexed. It doesn’t work: because the page is disallowed in robots.txt, search engine crawlers never fetch it, so they never see the noindex tag.
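To find pages stuck in this trap, check each noindexed URL against your robots.txt rules. Below is a minimal Python sketch using `urllib.robotparser`; it assumes you already have a list of URLs carrying a meta noindex tag (hypothetical here). Note that this parser does simple prefix matching, so it won’t evaluate Google-style `*` or `$` wildcards in paths.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/
"""


def blocked_noindex_pages(robots_txt: str, noindex_urls: list[str],
                          agent: str = "Googlebot") -> list[str]:
    """Return noindexed URLs that robots.txt forbids the crawler to fetch.

    A crawler that can't fetch a page never sees its meta noindex tag, so
    the tag does nothing for these URLs.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindex_urls if not parser.can_fetch(agent, url)]


pages_with_noindex = [
    "https://example.com/drafts/old-post",
    "https://example.com/tags/misc",
]
print(blocked_noindex_pages(ROBOTS_TXT, pages_with_noindex))
# ['https://example.com/drafts/old-post']
```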

14. Change Frequency and Priority in XML Sitemaps

Google’s John Mueller has suggested that Google rarely uses the change frequency (changefreq) and priority values in XML sitemaps. Instead, he advises telling Google when each page last changed, using the “last modified” date in the lastmod tag.
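If your existing sitemaps still carry changefreq and priority values, a small script can strip them and fill in lastmod. This is a minimal Python sketch with `xml.etree.ElementTree`; the `last_modified` mapping is hypothetical and would come from your CMS or file timestamps in practice.

```python
import xml.etree.ElementTree as ET
from datetime import date

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", NS)  # serialize without an "ns0:" prefix


def modernize_sitemap(sitemap_xml: str, last_modified: dict[str, date]) -> str:
    """Drop changefreq/priority and set lastmod for URLs we have dates for."""
    root = ET.fromstring(sitemap_xml)
    for url in root.findall(f"{{{NS}}}url"):
        # Remove the hints Google reportedly rarely uses.
        for tag in ("changefreq", "priority"):
            for element in url.findall(f"{{{NS}}}{tag}"):
                url.remove(element)
        loc = url.findtext(f"{{{NS}}}loc", default="").strip()
        if loc in last_modified:
            lastmod = url.find(f"{{{NS}}}lastmod")
            if lastmod is None:
                lastmod = ET.SubElement(url, f"{{{NS}}}lastmod")
            # W3C Datetime format; a plain YYYY-MM-DD date is valid.
            lastmod.text = last_modified[loc].isoformat()
    return ET.tostring(root, encoding="unicode")


OLD_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/</loc>
    <changefreq>daily</changefreq>
    <priority>1.0</priority>
  </url>
</urlset>"""

print(modernize_sitemap(OLD_SITEMAP, {"https://example.com/": date(2015, 3, 2)}))
```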

15. Spammy Comments

While comments used to play a role in link building, spending time leaving comments with links to your site is now a futile task. Random links in comment sections won’t help your SEO: Google’s introduction of the “nofollow” attribute means site admins can tell crawlers to ignore links in the comment section.
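On the admin side, most CMSs add nofollow to comment links automatically. Here’s a minimal Python sketch of the idea using the standard library’s `html.parser`: it re-serializes comment HTML and adds nofollow to each link’s rel attribute while keeping any existing rel values. The class and function names are hypothetical, and this is not a sanitizer; it doesn’t strip unsafe markup.

```python
from html import escape
from html.parser import HTMLParser


class NofollowRewriter(HTMLParser):
    """Re-serializes HTML, ensuring every <a> tag carries rel="nofollow"."""

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True: text arrives unescaped
        self.parts: list[str] = []

    def _render(self, tag, attrs, self_closing=False):
        if tag == "a":
            rel = next((v for k, v in attrs if k == "rel" and v), "")
            tokens = rel.split()
            if "nofollow" not in (t.lower() for t in tokens):
                tokens.append("nofollow")
            attrs = [(k, v) for k, v in attrs if k != "rel"]
            attrs.append(("rel", " ".join(tokens)))
        rendered = "".join(
            f' {k}="{escape(v)}"' if v is not None else f" {k}" for k, v in attrs
        )
        self.parts.append(f"<{tag}{rendered}{' /' if self_closing else ''}>")

    def handle_starttag(self, tag, attrs):
        self._render(tag, attrs)

    def handle_startendtag(self, tag, attrs):
        self._render(tag, attrs, self_closing=True)

    def handle_endtag(self, tag):
        self.parts.append(f"</{tag}>")

    def handle_data(self, data):
        # Re-escape text, since the parser decoded entities like &amp;.
        self.parts.append(escape(data, quote=False))


def add_nofollow(comment_html: str) -> str:
    rewriter = NofollowRewriter()
    rewriter.feed(comment_html)
    rewriter.close()
    return "".join(rewriter.parts)


print(add_nofollow('Great post! <a href="https://spam.example/">cheap pills</a>'))
# Great post! <a href="https://spam.example/" rel="nofollow">cheap pills</a>
```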

16. Sitemap URL in Robots.txt

In your robots.txt file, the sitemap URL must be absolute. Search engine crawlers ignore relative sitemap URLs.

Test your robots.txt file in Search Console. If there’s a problem, you’ll see the warning “invalid sitemap URL detected; syntax not understood.”
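You can also catch relative sitemap URLs before uploading the file. This minimal Python sketch reads Sitemap lines with `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or later) and flags any entry without an http(s) scheme and host; the sample file is a placeholder.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://example.com/sitemap.xml
Sitemap: /news-sitemap.xml
"""


def relative_sitemap_urls(robots_txt: str) -> list[str]:
    """Return Sitemap entries that are not absolute http(s) URLs."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    sitemaps = parser.site_maps() or []  # site_maps() returns None if there are none
    flagged = []
    for url in sitemaps:
        parts = urlsplit(url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            flagged.append(url)
    return flagged


print(relative_sitemap_urls(ROBOTS_TXT))  # ['/news-sitemap.xml']
```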

17. Site Submission

Google and other search engines are very good at indexing websites. If you build it, they will come, or something like that. There’s no need to submit your site to Google; they’ll find you.

18. Site: Queries

A URL can be counted in a site: query even if it has been noindexed or includes parameters removed with the parameter removal tool. If you use site: to check your index count, the data could be misleading.

To see how many pages of your site Google has indexed, check “Index Status” and the “Sitemaps” index count in Search Console.

You can confirm which version of a URL has been indexed by Google with an info: query. Google’s John Mueller notes that this method is “a rough way to check which URL we’re actually picking up for indexing.”

19. Vary HTTP for Mobile

The Vary HTTP header is only necessary when the same URL serves dramatically different content depending on the user-agent. If you serve your mobile content on a dedicated mobile URL instead, you don’t need the Vary HTTP header, even when that content differs dramatically from the desktop version.
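For the dynamic-serving case, the header is a one-line addition. Here’s a minimal sketch of a Python WSGI app that serves different HTML from the same URL depending on the user-agent and sends `Vary: User-Agent`. The substring-based mobile detection and the `show` helper are hypothetical placeholders for illustration, not a recommendation.

```python
# Naive placeholder detection; real sites need something more robust.
MOBILE_HINTS = ("Mobile", "Android", "iPhone")


def app(environ, start_response):
    """WSGI app using dynamic serving: one URL, HTML chosen per user-agent."""
    user_agent = environ.get("HTTP_USER_AGENT", "")
    is_mobile = any(hint in user_agent for hint in MOBILE_HINTS)
    body = b"<p>Mobile layout</p>" if is_mobile else b"<p>Desktop layout</p>"
    start_response("200 OK", [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(body))),
        # The response depends on User-Agent, so tell caches and crawlers.
        # A site with a dedicated mobile URL would not need this header.
        ("Vary", "User-Agent"),
    ])
    return [body]


def show(user_agent):
    """Call the app directly and return (body, Vary header) for inspection."""
    captured = {}

    def start_response(status, headers):
        captured["headers"] = dict(headers)

    body = b"".join(app({"HTTP_USER_AGENT": user_agent}, start_response))
    return body, captured["headers"]["Vary"]


print(show("Mozilla/5.0 (iPhone; CPU iPhone OS 8_0 like Mac OS X) Mobile"))
# (b'<p>Mobile layout</p>', 'User-Agent')
```

To try it in a browser, you could pass `app` to the standard library’s `wsgiref.simple_server.make_server`.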

For more on this, check out this Webmaster Hangout.

Now That You Know a Bit More About the SEO Tips to Avoid, We Can Show You Where to Get Started.

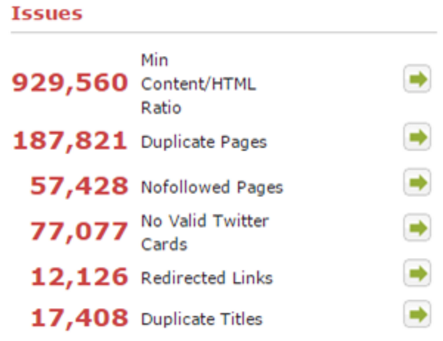

Check out DeepCrawl’s internal ranking system, DeepRank:

DeepRank is a measurement of internal link weight calculated in a similar way to Google’s basic PageRank algorithm. Almost all of DeepCrawl’s reports are ordered by DeepRank, so high-priority issues are always at the top.
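The exact DeepRank formula isn’t spelled out here, but the basic PageRank idea it’s compared to is easy to sketch. The Python below runs textbook PageRank power iteration (damping factor 0.85) over a hypothetical internal link graph; it illustrates the general technique, not DeepCrawl’s implementation.

```python
def internal_link_rank(links: dict[str, list[str]], damping: float = 0.85,
                       iterations: int = 50) -> dict[str, float]:
    """Basic PageRank by power iteration over an internal link graph.

    links maps each page to the pages it links to. Returns scores that
    sum to 1; higher means more internal link weight.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    if not pages:
        return {}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page first gets the "random jump" share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = set(links.get(page, []))  # count each link target once
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Page with no outgoing links: spread its weight evenly.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


site = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/", "/blog/post-1"],
    "/blog/post-1": ["/"],
}
scores = internal_link_rank(site)
print(max(scores, key=scores.get))  # /
```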

Happy Crawling!