BrightonSEO gets bigger and better every time, and consistently draws some of the best speakers out to the English seaside for some serious knowledge sharing. For BrightonSEO September 2018, alongside sitting in on some excellent talks, the SEO community also gathered for drinks and some nostalgic classic gaming, with a Street Fighter and Mario Kart tournament at the DeepCrawl pre-party.

We were also happy to help out conference attendees with some ping pong, air hockey and free beers in our beloved beer garden to take the edge off. Thanks to everyone who stopped by!

Free beer? Thanks, @DeepCrawl #BrightonSEO pic.twitter.com/6z5bq4dnHK

— Nat (@__nca) September 28, 2018

Martin Splitt – Building search-friendly web apps with JavaScript

Talk Summary

Martin from Google gave advice on how crawling works across the different JavaScript rendering strategies, as well as behind-the-scenes information on how Google renders content and where you can make the search engine’s job easier.

Key Takeaways

How do pages get indexed?

When using different web strategies for content delivery, such as lazy-loading, how can SEOs know if Google can actually see our content? Martin sought to clear up this confusion by explaining how the indexing process works.

Googlebot is basically a program that runs a browser. Here are the basics of how it works:

- Opens the browser and goes to particular URLs.

- Extracts content from pages.

- Content is put into the index.

- During the indexing stage, it will follow links on a page.

- Information found from following links will be passed back to the crawler.

If you don’t want pages to be indexed:

- Put them in the robots.txt as the first line of defence.

- Use the robots ‘noindex’ meta tag.

Duplicate content shouldn’t be indexed, so use the canonical tag to help Google decide which URL to pick. Without this, Google will have to figure out the preferred URL themselves. To learn more about helping Google pick the right canonical page, take a look at these slides on the topic.
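To make these indexing controls a little more concrete, here is a minimal sketch in Python (our own illustration, not something shown in the talk) that checks a robots.txt rule and reads the meta robots and canonical tags from a page. The helper names `crawl_allowed` and `indexing_hints` and the example URLs are made up for this example.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class IndexingHints(HTMLParser):
    """Collects the meta robots directives and the canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.robots = []       # e.g. ["noindex", "follow"]
        self.canonical = None  # href of <link rel="canonical">

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.robots += [d.strip().lower() for d in content.split(",") if d.strip()]
        elif tag == "link" and (attr.get("rel") or "").lower() == "canonical":
            self.canonical = attr.get("href")


def crawl_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets this user agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def indexing_hints(html):
    """Parse an HTML string and return the collected robots and canonical hints."""
    hints = IndexingHints()
    hints.feed(html)
    return hints


# Hypothetical robots.txt and page, for illustration only.
robots_txt = "User-agent: *\nDisallow: /private/\n"
page = '<head><meta name="robots" content="noindex, follow"></head>'
print(crawl_allowed(robots_txt, "Googlebot", "https://example.com/private/page"))
print(indexing_hints(page).robots)
```

One thing worth keeping in mind when combining the two: a crawler that is blocked by robots.txt never fetches the page, so it cannot see a ‘noindex’ tag on it.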

However, when using single page applications and JavaScript frameworks, the crawler can struggle to extract content from your site. Google is essentially flying blind as it can’t see the content it needs to add to the index.

How rendering works with Google

For classic web architecture:

- Templates and data are combined on the server, and the resulting HTML is then parsed and rendered in the browser.

For client-side rendering:

- The templates are transported to the browser, but the browser is confused about what to show initially.

- JavaScript starts running and being parsed after this, so there is a delay in the content being shown.

- This delay has a big impact on users.

For server-side rendering:

- The templates and data are still separate, but rendering happens on the server to then ship to the browser.

- Additional JavaScript can be added to run client-side for more interactivity for the user after the key content has initially rendered server-side – this is called hybrid rendering.

Rendering is deferred until Google has enough resource, which results in a second wave of indexing specifically for content that needs to be rendered. This can happen from minutes to days after the initial crawling and indexing of HTML content. For more information on rendering, take a look at the recap of our webinar with Bartosz Goralewicz all about JavaScript considerations for SEO.

The lesson: don’t rely on JavaScript if you want your frequently-changing content to stay up-to-date in the index.
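One rough way to act on this is to check whether your key content is already present in the HTML the server sends, before any JavaScript runs. The Python sketch below is our own illustration under that assumption; the function name `missing_from_initial_html` and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text outside <script> and <style>, i.e. what is there without rendering."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_initial_html(html, key_phrases):
    """Return the key phrases that do not appear in the un-rendered HTML text."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    # Collapse whitespace and lowercase so the comparison is forgiving.
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [phrase for phrase in key_phrases if phrase.lower() not in text]


# Hypothetical page: the heading is in the HTML, the price is injected by JavaScript.
html = '<body><h1>Blue widget</h1><div id="app"></div><script>render("Price: 10 GBP")</script></body>'
print(missing_from_initial_html(html, ["Blue widget", "Price: 10 GBP"]))
```

Anything this reports as missing would only be picked up in the second wave of indexing, once rendering has happened.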

Dynamic rendering as a solution

Martin described dynamic rendering as a “band-aid” to help website owners get their content indexed and get around the complications of traditional JavaScript rendering.

Here’s how it works to help your content be seen and indexed:

- You can run HTML and JavaScript into a renderer which renders on the server.

- The rendered content is sent as static HTML back to the crawler.

- Note: this should only be used for your most important content, not additional ‘bells and whistles’.

Google should be publishing developer documentation this week, so keep an eye out for it.

You can use Puppeteer and Rendertron to implement dynamic rendering.
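The core decision in dynamic rendering can be sketched in a few lines of Python. This is only an illustration of the idea, not Google’s reference implementation: the user-agent list is a hypothetical, non-exhaustive example, and `prerender` is a stand-in for whatever renderer you actually use (such as Rendertron or Puppeteer).

```python
import re

# Hypothetical, non-exhaustive list of crawler user-agent tokens.
CRAWLER_PATTERN = re.compile(r"googlebot|bingbot|twitterbot|linkedinbot", re.IGNORECASE)


def is_crawler(user_agent):
    """Return True if the user-agent string looks like one of the listed crawlers."""
    return bool(CRAWLER_PATTERN.search(user_agent or ""))


def choose_response(user_agent, prerender, client_shell):
    """Serve static, pre-rendered HTML to crawlers and the normal JavaScript app to users.

    `prerender` is a callable standing in for a server-side renderer;
    `client_shell` is the usual HTML shell that boots the JavaScript app.
    """
    if is_crawler(user_agent):
        return prerender()
    return client_shell


# Hypothetical usage with placeholder markup.
shell = '<div id="app"></div><script src="/app.js"></script>'
googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
print(choose_response(googlebot, lambda: "<h1>Products</h1>", shell))
```

Passing the renderer in as a callable means the expensive rendering step only runs for crawler requests.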

.@g33konaut is presenting the JS / SEO situation very well and honestly #BrightonSEO pic.twitter.com/QDmwg80Fnm

— Malcolm Slade ¯\(°_o)/¯ (@SEOMalc) September 28, 2018

What to watch out for with rendering

- Fragment identifiers: Don’t abuse the fragment identifier (# or #!) to render original content. This is a hack that existed before anything else like the History API.

- Soft 404s: Google is seeing a lot of soft 404s for single-page applications where the server returns a 200 status when the page is requested, but the content says there’s nothing here. Google Search Console will warn you about this. Use HTTP statuses 404, 410 or 301 if the content is actually gone – the status code the server returns needs to line up with what’s on the page.

- Service workers: When using service workers (e.g. for PWAs), they can provide content without the server even being involved. JavaScript can make it look like indexing is working, but this might not be the case. Make sure your server is still configured correctly.

- Onclick elements: Stick to <a> tags with href as this helps people browsing with assistive technologies as well as search engine crawlers.

- Accidental noindex: Don’t use JavaScript to remove meta robots tags like noindex, and avoid sending conflicting messages in the HTML vs the rendered content. For example, Google won’t render a page that you’ve told them not to index in the HTML in the first place, so any instructions to remove the tag via JavaScript won’t be seen.
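As a rough illustration of the soft 404 point above, here is a small Python heuristic that flags responses where the status code and the content disagree. The phrase list is a hypothetical example and would need tuning for your own error templates; it is a sketch, not how Google detects soft 404s.

```python
import re

# Hypothetical phrases that suggest an error page; adjust for your own templates.
NOT_FOUND_HINTS = re.compile(
    r"page not found|nothing here|no longer available", re.IGNORECASE
)


def looks_like_soft_404(status_code, body_text):
    """Return True when a page says 'not found' but was served with a 200 status."""
    return status_code == 200 and bool(NOT_FOUND_HINTS.search(body_text))


# Hypothetical responses: same error content, different status codes.
print(looks_like_soft_404(200, "<h1>Sorry, page not found</h1>"))
print(looks_like_soft_404(404, "<h1>Sorry, page not found</h1>"))
```

You could run a check like this over the status codes and rendered text from a crawl of your single-page application to find routes that need a proper 404, 410 or 301.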

Testing how your site renders for Google

- Use the mobile-friendly testing tool, as this shows you the rendered HTML source code so you can check that everything displayed makes sense and is showing properly. As an added bonus, this tool also helps you optimise for mobile traffic (Google is currently working on making the screenshot of your site in the tool scrollable).

- Use the Google Search Console URL inspection tool and live test feature. The index caches things so it’s sometimes hard to see if something has worked or changed, so these new features allow you to see how Google can handle your site in real-time.

Martin also revealed that Google is working on improving its rendering service to update its current use of Chrome 41. This will be a long and complicated process, however, so for the time being check the official documentation to see what Google can and cannot do with rendering and the limitations of Chrome 41 to consider.

Jamie Alberico – SEO for Angular JS

Talk Summary

Jamie from Arrow Electronics explained why Angular JS is a useful tool to help you build great websites, as well as how you can test it to make sure it is complementing your SEO strategy, not harming it.

You can view Jamie’s slides here.

Key Takeaways

What is Angular JS?

Angular is like ‘code lego’ with modular components that let you scale across JavaScript applications. It’s also open source which means sharing and collaboration between developers and SEOs.

You can think of it as a pop-up book: only a few lines of code are needed to create a whole website from just a template and some data.

The downside of Angular is that it strains the server, which is needed to assemble the website from the lines of code. However, once templates are loaded they don’t need to be reloaded so this is a one time job.

“If google is a library, a website is a book & a site with #angular is a pop up book” – this is the best way I’ve heard anyone describe angular. Quote from @Jammer_Volts during @brightonseo #BrightonSEO #javascript pic.twitter.com/PfCHnN6Pa8

— JP Sherman (@jpsherman) September 28, 2018

The problem with rendering

Before the announcement at Google I/O, we used to think of crawling and indexing as one instantaneous moment. However, the news of there being a second wave of indexing for JavaScript-rendered content shook everything up.

Now we know that JavaScript-powered content will be rendered and indexed by Google “whenever resource becomes available,” but what does this mean? Days? Weeks? Never?

Also, if the user doesn’t have JavaScript enabled in their browser or things go wrong (which can often happen…) websites can break for search engines and users.

Jamie found a workaround for this by testing moving key content to be rendered in the back-end, which significantly improved indexing.

How to tell if search engines can see your content

So, if your pages use JavaScript, can search engines actually see your content? The evidence says: probably not.

Here’s what you should do to make sure your content is seen:

- Timeouts often happen when rendering JavaScript, so check the ‘Blocked Resources’ report in Google Search Console to identify these issues.

- Use Angular Universal because it doesn’t rewrite your code and helps with server-side rendering. It creates beautiful, fully-parsed content!

- Use pre-rendering services, but be warned that the pre-rendering services can own you. Be wary of your codebase and what you rent vs own.

- To implement pre-rendering, adopt Puppeteer or Rendertron.

Other tips on working with JavaScript

- For analytics tracking: Virtual Page Views are supported in Google Analytics. This shows you views on pages powered by JavaScript without having to rely on the server to refresh, which is how page views are normally seen.

- For your SEO tool belt: You’ll need visibility, diagnostics and iterations.

- Structured data rendering: Parse structured data in your HTML and use JSON-LD. Don’t split structured data between the HTML and DOM, keep it all just on one.
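For the structured data tip, the sketch below shows one way to generate a single JSON-LD block server-side in Python, so that it ships in the HTML rather than being split between the HTML and the DOM. The helper name `json_ld_script` and the example values are our own, not taken from Jamie’s slides.

```python
import json


def json_ld_script(data):
    """Serialise a dict as a JSON-LD <script> block ready to place in the page HTML."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a value can never close the <script> element early.
    payload = payload.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Hypothetical values for illustration.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "SEO for Angular JS",
    "author": {"@type": "Person", "name": "Jamie Alberico"},
}
print(json_ld_script(article))
```

Keeping all of the markup in one generated block makes it easier to test that the structured data search engines see is complete.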

The main message that Jamie wanted us all to take away was to iterate and test your projects, be open-source, then share what you’ve learned with others in your industry.

Marie Haynes – Super Practical Nuggets from Google’s Quality Raters’ Guidelines

Talk Summary

Marie shared her expertise on search engine algorithms and gave insights into how to improve website quality (and, therefore, rankings) by looking at examples from Google’s Quality Raters’ Guidelines.

Key Takeaways

What do Google’s Quality Raters do?

First of all, Marie recommended setting aside some time to go and read Google’s Quality Raters’ Guidelines (QRG) because there is so much useful information in there to help you think about how to improve the quality of your website for users.

The Quality Raters at Google are different from the webspam team. They won’t give you a penalty for quality issues, but they will review your site against the quality guidelines they are set. This information is then fed back to Google engineers who will tweak the algorithm as needed to make sure the highest quality sites are being given the most visibility.

This indirectly affects rankings because the algorithm mimics what is seen and judged against the QRG.

Reputation as a ranking factor

The reputation of websites and businesses is emphasised throughout the QRG, even though there’s only so much you can do about this as an SEO. The Quality Raters are told to flag issues where there are even mildly bad opinions of a site externally. They are also told to look out for negative reviews as these are a sign of very low quality. Businesses will be rated F for this, on a scale of A+ to F.

If people online are saying that they don’t like a business, it will be harder to rank well.

To address this issue, look at the quality of the reviews that have been left for your business. Are half of them negative, and, to make matters worse, are most of your competitors’ reviews positive? If so, this will certainly affect your rankings.

How Google’s algorithm distinguishes between high and low-quality content – @Marie_Haynes #BrightonSEO. Having comments on the website is a good thing, if moderated! PS: Like the GIFs pic.twitter.com/NNdKfnUKOE

— Cindy Heidebluth (@cheidebluth) September 28, 2018

The August 1st update & E-A-T

Marie has seen that most of the sites affected by the August 1st update had issues with E-A-T (Expertise, Authoritativeness and Trustworthiness), particularly around bad reviews and trust, as many of them had received user complaints about their businesses. In the QRG, low-quality pages are defined as having low E-A-T, so these factors do play a part in influencing the algorithms.

If you want to know how to improve your site’s E-A-T, Google’s Gary Illyes suggested that this is influenced by:

- Good content

- Links from high-quality sites

- A thriving community

I asked Gary about E-A-T. He said it’s largely based on links and mentions on authoritative sites. i.e. if the Washington post mentions you, that’s good.

He recommended reading the sections in the QRG on E-A-T as it outlines things well.@methode #Pubcon

— Marie Haynes (@Marie_Haynes) February 21, 2018

Sites with quality user-generated content (UGC) have been shown to rank highly because they feature the personal experiences of their users, and this is helpful content for others. This means you should add more high-quality UGC to your website because useful and genuine comments are the sign of a healthy website.

DYK quality comments can be a signal of a healthy website? pic.twitter.com/Yugh9AhBWz

— Gary “鯨理” Illyes (@methode) March 10, 2017

Ways to improve the quality of your website from the QRG

- Find authority sites in your space and get them to mention you.

- Produce content that people want to link to and mention.

- Have a Wikipedia page as this shows importance.

- Clearly communicate the level of expertise of the authors on your site.

- Include resources and references where possible as a pop-up or at the bottom of a page.

- Make sure you aren’t forcing users straight into sales funnels without providing them with the information they came looking for – Google will stop showing sites that aggressively sell to people.

The key message that Marie wanted us to take away is to be authentic with our websites. SEO is about building trust, not links. People can see past what is fake, and Google is getting better every day at figuring out which sites are the ones that help people the most.

Luke Sherran – Video ranking factors in YouTube

Talk Summary

Luke from Falcon Digital examined the key ranking factors for making videos appear more prominently in YouTube, and how to maximise opportunities within the world’s second largest search engine.

Key Takeaways

The opportunities within YouTube & video marketing

Behind Google, YouTube is the second largest search engine in the world. That means there is a huge amount of opportunity to tap into.

The best way to do this is with an integrated approach to video and SEO – don’t just create a video without knowing how to promote it or what to do with it. It’s very difficult to reverse-engineer a marketing strategy around an existing video.

YouTube’s top 5 traffic sources

- Notifications: These are initially sent to around 10-15% of your subscribers when you publish a new video.

- Seeding: This is external traffic through social shares, external embeds, email marketing etc.

- Organic Search: This makes up around 20-40% of YouTube traffic.

- Related Videos: You can get traffic from bigger, more established channels.

- Browse Features: Great content has its reach extended by being added to the homepage as a featured video in the ‘Trending’ section, for example (you’ll know when this happens because you’ll see a huge spike in views!)

How YouTube’s algorithms work

YouTube is always experimenting and adding to its many different algorithms rather than changing them. This means the fundamentals always stay the same.

The algorithms are influenced more by machine learning and viewer satisfaction than metadata because there is more to be learned from user behaviour than anything that can be manipulated or written by brands themselves.

These are the key metrics to optimise for:

- Watch time: This metric is very hard to game.

- Session starts: Starting a new session is a positive metric and ending a session is a negative one.

- View velocity: Within the 1st hour and 24 hours after publishing, the more views your video gets, the more powerful signals are sent to the YouTube algorithm.

- Social signals: Likes, dislikes and shares all point towards quality which helps in deciding which are the best videos to serve to viewers.

- Video quality: This will heavily influence viewer satisfaction.

The YouTube metrics you should pay attention to from @lsherran

⌚Watch time

▶️ Session starts

View velocity

Social signals

Video quality

Top stuff.

— TelegraphSEO (@TelegraphSEO) September 28, 2018

Luke gave this example looking at how thumbnails in particular feed into the algorithm as a ranking factor:

- This is done through a process called collaborative filtering.

- YouTube will group viewers together based on their attributes (e.g. gender and age) to predict their behaviour.

- Different thumbnail options will be tested and the reach will be extended for the ones that get clicked the most.

- The thumbnail options that don’t get clicked will be demoted.

- This whole process is dictated by quality – low quality, non-engaging videos can disappear from YouTube as they continue to not be clicked and slip down the rankings.

Best practices for uploading videos

Tick all these boxes for video optimisation:

- Titles: Must be interesting with a hook – include keywords but not at the expense of a good title for the user.

- Descriptions: Include keywords within the first paragraph, but this is a weak signal.

- Tags: These have a small ranking correlation but they are used for assessing relevance.

- Captions: Add these manually, or to save time, edit the auto-generated ones to make them accurate.

- Playlists: A playlist with a well-optimised title and description seems to pass on relevance to the videos within it.

- Upload Publish Time: Post videos when they’ll be most viewed or upload them at regular intervals so your viewers will come to your channel expecting new content.

Matt Siltala – Creating the Best Content in the World

Talk Summary

Matt and his company Avalaunch Media produce a lot of creative content for some of the biggest global brands, so he shared his own experiences on how to make the most out of visual content.

Key Takeaways

These are the points that are most often raised by clients:

- “We need to create more content.”

- “We need to be on all social media.”

- “I hear we need to be creating infographics.”

- “I hear we need to be creating video.”

But don’t get trapped in this black hole. You do need to do these things, but only in the right way. Stop thinking about likes and links – instead, figure out what the purpose will be for each piece of content (lead gen, brand awareness etc) as well as what problem it solves.

“Don’t get trapped in the black hole of wanting to create more types of content and being on all social media networks. Every content piece you make should have a purpose.” – @Matt_Siltala #contentmarketing #brightonseo pic.twitter.com/AcEj6u6b27

— Sendible (@Sendible) September 28, 2018

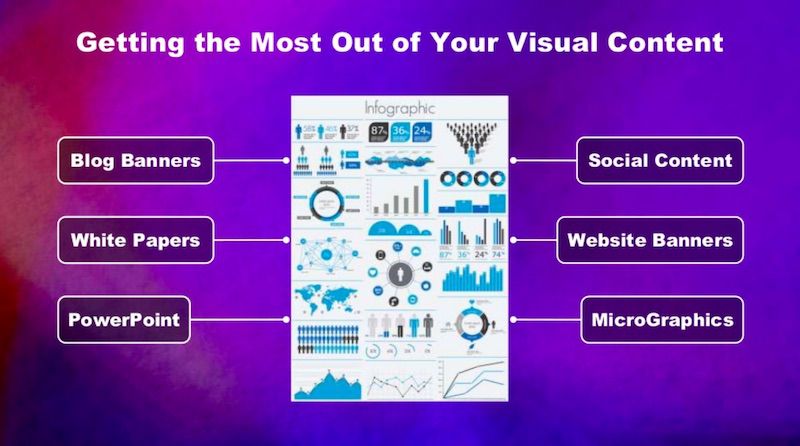

How to utilise infographics

Think about the different ways you can use and repurpose the asset of an infographic as you’re planning and creating it.

Here’s a checklist of how to best use an infographic at every stage:

- Create the infographic.

- Once it’s been approved, design an ‘infogram’ with the key stat or CTA (snippets of the infographic for Instagram).

- Deliver the infogram across Facebook, LinkedIn and Twitter.

- Create a quick snippet or video (a ‘motiongraphic’) based on the key stat or CTA chosen by the client.

This gives your audience something much easier to consume. The best way to get the most out of your visual content is to design it with ways to cut it up and take out small sections.

Matt gave an example of how his team’s ‘Social Meowdia’ infographic continues to be one of the most-shared pieces of content they’ve created to date. Its success depends on expanding and repurposing its content. It started with the social media channels as described by cats, and was then repurposed into a version featuring dogs which helped to raise a lot of money for the Humane Society.

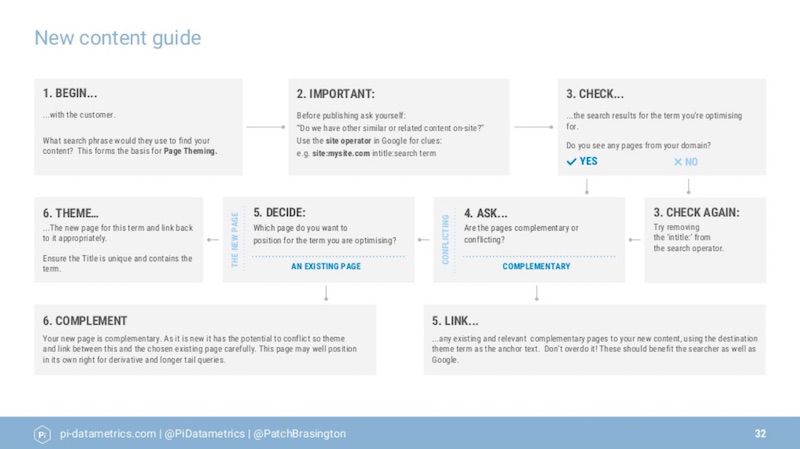

John Brasington – Contextual optimisation: How to create value led content for your ecosystem

Talk Summary

John from Pi Datametrics talked about how to ensure that new content adds value to the fragile ecosystem of your existing website, and how new and old content can be optimised to complement one another.

Key Takeaways

As SEOs we are privileged because we have access to keyword data which is a window into the mindset of users. We can use this information to make it easier for them to find useful information.

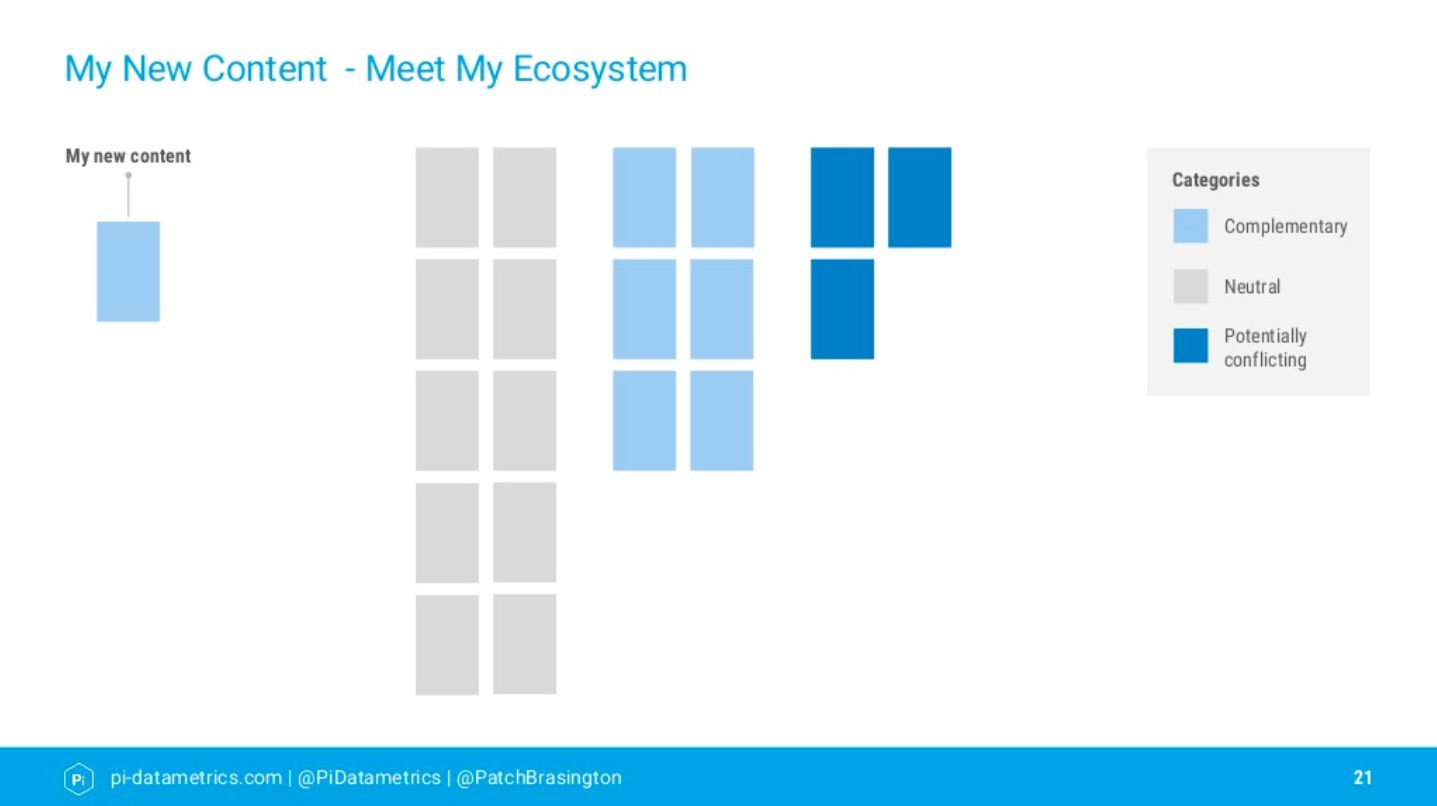

Contextual optimisation & your site’s ecosystem

Contextual optimisation is based on understanding your website’s ecosystem and getting results from your content. Start by analysing the SERP ecosystem and which features appear for the keywords you want to target. Then you can get ahead of your competitors by optimising for these features.

Then categorise your own site’s ecosystem and optimise it by making connections between the different content on your website. Before new content is created, stop and think about how it will impact your ecosystem, namely site architecture and the customer journey. If new content isn’t connected in the same way, it might conflict with the context and topic connections that already exist.

How to optimise your site for new content

- Start by categorising your content: Look at what’s complementary, neutral or potentially conflicting, as well as what’s supporting or duplicating existing content.

- Set context: Decide which page is the most appropriate and valuable doorway into your site’s world for each term.

- Make contextually relevant connections via links: Do this with users and search engines in mind.

Adding appropriate linking between complementary pages transfers information and understanding between pages to create a better-flowing user journey.

Figure out your site’s entity groups by using the ‘site:’ operator to find relevant pages by topic e.g. ‘site:cnn.com “mueller case”’. This is the basis for categorising.

If you have conflicting articles, the keyword will probably be in the title, so refine this query to ‘site:cnn.com intitle:“mueller case”’.
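If you need to run these checks across many topics, the queries are easy to generate. The Python sketch below is our own illustration: the function names are hypothetical, and the search URL simply puts the query into Google’s standard `q` parameter.

```python
from urllib.parse import urlencode


def site_query(domain, phrase, title_only=False):
    """Build a 'site:' query for an exact phrase, optionally restricted to page titles."""
    quoted = '"' + phrase + '"'
    if title_only:
        quoted = "intitle:" + quoted
    return "site:" + domain + " " + quoted


def search_url(query):
    """Turn a query string into a Google search URL."""
    return "https://www.google.com/search?" + urlencode({"q": query})


# The two queries from the example above.
print(site_query("cnn.com", "mueller case"))
print(site_query("cnn.com", "mueller case", title_only=True))
```

Generating both forms for each topic gives you the broad list for categorising and the narrower title-only list for spotting conflicting articles.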

Start understanding your content as an ecosystem and not as isolated instances. Understanding how everything connects together will positively impact revenue growth and visibility.

Meg Fenn – Using stunning design to leverage your SEO

Talk Summary

Meg from Shake It Up Creative explained how design can be used to create better user flows and effectively communicate more impactful messages and sentiments to your users.

Key Takeaways

Creating a better user flow with graphic design

SEO is about communication – we have to get things across to users clearly and effectively. Similarly, the main focus of graphic design is communication which inspires people to take action.

Graphic design evokes, communicates and converts. This is because it inspires trust, and when people trust, they buy.

UI, UX and collaboration are the 3 key ways in which you can bridge the gap between design and SEO for a better flow between projects.

How design aids conversions

- Design and layout improve UX which improves rankings.

- Visual indicators (buttons etc) on a website are really important for making an impact on users and getting them to convert.

- Design contributes to the popularity of websites and how long people stay on them. Beautiful websites encourage us to explore further.

- Design creates something that resonates and emotionally connects – this results in engagement, conversion and customers sharing your site and its content.

How to design a website for trust

- Use high-quality photos.

- Use correct spelling.

- Use official brand assets.

- Make sure the site is fast to load.

- Make sure the site is accessible across devices.

These elements all build trust with users.

Also design for accessibility, such as using contrasting colours for people with colour-blindness (see WebAIM and ColorCube to learn more).

Tips for optimising your assets for conversions

- Reduce image file size to improve page load time. Use Photoshop, Compressor.io or ImageResize.org.

- Use CSS and HTML to optimise images onsite – style images as one block so you have one image to upload rather than separate tiles.

- Use the CSS ‘clip-path’ property for interactivity.

- Use image sprites for fewer image requests.

- Use SVG to build images directly into the source code.

Designing for mobile-first

Brain training is required because we’re so used to starting with desktop and going smaller down to mobile to make sure everything renders and appears correctly. Mobile mockups and designs shouldn’t be an afterthought any more. Tools such as Adobe XD, Adobe Spark and Bootstrap can help.

Mobile-first indexing helps (or forces…) SEOs, developers and designers to work better together to serve the best experience for mobile.

To learn more about mobile-first indexing, make sure you read our white paper on the topic.

More BrightonSEO September 2018 news still to come

We hope you found this recap useful! There were too many brilliant talks to be able to include them all in one post, so take a look at part 2 of our recap which will include even more talk takeaways. Also, our summary of the keynote session with Rand Fishkin can be found here.