Programmatic SEO is a trending concept that continues to attract the attention of SEO professionals in 2021. It offers an answer to the challenge of producing content at a large scale.

Programmatic SEO brings several core benefits to the table:

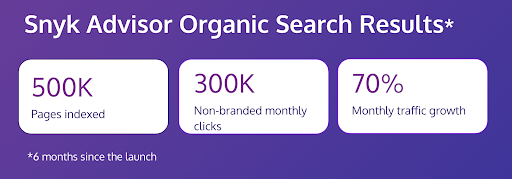

- Impactful results – results can scale quickly. In the case of the app my team built, we reached 300K non-branded clicks a month within 6 months of launch.

- Scaling fast – by using data and an SEO-friendly structure, programmatic builds allow us to dynamically develop thousands of pages.

- Backlinks – the great part about owning a programmatic asset is that it can keep attracting backlinks to your domain, because each page matches long-tail keyword intent with tailored content.

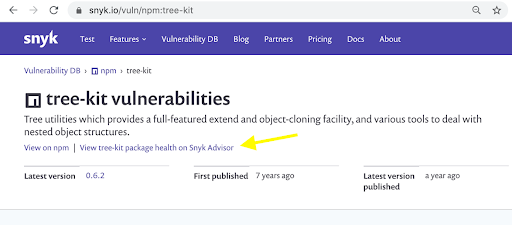

This article provides actionable insights based on my experience building Snyk Advisor with my team. Advisor is a programmatic app that helps developers make data-driven decisions when selecting open source packages. I’ll take you through the main challenges we faced while building a programmatic SEO asset from scratch: getting 500K+ pages indexed while keeping the content unique and free of duplication issues.

What you will get out of this article:

- Knowledge from our experience

- Top consideration factors for your SEO team when building a programmatic app

- Case study results from my team’s app

Let’s start with some basics.

What is programmatic SEO?

Programmatic SEO is an execution strategy used to target thousands of long-tail keywords by building a large number of landing pages at scale, for example, thousands of pages for “things to do in [city-name]” search terms. It relies heavily on developer resources to code the pages, so even though it scales well, it requires an initial investment.

After careful examination of all the benefits and challenges, we decided to proceed and build our own programmatic SEO asset – Snyk Advisor.

My Experience – Building a Programmatic Web App (Advisor)

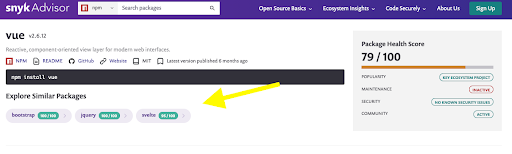

Advisor is a programmatic web app built by my team. Its primary goal is to offer consolidated information about open source packages, including health, popularity, and maintenance. As you might imagine, there are thousands of open source packages, and covering them all requires a mindset of “how can we create scalable content?”

Our team saw an opportunity in leveraging, processing, and aggregating data from different sources to provide a more holistic picture of open source packages. We also found that developers wanted answers to questions such as “Should I use this package?”, “Is it secure?”, and “Should I use something else?”, and this need was not being met on the web. So we set out to build a portal that answers those questions on one page.

And, by our benchmarks, it’s been a success.

Now let’s dive into how you can apply our lessons to your own SEO programs.

Getting started: Planning and structure

Before tackling the challenges, identify the opportunity and make sure the right search intent is behind it. This involves two main steps, identifying:

- Head terms – high-volume, category-level keywords.

  - Example: hotels, gyms, restaurants, etc.

- Modifiers – keywords used along with head terms to target long-tail keywords.

  - Example: descriptive [cheap], [healthy], [fancy]; location [city], [country], etc.

Do competitive research to understand whether what you plan will match the user’s search intent and whether you can realistically compete for those terms. The next step is to plan the structure of your programmatic asset.
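To make the head term × modifier idea concrete, here is a minimal Python sketch that combines the example head terms and modifiers from this section into long-tail keywords and maps each one to a URL and a meta title. The lists, the `/head/location/descriptor/` URL pattern, and the title pattern are hypothetical placeholders; in practice you would keep only the combinations your keyword research shows have real search demand.

```python
import re
from itertools import product

# Hypothetical inputs based on the examples in this section.
HEAD_TERMS = ["hotels", "gyms", "restaurants"]
DESCRIPTORS = ["cheap", "fancy"]
LOCATIONS = ["london", "new york"]


def slugify(text: str) -> str:
    """Lowercase the text and replace runs of non-alphanumerics with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def build_pages(heads, descriptors, locations):
    """Return one page spec (keyword, URL, title) per head/modifier combination."""
    pages = []
    for head, desc, loc in product(heads, descriptors, locations):
        pages.append({
            "keyword": f"{desc} {head} in {loc}",
            # Hypothetical URL pattern: /<head term>/<location>/<descriptor>/
            "url": f"/{slugify(head)}/{slugify(loc)}/{slugify(desc)}/",
            # Hypothetical meta title pattern.
            "title": f"{desc.title()} {head.title()} in {loc.title()}",
        })
    return pages
```

Calling `build_pages(HEAD_TERMS, DESCRIPTORS, LOCATIONS)` yields 12 page specs (3 head terms × 2 descriptors × 2 locations). Deciding these patterns up front keeps URLs and titles consistent across thousands of pages.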

4 programmatic SEO challenges we faced (that you probably will too!)

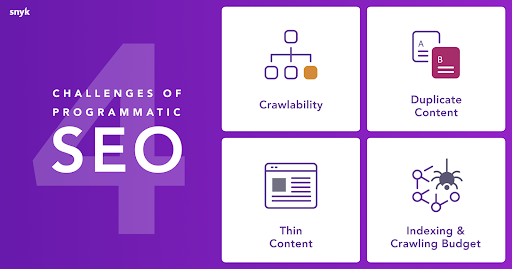

Designing and launching our app took several months. Here are the main challenges we faced along the way:

Summary of our challenges:

- Crawlability – getting the pages naturally discovered and crawled by Googlebot.

- Duplicate content – a serious risk when you build at scale, because every page follows the same pattern yet each one needs to stay unique.

- Thin content – most programmatic assets are built on images, graphs, and other data points that lack descriptive text, and that missing text can have a big impact on your discoverability and rankings on Google.

- Indexing & crawl budget – making sure your most important pages are indexed first, and keeping crawling and indexing fast by continuously monitoring for errors and page performance issues and fixing them promptly.

Let’s talk through each of these challenges one by one.

Crawlability

Crawlability is one of the most important issues large site owners should address. When you have thousands of pages, it is critical to prioritize which pages should be discovered by search engines. Also important is establishing an internal linking pattern that lends itself to strong information architecture.

The question you should ask yourself here is “How will you ensure that Google’s search engine bots can find your pages?”

Here are 4 ways you can do it:

1. Submitting XML sitemaps

This is an efficient and reliable way to make sure your pages get crawled. Keep in mind that a single sitemap file can list at most 50,000 URLs (and must be no larger than 50 MB uncompressed), so split larger sets across several sitemaps. Submit the sitemaps in Google Search Console (GSC) and also reference them from your robots.txt file. However, with thousands of pages, you shouldn’t rely solely on sitemaps: you want newly added pages to be discovered organically, without depending on manual submission.
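When you have more URLs than fit in one file, a common approach is to split them into several sitemaps and list those in a sitemap index file, which is what you then submit in GSC and reference from robots.txt. The sketch below assumes you already have the final list of URLs; the file names and `base_url` are hypothetical.

```python
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000  # sitemaps.org limit per sitemap file
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemaps(urls, base_url, max_urls=MAX_URLS_PER_SITEMAP):
    """Split URLs into sitemap files and add a sitemap index that lists them.

    Returns a dict mapping (hypothetical) file names to XML strings.
    """
    files = {}
    for start in range(0, len(urls), max_urls):
        chunk = urls[start:start + max_urls]
        # escape() turns characters such as & into XML entities.
        entries = "\n".join(f"  <url><loc>{escape(u)}</loc></url>" for u in chunk)
        name = f"sitemap-{start // max_urls + 1}.xml"
        files[name] = (
            '<?xml version="1.0" encoding="UTF-8"?>\n'
            f'<urlset xmlns="{SITEMAP_NS}">\n{entries}\n</urlset>\n'
        )
    root = base_url.rstrip("/")
    index_entries = "\n".join(
        f"  <sitemap><loc>{escape(root + '/' + name)}</loc></sitemap>" for name in files
    )
    files["sitemap-index.xml"] = (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<sitemapindex xmlns="{SITEMAP_NS}">\n{index_entries}\n</sitemapindex>\n'
    )
    return files
```

For 120,001 URLs this produces three sitemap files (50,000 + 50,000 + 20,001 URLs) plus one index file, so only the index needs to be submitted.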

Tip: Make sure to include only indexable URLs that return an HTTP 200 status code in the sitemaps you submit to Google. Run a test crawl with Screaming Frog to eliminate 3xx redirects and 4xx and 5xx errors. It is much easier to resolve these issues before launch. Keep in mind that a large number of errors directly affects a site’s crawl efficiency.
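If you export the test crawl results to CSV, a few lines of Python can turn them into a sitemap-ready URL list. The column names `Address`, `Status Code`, and `Indexability` are assumptions about the export format; check them against your own export and adjust.

```python
import csv
import io


def sitemap_ready_urls(csv_text: str) -> list:
    """Return URLs that returned HTTP 200 and are marked indexable in a crawl export.

    Assumes the export has 'Address', 'Status Code' and 'Indexability' columns.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    keep = []
    for row in reader:
        # DictReader fills missing cells with None, so fall back to "".
        status = (row.get("Status Code") or "").strip()
        indexability = (row.get("Indexability") or "").strip()
        if status == "200" and indexability == "Indexable":
            keep.append(row["Address"])
    return keep
```

Anything filtered out (redirects, broken pages, non-indexable URLs) is a candidate for fixing before launch rather than for submission.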

2. robots.txt file

The robots.txt file, which implements the Robots Exclusion Protocol, is one of the first places on your site that a search engine bot visits.

You should use it to:

- Link to your XML sitemap files

- Allow or disallow specific web robots (such as scrapers)

- Prevent robots from crawling specific sections of your site
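Here is a hypothetical robots.txt covering those three uses, checked with Python’s standard-library `urllib.robotparser`. The bot name `BadScraperBot`, the `/internal-search/` path, and the sitemap URL are placeholders. Keep in mind that `urllib.robotparser` implements the basic protocol and does not support every extension Google understands (such as `*` wildcards inside paths), and that only well-behaved bots honour these rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one scraper entirely, keep every bot out of
# an internal search section, and point crawlers at the sitemap index.
ROBOTS_TXT = """\
User-agent: BadScraperBot
Disallow: /

User-agent: *
Disallow: /internal-search/

Sitemap: https://example.com/sitemap-index.xml
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
print(rules.can_fetch("Googlebot", "https://example.com/package/requests"))  # True
print(rules.can_fetch("Googlebot", "https://example.com/internal-search/?q=x"))  # False
print(rules.can_fetch("BadScraperBot", "https://example.com/package/requests"))  # False
print(rules.site_maps())  # ['https://example.com/sitemap-index.xml']
```

Testing rules like this before deploying helps catch a `Disallow` that accidentally blocks the pages you want crawled.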

Note: disallowing bots from crawling a section of the site does not prevent it from being indexed. If someone on the web links to a page directly, it might still be displayed in the SERPs. If you don’t want a page to appear in the SERPs, use noindex or password protection. Google has to be able to crawl a page to see its noindex directive, so don’t also block that page in robots.txt.

3. Internal links

Internal links solve two problems at once: they help you avoid orphan pages, and they let Googlebot move from page to page, discovering new pages naturally. The best approach is to design a linking pattern that works on every page and gives broad coverage.
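One simple pattern with broad coverage is to link each page to its next few neighbours within the same category, wrapping around at the end, so every page in a category with two or more pages receives at least one inbound link. This is a hypothetical illustration, not how Advisor’s “related packages” are actually chosen; the category grouping and `k` are assumptions. The second helper reports orphans so you can catch pages the pattern misses.

```python
from collections import defaultdict


def related_links(pages, k=3):
    """Map each page slug to up to k 'related' slugs from the same category.

    pages: iterable of (slug, category) pairs. Slugs are sorted within each
    category and each page links to the next k pages, wrapping around, so
    every page in a category of two or more pages gets an inbound link.
    """
    by_category = defaultdict(list)
    for slug, category in pages:
        by_category[category].append(slug)

    links = {}
    for slugs in by_category.values():
        slugs.sort()
        n = len(slugs)
        for i, slug in enumerate(slugs):
            links[slug] = [slugs[(i + j) % n] for j in range(1, min(k, n - 1) + 1)]
    return links


def find_orphans(links):
    """Return pages that no other page links to."""
    linked = {target for targets in links.values() for target in targets}
    return sorted(set(links) - linked)
```

A category with a single page ends up as an orphan here, which is exactly the kind of gap a second linking source (such as an HTML sitemap) should cover.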

4. Creating an HTML sitemap

It is preferable to create a user-friendly sitemap with a logical structure behind it, for example, pages grouped by category or other criteria. Make sure to list the most important pages first. Alphabetical order is a less preferable option because it leaves no room for prioritization, and listing every page won’t make much sense (especially with thousands of pages).
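A minimal sketch of such a sitemap: group pages by category, order categories and pages by a priority score rather than alphabetically, and cap how many pages each category lists. The page fields (`url`, `title`, `category`, `priority`) and the cap of 50 are hypothetical.

```python
from collections import defaultdict
from html import escape


def html_sitemap(pages, per_category=50):
    """Render an HTML sitemap grouped by category, most important pages first.

    pages: iterable of dicts with hypothetical keys 'url', 'title', 'category'
    and 'priority' (higher means more important).
    """
    groups = defaultdict(list)
    for page in pages:
        groups[page["category"]].append(page)

    # Order categories by their most important page, not alphabetically.
    ordered = sorted(groups, key=lambda c: max(p["priority"] for p in groups[c]), reverse=True)

    sections = []
    for category in ordered:
        top = sorted(groups[category], key=lambda p: p["priority"], reverse=True)
        items = "".join(
            f'<li><a href="{escape(p["url"])}">{escape(p["title"])}</a></li>'
            for p in top[:per_category]
        )
        sections.append(f"<h2>{escape(category)}</h2>\n<ul>{items}</ul>")
    return "\n".join(sections)
```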

Beyond these four methods, incoming links from other pages on your domain, as well as from external sites, also help search engines discover your pages.

When designing our app, we leveraged multiple ways to improve discoverability:

- Internal linking pattern – suggesting “related packages” on each package page

- Inlinks from other places on our site and from other domains:

  - Internally, we made sure our Vulnerability Database pages link to Advisor package pages, which covered 150K+ pages.

  - We also reached out to our partners to get them to link to Advisor pages.

Thin content

Thin content is sparse content that gives little or no value to the reader.

If a page has little or no text, Google might treat it as thin content and interpret it as low value to the user, which can significantly hurt your chances of ranking.

Google still relies on text to understand and interpret page content. While graphs and images are great for the user, we made sure to add descriptive text on each page to help interpret the data.
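To catch thin pages before launch, you can count the visible words on each rendered template. This sketch uses Python’s `html.parser` and ignores `<script>` and `<style>` content. The 150-word threshold is an arbitrary, hypothetical cut-off for flagging pages to review by hand; Google does not publish a minimum word count.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect text nodes, skipping anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def visible_word_count(html: str) -> int:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.chunks).split())


def is_thin(html: str, min_words: int = 150) -> bool:
    """Flag a page for review if it has fewer visible words than min_words."""
    return visible_word_count(html) < min_words
```

A page made only of a title and a chart, for example, would be flagged, which is the case where descriptive text around the data helps most.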

Duplicate content

While trying to avoid the thin content issue described above, it is very easy to stumble into another problem – duplicate content.

Duplicate content is content that appears on the internet in more than one place.

To address this, we introduced a pattern that mentions the package name within the text on each package’s page.
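A minimal sketch of that idea: fill a text template with each package’s name and a few data points, then compare pages with word-shingle Jaccard similarity to spot templates that still produce near-duplicate text. The template, the field names (`stars`, `downloads`, `days`), and the package data are hypothetical, and shingle comparison is a generic near-duplicate heuristic rather than anything Google has documented.

```python
import re

# Hypothetical template; the fields are illustrative, not Advisor's actual data.
TEMPLATE = (
    "{name} has {stars} GitHub stars and {downloads} weekly downloads. "
    "The latest version of {name} was released {days} days ago."
)


def describe(pkg: dict) -> str:
    """Render the descriptive paragraph for one package page."""
    return TEMPLATE.format(**pkg)


def shingles(text: str, n: int = 3) -> set:
    """Return the set of n-word sequences in the lowercased text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def similarity(a: str, b: str, n: int = 3) -> float:
    """Jaccard similarity of the two texts' shingle sets (1.0 means identical)."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


alpha = describe({"name": "alpha-pkg", "stars": 1200, "downloads": 50000, "days": 3})
beta = describe({"name": "beta-pkg", "stars": 80, "downloads": 900, "days": 41})
print(round(similarity(alpha, beta), 2))
```

A consistently high score across pages suggests the template needs more page-specific text, not just a different name in the same sentence.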

Indexation & crawling budget

Crawl budget is a hot topic when it comes to programmatic SEO and large websites. How many URLs will Google crawl without trouble, and at what point will indexing start to slow down? If only we had known in advance! The good news is that we have encouraging numbers to share.

Our programmatic app currently has more than 500,000 pages indexed, all within 6 months of going live.

Think through your go-live strategy and create a list of the assets you want indexed first, so your most important pages aren’t left behind. There is another reason to get your best pages indexed first: Google assesses content quality and user signals (engagement, bounce rate, etc.), so proving the value of your assets from the start can positively impact both indexation pace and rankings.
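One way to make that priority list concrete is to score every page and release (or submit in sitemaps) the highest-scoring ones first, in waves. The scoring metric and wave size are hypothetical and would depend on your own data.

```python
def launch_waves(pages, wave_size):
    """Sort (url, score) pairs by score, highest first, and split them into waves.

    The score is a hypothetical priority metric, e.g. the monthly search
    volume of each page's target keyword.
    """
    if wave_size <= 0:
        raise ValueError("wave_size must be positive")
    ranked = [url for url, _ in sorted(pages, key=lambda p: p[1], reverse=True)]
    return [ranked[i:i + wave_size] for i in range(0, len(ranked), wave_size)]
```

For example, `launch_waves([("/a", 10), ("/b", 500), ("/c", 90)], 2)` puts `/b` and `/c` in the first wave and `/a` in the second.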

Tip: Create multiple properties in GSC for different subfolders of your programmatic asset. This gives you a full view of coverage and issues per section, without having to export and filter a report sample that is limited to 1,000 rows.
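Before creating properties, it can help to see how your URLs are distributed across subfolders. The sketch below counts URLs per path prefix; each prefix holding a meaningful share of pages is a candidate for its own URL-prefix property in GSC. The example URLs and the `depth` parameter are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def urls_per_subfolder(urls, depth=1):
    """Count URLs per subfolder prefix, e.g. '/package/' at depth 1.

    The last path segment is treated as the page itself, so this assumes
    page URLs don't end with a trailing slash.
    """
    counts = Counter()
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        folders = segments[:-1][:depth]
        counts["/" + "".join(f"{s}/" for s in folders)] += 1
    return counts
```

Running it at `depth=2` on URLs like `https://example.com/package/npm/x` separates `/package/npm/` from `/package/python/`, which can be useful when one section is much larger than the others.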

SEO Tools

Related to the previous points, make sure that you’ve checked and optimized:

- Website and page performance (PageSpeed Insights, WebPageTest.org)

- On-page markup (SEO Minion Chrome extension, and your browser’s Inspect tool)

- Website security (vulnerability scanner)

- Crawlability, errors, and metadata (SEO crawler)

- Monitoring (GSC and Google Analytics)

Is it worth it?

The short answer is yes! Building a programmatic asset is a big undertaking that requires a lot of research, planning, and resources, but the results are worth it. In the digital era, reaching hundreds of thousands of users in your target audience and building trust by providing value is priceless. Invest enough time in planning, testing, and optimizing your asset before going live, and keep in mind that it will require ongoing maintenance and optimization afterward.

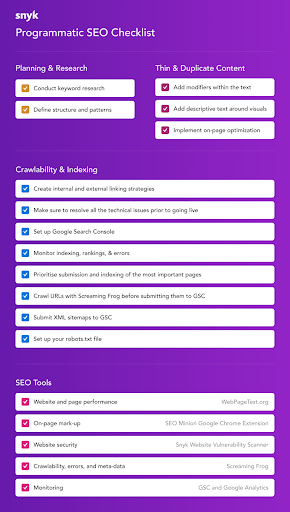

10 Key takeaways

- Planning and research are key success factors. Think in advance about how you will structure your asset and what your URL, meta title, and meta description patterns will be.

- Make sure to add modifiers and descriptive text on the page to avoid duplication and thin content.

- Create internal and external linking strategies.

- Implement on-page optimization and resolve all technical issues before going live.

- Be wise about spending your crawl budget: prioritize submission and indexing of the most important pages first.

- Crawl URLs with Screaming Frog before submitting them to Google to make sure you include only 200 status code URLs in your XML sitemap files.

- Set up your robots.txt file – link your XML sitemap files, allow or disallow specific web robots (such as scrapers), and prevent robots from crawling specific sections of your site.

- Submit XML sitemaps to GSC and make sure not to exceed the 50,000-URL limit per sitemap.

- Create multiple GSC properties for different subfolders of your asset to make indexing, error, and traffic tracking easier.

- The project does not end with going live. Continue to track and monitor indexing, rankings, and errors, and plan to have developer resources available to fix technical issues (e.g., server errors).