Why URL duplication could be harming your website (and how to stop it)

URL duplication occurs when multiple URLs return the same page within the same site, for example:

/news.aspx and /news.html

/contact and /CONTACT

/news?source=rss and /news?source=header

Many websites allow for the same page to be retrieved through different URLs, but from Google’s perspective these are different pages containing identical content.

So why is this a problem?

Forcing Google to crawl multiple versions of the same page reduces crawling efficiency and dilutes authority signals across those versions, which limits the page’s potential performance.

Thankfully, there are ways to avoid the risks associated with URL duplication. First, let’s look at six common causes.

Is your site at risk? Common causes of URL duplication

1. Different ways of terminating URLs:

- /news

- /news/

- /news.html

- /news.aspx

- /news/home/

2. Using uppercase letters inconsistently:

- /news

- /News

- /NEWS

3. The same path repeated twice:

- /news/

- /news/news/

4. Reversing the order of parameters:

- /news?page=1&order=recent

- /news?order=recent&page=1

5. An additional path which is optional:

- /news/

- /news/home/

6. Duplication from tracking parameters:

Tracking parameters are useful for analytics and don’t change the content of the page, so every variation of the URL returns the same result and counts as a duplicate:

- /news?source=header

- /news?source=footer

- /news?source=rss

How do you avoid URL duplication?

The best way to avoid URL duplication is to always use a consistent URL format in internal links. Pick an internal link format and stick to it, and make sure everyone adding internal links to your website is following the same format.
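One way to keep internal links consistent is to pass every link through a single normalisation routine before it is published. The Python sketch below is illustrative only: the house rules it encodes (lowercase paths, no trailing slash, no .html or .aspx extension, sorted parameters, "source" treated as a tracking parameter) are assumptions, not a recommendation for every site, and `normalize_url` is a hypothetical helper name.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumed house rules; adjust to whichever format your site picks.
TRACKING_PARAMS = {"source"}            # parameters that never change page content
STRIP_EXTENSIONS = (".html", ".aspx")   # extensions treated as optional


def normalize_url(url: str) -> str:
    """Return the single preferred form of an internal URL."""
    parts = urlsplit(url)

    # Lowercase the path so /News and /NEWS collapse to /news.
    path = parts.path.lower()

    # Drop an optional file extension, then any trailing slash (except the root).
    for ext in STRIP_EXTENSIONS:
        if path.endswith(ext):
            path = path[: -len(ext)]
            break
    if len(path) > 1:
        path = path.rstrip("/")
    path = path or "/"

    # Remove tracking parameters and sort the rest so order no longer matters.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    )

    # The fragment is dropped because it never reaches the server.
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, urlencode(query), ""))
```

Under these assumed rules, /News/, /news.html and /news?source=rss all come out as /news, and the two parameter orderings of /news?page=1&order=recent produce the same string.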

What’s the best way to fix existing duplication issues?

If existing duplication issues have already created many duplicate URLs, updating your internal links might not be enough to solve the problem: once Google has discovered a URL, it can continue to crawl it even after the links to it have been removed.

First, resolve the issues with 301 redirects, to direct users to the right version of your content and help Google index the right URL.
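As a rough illustration of the redirect step, the Python sketch below decides whether a requested path should receive a 301 and where it should point. The `REDIRECTS` map and the `resolve` function are hypothetical; in practice this logic usually lives in your web server or CMS configuration rather than in application code.

```python
from http import HTTPStatus

# Hypothetical map of known duplicate paths to the preferred version.
REDIRECTS = {
    "/news.aspx": "/news",
    "/news.html": "/news",
    "/news/news/": "/news",
    "/news/home/": "/news",
}


def resolve(path: str):
    """Return (status, headers) for a requested path."""
    # Follow chained entries so the visitor reaches the final URL in one hop.
    # The `seen` set stops the loop if the map accidentally contains a cycle.
    target = path
    seen = set()
    while target in REDIRECTS and target not in seen:
        seen.add(target)
        target = REDIRECTS[target]

    if target != path:
        return HTTPStatus.MOVED_PERMANENTLY, {"Location": target}
    return HTTPStatus.OK, {}
```

Resolving chains up front matters because every extra hop costs an additional request for both users and crawlers.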

Then implement the following safety measures to prevent any duplicate URLs:

- Implement a canonical tag on your pages to tell Google which is the preferred URL of the page.

- Change your Webmaster Tools parameter settings to exclude any parameters that don’t generate unique content.

- Disallow incorrect URLs in robots.txt to improve crawling efficiency.
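For the canonical tag, the point is that every variant of a page outputs the same tag pointing at the preferred URL. A minimal Python sketch of a template helper follows; `SITE_ORIGIN` and `canonical_tag` are made-up names, and the example domain is a placeholder.

```python
from html import escape

SITE_ORIGIN = "https://www.example.com"  # placeholder domain, an assumption


def canonical_tag(preferred_path: str) -> str:
    """Build the <link> element to place in the <head> of every variant of a page."""
    href = SITE_ORIGIN + preferred_path
    # Escape the URL so characters such as & are valid inside the attribute.
    return f'<link rel="canonical" href="{escape(href, quote=True)}">'
```

Whether a visitor requests /news, /News or /news?source=rss, the template would call `canonical_tag("/news")` and emit an identical tag each time.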

To avoid any issues in the future, all internal links should be added in the same format. Educate everyone who has access to add internal links to your site on the risks associated with URL duplication, and ensure that everyone uses the same format.

Why implement all of these solutions at once?

Unfortunately, there is no single solution to all URL duplication issues. Each of the solutions has its own benefits and its own drawbacks, which means it’s best to implement them together to cover all potential issues.

301 redirects will avoid duplicate pages being indexed and consolidate authority signals, so are useful when resolving historical duplication issues.

However, redirecting internally-linked URLs will reduce crawling efficiency, so 301 redirects are only suitable to help clean up existing duplication issues. It’s best to update your internal links that point to redirecting URLs.
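If you already hold a list of redirecting URLs, finding the internal links that still point at them can be sketched in a few lines of Python. This is a simplified illustration: `links_to_update` is a hypothetical helper, it only looks at the href of anchor tags, and it compares links exactly as written in the HTML.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def links_to_update(html: str, redirects: dict) -> dict:
    """Map each linked URL that redirects to the URL it should be replaced with."""
    collector = LinkCollector()
    collector.feed(html)
    return {href: redirects[href] for href in collector.hrefs if href in redirects}
```

Given a page linking to /news.aspx and /contact, and a redirect list containing only /news.aspx, the result names /news.aspx as the one link to rewrite.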

Lumar (formerly Deepcrawl) provides a report on every internal redirecting URL so you can update all the links to point directly to the redirect target.

Canonical tags will consolidate authority signals to the main page and resolve performance issues, but they won’t improve crawling efficiency because Google still has to crawl every URL to find the canonical tag. If you have a large number of duplicate URLs per canonical page, it can make the site uncrawlable, even with canonical tags.

Lumar provides extensive reporting on canonical tag configuration so you can be sure it’s working as intended.

Webmaster Tools settings will effectively eliminate parameter duplication issues, but they are only available for Google and Bing so can’t be used to resolve issues with sites like Facebook or Twitter.

Disallowing duplicate URLs in robots.txt will help to increase crawling efficiency as the duplicates won’t be crawled once they are disallowed.

However, this method will not consolidate any authority signals for the duplicate pages, as the duplicate pages cannot be crawled to determine how authoritative they are.

Lumar provides reports on every URL that has been disallowed because of your robots.txt file. You can test a new version of your robots.txt file using Lumar to simulate the effect.
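For a quick local check of a draft robots.txt, Python’s standard library includes a parser. The sketch below uses made-up rules for two of the duplicate paths from earlier; note that this parser matches rules by simple path prefix, so it may not reflect every matching feature a given search engine supports.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft rules blocking two duplicate paths.
ROBOTS_TXT = """\
User-agent: *
Disallow: /news/news/
Disallow: /news/home/
"""


def is_crawlable(path: str, robots_txt: str = ROBOTS_TXT, agent: str = "Googlebot") -> bool:
    """Return True if the draft rules allow the given agent to fetch the path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)
```

Running the preferred URL and each duplicate through `is_crawlable` before deploying helps confirm that only the duplicates are blocked.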

How do you know if you have URL duplication?

Like all types of duplication, duplicate URLs can limit performance, so it’s important to identify and fix any issues.

But how do you know you’ve got an issue in the first place, and which URLs are causing problems? Google Search Console (formerly Webmaster Tools) won’t show this information and it’s impossible to check every possible URL variation by hand.

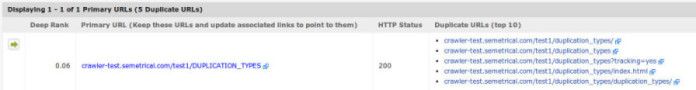

Simple: Lumar’s powerful duplicate pages report will alert you to URL duplication on your site, identify the primary URL of the page, and even show you which pages are duplicated so you can fix the issue immediately:
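Separately from any particular tool, the underlying idea of grouping URLs that return the same content can be sketched in Python for a small set of pages you have already fetched. This is a simplified illustration, not a description of how Lumar works: `find_duplicate_urls` is a hypothetical helper, and exact hashing only catches bodies that are byte-for-byte identical.

```python
import hashlib
from collections import defaultdict


def find_duplicate_urls(pages: dict) -> list:
    """Group URLs whose response bodies are identical.

    `pages` maps each URL to its HTML body (assumed to come from your own crawl).
    """
    groups = defaultdict(list)
    for url, body in pages.items():
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        groups[digest].append(url)

    # Only groups with more than one URL are duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

Pages that differ only by a timestamp or a session token would slip past this check, which is one reason dedicated crawlers use more tolerant comparisons.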