Welcome to the third part of our BrightonSEO recap for September 2019. If you’ve read the first and second parts of our recap, you’re in the right place for yet another helping.

As per usual, the DeepCrawl team was sitting in on many of the best sessions at BrightonSEO. Whether you weren’t lucky enough to attend this time around or want to find out about a talk you missed, we’ve got you covered with key takeaways, slides and recordings from some of the great sessions on show at BrightonSEO.

Luci Wood – Tips for optimising for Google Discover

Talk Summary

Luci’s corgi-filled talk took a look at the latest developments in Google Discover and provided us with actionable tips to improve our chances of being visible in this rising new vertical of search.

You can also read Luci’s post here which dives into even more detail on how to succeed in Google Discover.

Key Takeaways

What is Google Discover and where can I find it?

Google Discover is an AI-powered content discovery feed aimed at recommending more relevant content to users based on their interests and interactions on the web.

Discover is made for mobile and can be found on Google’s mobile homepage and in the Google Search app, as well as by swiping right from the home screen on Pixel phones. This relatively new form of content discovery now has 800 million monthly active users, which is four times as many as Amazon.

Google Search Console now has a dedicated report for the performance of your pages in Discover. This allows you to track the usual performance metrics for pages, such as impressions, clicks and average clickthrough rate, but not rankings. In the Discover performance report you can see:

- Total traffic received from Discover.

- Best performing content

- How frequently pages are featured in Discover.

- The split of traffic from Discover and regular search.

It is important to note that Search Console won’t show every instance of your pages being shown in Discover. The performance will only be shown when the pages gain meaningful visibility.

How are pages ranked in Discover?

Similarly to traditional search, the pages shown in Discover are ranked algorithmically based on what the user is likely to find most interesting. As such, Google’s algorithms place high importance on relevancy when deciding which pages to show in Discover.

How to optimise content for Google Discover

In order to optimise content and give it the best chance of being featured in Discover, Luci recommended:

- Shifting our mindset from keywords to people, focusing on topics and audiences.

- Learning about your audience’s preferences using YouGov profiler, Google Analytics affinity categories and internal site search.

- Creating fresh and evergreen content to maximise the reach of your content.

- Understanding your target audience’s level of expertise, as Discover’s algorithms take into account a user’s level of expertise on topics.

- Looking directly at the SERPs for target queries to see what appears for featured snippets, People Also Ask and autosuggest.

How to optimise your clickthrough rate for Google Discover

When your pages are receiving visibility, Luci had some advice for how we can optimise clickthrough rates in Discover:

A page with a good clickthrough rate will likely have:

- A concise headline

- Clearly outlined article content

- Eye-catching images

- Content that is closely aligned with user interests

On the other hand, a page with a bad clickthrough rate will likely feature:

- A long and wordy headline

- Alienating content

- Fake news, sensational content or clickbait

The visual side of Discover

Turning to the visual side of Google Discover, it is important to use images which have a minimum width of 1,200 pixels. This is because Discover utilises large images and not thumbnails, like in traditional search.

Google has found that large images can increase clickthrough rates by 5%, so using larger images could be an easy win. It’s important to note that you’ll need either to be using AMP or to give Google permission to use high-resolution images from your site.
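As a rough illustration of the 1,200 pixel minimum width mentioned above, the sketch below reads the width of a PNG image directly from its header and compares it with that threshold. It only handles PNG files, and the function names and constant are hypothetical names chosen for this example rather than anything from the talk.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MIN_DISCOVER_WIDTH = 1200  # pixels, the minimum width quoted in the talk


def png_width(data: bytes) -> int:
    """Return the pixel width stored in the IHDR chunk of a PNG file."""
    # A PNG starts with an 8-byte signature, then the IHDR chunk:
    # 4-byte length, 4-byte type ("IHDR"), 4-byte width, 4-byte height.
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    (width,) = struct.unpack(">I", data[16:20])
    return width


def wide_enough(data: bytes) -> bool:
    """True if the PNG meets the minimum width assumed for Discover."""
    return png_width(data) >= MIN_DISCOVER_WIDTH
```

In practice you would pass in the first few dozen bytes of each image file, for example `wide_enough(open(path, "rb").read(32))`, and list any images that fall short.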

Technical requirements for Google Discover

In order for pages on your site to be featured in Google Discover, you’ll need to make sure pages are mobile-friendly, indexable and comply with Google News policies.

There isn’t any structured markup that you need to apply to pages in order for them to appear in Discover. It also isn’t required to have AMP pages to feature in Discover but this can help because of the fast experiences they can provide. AMP Stories are a relatively new addition that you might want to consider experimenting with to gain visibility in Discover.

Catherine ‘Pear’ Goulbourne – How to find content gaps when you don’t speak the language

Talk Summary

Catherine’s practical and enlightening talk showed us how to find content gaps and SEO content opportunities for markets you/your brand want to expand into when you don’t speak the language.

Key Takeaways

At Oban International, Catherine has been required to help businesses expand into different international markets. However, this isn’t a straightforward task, especially when you don’t speak the language of the market you’re trying to help a client enter into. Catherine took us through her process for how to find content gaps in new markets when you don’t speak the language.

Knowing the market you want to move into

Catherine started by driving home the point that if your business is looking to move into a different market, it’s crucial that you know that market first. This means understanding which search engines people are using in this new market.

Google won’t always be the dominant search engine in your target market, so you need to understand the split and how content is ranked by the preferred search engines. For example, Naver is popular in South Korea and has different ranking factors compared to Google, so your approach to SEO for this market is likely to be different.

Know who you’re up against

Once you’ve understood the search engine landscape of the target market, you need to find out who the competition is. This isn’t going to be the same for each country. Take Nike, for example: its competitors are different in the US compared to Japan.

On top of this, you need to make the distinction between:

- Perceived competitors – Companies who you are competing with for business.

- SEO competitors – Websites that you are competing with in the SERPs.

There may be an overlap between these two different types of competitors but these instances are the exception rather than the rule.

You can find your SEO competitors by using a tool like SEMrush, which shows you the websites with which you share the most keywords, along with a competition level metric. However, make sure you select the right country when looking at your SEO competitors because they will likely change from market to market.

How to find content gaps in new markets

The first step to finding the content gaps in new markets is to assess your existing content inventory so you know what you already have. This is a very important step that shouldn’t be overlooked so you can ensure you’re not duplicating your efforts and cannibalising your old work.

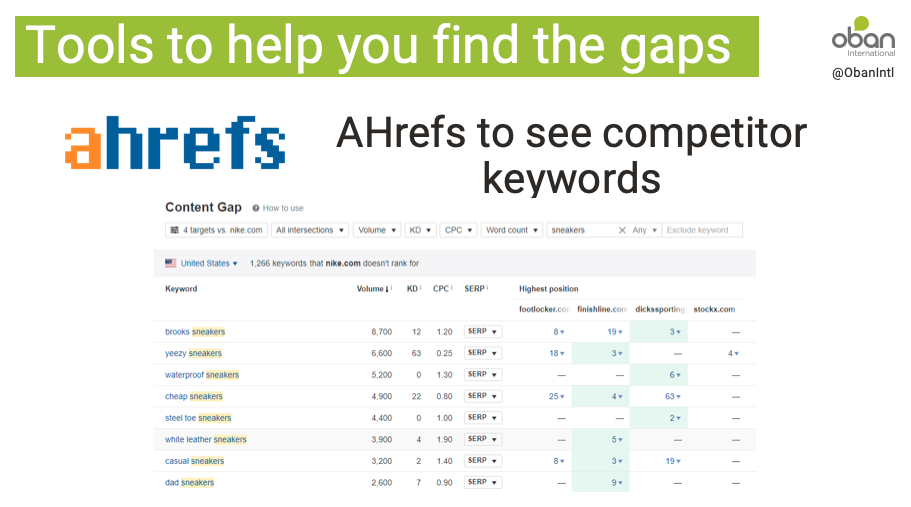

Again, tools like SEMrush can help you find content gaps by identifying competitor keyword equity. Catherine recommends limiting your analysis to the four most important competitors. Ahrefs is also useful for finding content gaps, particularly in identifying keywords that your competitors are ranking for that you aren’t.

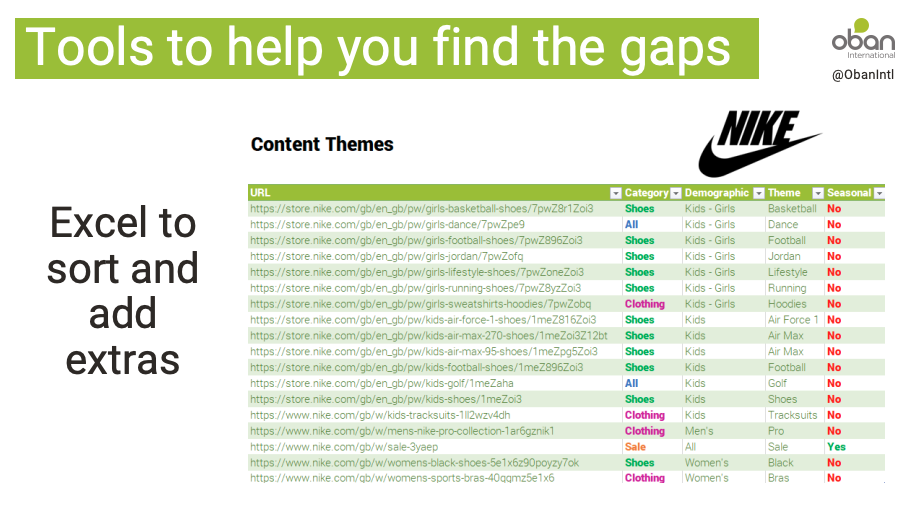
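The logic behind these tools can be sketched with plain sets: SEO competitors are the sites sharing the most keywords with you, and the content gap is the set of keywords a competitor ranks for that you don’t. The example below is a minimal illustration that assumes you have already exported keyword lists per domain for the target country. The function names and the data shape are hypothetical, and the default limit of four follows Catherine’s recommendation.

```python
from typing import Dict, List, Set, Tuple


def rank_seo_competitors(
    own: Set[str], competitors: Dict[str, Set[str]], limit: int = 4
) -> List[Tuple[str, int]]:
    """Order competitor domains by how many keywords they share with us."""
    overlaps = [(domain, len(own & kws)) for domain, kws in competitors.items()]
    # Largest overlap first; ties are broken alphabetically for stable output.
    overlaps.sort(key=lambda pair: (-pair[1], pair[0]))
    return overlaps[:limit]


def content_gaps(
    own: Set[str], competitors: Dict[str, Set[str]]
) -> Dict[str, Set[str]]:
    """For each competitor, the keywords they rank for that we do not."""
    return {domain: kws - own for domain, kws in competitors.items()}
```

Keyword exports would need to be pulled separately for each market, since both the competitors and the keywords change from country to country.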

You can also identify gaps by crawling competitors’ websites, exporting a list of pages, removing unnecessary columns and then adding your own columns to add value. This can help you identify different content themes and where competitors’ strengths and weaknesses lie.
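One simple column you might add to a crawl export is the first folder of each URL, which often works as a stand-in for a content theme even when you can’t read the page itself. The sketch below counts pages per top-level folder. Treating the first path segment as the theme is an assumption made for illustration, and the function names are hypothetical.

```python
from collections import Counter
from typing import Iterable
from urllib.parse import urlsplit


def first_segment(url: str) -> str:
    """Return the first folder of a URL path, or "(root)" for the homepage."""
    parts = [p for p in urlsplit(url).path.split("/") if p]
    return parts[0] if parts else "(root)"


def theme_counts(urls: Iterable[str]) -> Counter:
    """Count crawled pages per top-level folder."""
    return Counter(first_segment(u) for u in urls)
```

Calling `theme_counts(urls).most_common()` on a competitor’s crawl gives a quick view of where most of their content sits.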

Once you’ve done your market research, competitor research and audited your existing content you should have enough information to bring this into a content gap analysis presentation where you can share your findings.

A content gap analysis should include the following sections:

- Overview

- SEO competitor section

- Perceived competitor section

- Other recommendations

- Next steps

Taking it further

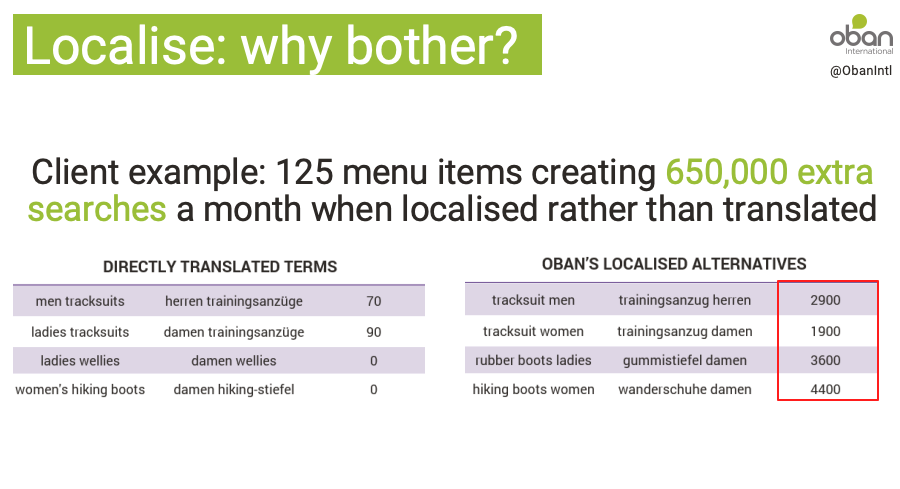

You can take this work one step further by seeking the help of local in-market experts (LIMEs) who are native speakers and understand the culture inside out. This can really benefit the relevance of your content as it will enable you to implement the use of localised keywords and benefit from increased visibility.

Catherine has seen long term benefits from organic search by implementing localised menu items rather than directly translated ones. This resulted in increased search visibility in the German market which continued over time.

Steph Whatley – How to ‘SEO’ forums, communities and UGC

Talk Summary

Steph walked us through the challenges of working with user-generated content and how this can impact SEO efforts. Steph’s talk was filled with actionable takeaways covering crawling & indexing, EAT & duplicate content.

Key Takeaways

Forums aren’t covered enough and are underrated as they can give you increased SERP visibility, more traffic and ultimately more money, especially for long-tail terms. However, it is crucially important to remember a forum (and any enterprise-level site) should use consistent and logical URL paths otherwise your life is going to be hell when it comes to managing them.

Steph has been working on some large forums (1 million indexed pages) in the lifestyle and health sectors. Unfortunately, these sites were both hit by two core algorithm updates – the Medic and YMYL updates.

What are the areas that forums can fall down on?

Steph found that there were three areas that resulted in these forums taking a drop in organic performance after the two updates:

- Duplication

- Crawling

- Trust

Duplication issues

Duplication was an issue on these forums because it’s hard to maintain logical URL structures when they are being generated by users. This is where it became necessary to bring in rules to help guide users when they were posting on the forum.

For example, Steph and the team brought in rules to make sure there was a minimum title length for each post. Existing threads can be managed by auto-populating their titles with body content to make them more contextual and descriptive.
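Both rules can be sketched in a few lines: a check applied when a user submits a new post, and a backfill for existing threads whose titles are too short. The length limits and function names below are hypothetical values chosen for illustration, not the ones Steph’s team used.

```python
MIN_TITLE_LENGTH = 15  # hypothetical minimum, in characters
MAX_TITLE_LENGTH = 70  # hypothetical cap for auto-populated titles


def title_is_acceptable(title: str) -> bool:
    """Rule for new posts: reject titles shorter than the minimum."""
    return len(title.strip()) >= MIN_TITLE_LENGTH


def backfill_title(title: str, body: str) -> str:
    """Rule for existing threads: extend a short title with body text."""
    title = " ".join(title.split())  # collapse stray whitespace
    if len(title) >= MIN_TITLE_LENGTH:
        return title
    snippet = " ".join(body.split())
    if not snippet:
        return title
    combined = f"{title}: {snippet}" if title else snippet
    if len(combined) <= MAX_TITLE_LENGTH:
        return combined
    # Cut at the last whole word that fits within the cap.
    return combined[:MAX_TITLE_LENGTH].rsplit(" ", 1)[0]
```

A thread titled "Help" would then pick up the opening words of its first post, which gives both users and search engines more context.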

UX & crawling

Forums can be hard to navigate, and it can be hard to find things on them. Strong information architecture is needed, with clear section names that encourage users to help maintain a good structure on the forum.

Forums can also be difficult for search engine bots to crawl as well as for visitors. On a good day, around half of the pages on the forum Steph manages are crawled and on a bad day, only around 8% of the pages are crawled. This is a problem for enterprise sites in general.

Steph recommends conducting log file analysis using two weeks’ worth of data to find common sections and themes among the pages that are being crawled. You can find out more about how to tackle crawling issues on large forums in Steph’s post here.
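A minimal version of this kind of log file analysis is sketched below. It assumes access logs in the common "combined" format, identifies Googlebot by its user-agent string alone (a production analysis would also verify the requesting IP address, since the user agent can be spoofed), and treats the first folder of the path as the forum section. The function name is hypothetical.

```python
import re
from collections import Counter
from typing import Iterable

# Matches the request, status, size, referrer and user agent of a
# combined-format access log line.
LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits_by_section(lines: Iterable[str]) -> Counter:
    """Count requests with a Googlebot user agent per top-level folder."""
    hits = Counter()
    for line in lines:
        match = LINE.search(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue
        path = match.group("path").split("?", 1)[0]  # ignore query strings
        parts = [p for p in path.split("/") if p]
        hits[parts[0] if parts else "(root)"] += 1
    return hits
```

Running this over two weeks of logs and comparing the counts with the number of pages in each section shows which parts of the forum are crawled often and which are being neglected.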

Sitemap analysis can also help to get preferred pages crawled more regularly by search engine bots. You can split large sitemaps up into smaller individual sitemaps for different sections. This will enable you to identify sitemap issues more easily and debug them faster.
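Splitting a sitemap by section could look something like the sketch below, which groups URLs by their first folder and writes one XML sitemap per group. The file naming scheme and function name are hypothetical. The 50,000 URL cap per file comes from the sitemaps.org protocol, and larger sections are split into numbered files.

```python
from collections import defaultdict
from typing import Dict, Iterable
from urllib.parse import urlsplit
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000  # limit set by the sitemaps.org protocol


def build_section_sitemaps(
    urls: Iterable[str], max_urls: int = MAX_URLS_PER_SITEMAP
) -> Dict[str, str]:
    """Return a mapping of sitemap file name to XML content, one per section."""
    by_section = defaultdict(list)
    for url in urls:
        parts = [p for p in urlsplit(url).path.split("/") if p]
        by_section[parts[0] if parts else "root"].append(url)

    files = {}
    for section, section_urls in sorted(by_section.items()):
        for start in range(0, len(section_urls), max_urls):
            chunk = section_urls[start:start + max_urls]
            name = f"sitemap-{section}-{start // max_urls + 1}.xml"
            entries = "".join(
                f"  <url><loc>{escape(u)}</loc></url>\n" for u in chunk
            )
            files[name] = (
                '<?xml version="1.0" encoding="UTF-8"?>\n'
                '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
                f"{entries}</urlset>\n"
            )
    return files
```

With one file per section, indexing and error reports can be read section by section, which makes a problem area much easier to spot.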

Content cruft

It’s important to deal with content cruft on a continual basis. You can do this by setting benchmarks for content freshness around metrics that encompass engagement, spam-worthiness, relevance and search volume. However, make sure not to delete any pages that have valuable backlinks.
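A benchmark like this can be expressed as a small rule set. In the sketch below, the fields, thresholds and labels are all hypothetical stand-ins for the engagement and freshness metrics mentioned above. The one rule taken directly from the talk is that a page with backlinks is never flagged for removal.

```python
from dataclasses import dataclass


@dataclass
class Thread:
    url: str
    monthly_visits: int        # stand-in for search demand
    replies: int               # stand-in for engagement
    days_since_last_post: int  # stand-in for freshness
    backlinks: int


def classify(
    thread: Thread,
    min_visits: int = 10,
    min_replies: int = 1,
    max_age_days: int = 730,
) -> str:
    """Label a thread as 'keep', 'keep_for_backlinks' or 'review_for_removal'."""
    is_cruft = (
        thread.monthly_visits < min_visits
        and thread.replies < min_replies
        and thread.days_since_last_post > max_age_days
    )
    if not is_cruft:
        return "keep"
    # Pages with valuable backlinks are kept even if they look stale.
    if thread.backlinks > 0:
        return "keep_for_backlinks"
    return "review_for_removal"
```

Running a check like this on a schedule, rather than as a one-off clean-up, matches the advice to deal with cruft on a continual basis.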

Internal linking

Steph has found that forums tend to over-paginate by creating a new page after every few posts in a thread. There’s no need to paginate this heavily, so consider increasing this to 20-30 posts per page. Internal linking can also be improved by adding modules such as related posts and top trending.
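The effect of the page size on the number of URLs is easy to quantify. As a worked example, assuming "every few posts" means 5 posts per page (the source doesn’t give an exact figure), a 300-post thread produces 60 paginated URLs, while 25 posts per page brings that down to 12. The function name is hypothetical.

```python
import math


def page_count(posts: int, posts_per_page: int) -> int:
    """Number of paginated URLs needed to show every post in a thread."""
    if posts_per_page < 1:
        raise ValueError("posts_per_page must be at least 1")
    # Even an empty thread still has one page.
    return max(1, math.ceil(posts / posts_per_page))


thread_posts = 300
pages_before = page_count(thread_posts, 5)   # heavy pagination
pages_after = page_count(thread_posts, 25)   # within the suggested 20-30
```

Across a forum with a million pages, that reduction means far fewer thin, near-identical URLs for bots to crawl.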

HTML sitemaps linked from the footer can also be beneficial in uncovering deeper areas of the website for crawlers and visitors alike. Digital Spy does a great job with their HTML sitemap with a clear hierarchy.

Trust issues

Trust is important for our relationship with Google as low-quality content on some parts of your site can lower the rankings across the site as a whole. Poor quality = poor trust = poor rankings.

Three of the most important parts of how Google measures trust are likely:

- Link graph – Who we’re related to and how on the web

- Content quality – Is it high quality?

- Site hygiene – Is the site being maintained properly or is Google running into lots of technical issues and mixed signals when crawling.

A great way of maintaining quality on a forum with lots of UGC is to seek the help of moderators who:

- Act as the gatekeepers of quality and standards

- Keep posts relevant and on topic.

- Close duplicate threads.

- Ban trolls and spammers.

You can also make use of your most expert contributors on a forum by:

- Encouraging participation through gamification of contributing. Moz do this really well with the removal of the nofollow link from your profile once you achieve a level of status in the forum.

- Giving them profiles that link through to other places on the internet where they appear in a credible way, which can help to demonstrate their expertise.

- Making them moderators, as they’re likely the best people to judge quality.

- Ringfencing areas of a forum that can only be contributed to by experts on your forum.

Aleyda Solis – What makes your SEO fail… and how to fix it.

Talk Summary

In this session, Aleyda took us through the main causes of SEO process failure – from a lack of organic search growth, mismatched expectations, delayed technical implementation and non-existent content support to communication challenges. Aleyda’s honest talk covered the main scenarios that challenge the successful execution of the SEO process and provided actionable tips to fix them, or avoid them.

Key Takeaways

When Aleyda started working in SEO at an agency she felt they had too many clients, so it was hard to make enough of an impact. Following that, Aleyda worked in-house where there was minimal development resource, and then in another in-house role where the resource was there but there was no alignment on how the business should grow. Then, working at another agency, Aleyda was able to work with fewer clients, but these were big organisations with a very slow execution pace.

Achieving SEO success is complex because of its nature:

- It can be complex to understand.

- It is based on long-term results.

- It needs a flexible approach.

- It depends heavily on external factors.

- It needs multidisciplinary and cross-departmental support.

With all of these experiences behind her, Aleyda surveyed the community to find out why SEO processes fail. From 534 responses, these were the results:

The top two responses were about the availability of technical and content resources. In fact, when Aleyda started classifying the results the top three were all about budget. Looking then to the top five areas where processes go wrong, they were all about SEO execution and not analysis.

When all of the responses are classified, they break down into the following issues:

- Budget

- Client management and communications

- External

- Restrictions

- SEO

From the survey, Aleyda concluded that if we are able to avoid the following top issues we’ll minimise the chance of SEO failure:

Aleyda then went on to share her insights on how we can avoid and overcome these issues to maximise the chance of success.

Validating there’s a real fit

We need to make sure that there is a real fit before we start working with or for a company. The lack of resources and restriction issues start when the SEO process is qualified and sold.

It is our duty to make sure the SEO expectations and goals are aligned with the business by looking at four fundamental criteria of resources, flexibility, goals and context.

You can do this by following a solutions sales process where SEOs and decision-makers are involved.

We can’t bank on ideal scenarios but we can choose “better clients”. Position yourself so you attract the right type of clients from the start where your work is likely to get results.

Facilitating execution

As SEOs, we need to facilitate the execution of our work with actionable, prioritised, incremental and validated recommendations.

Avoid recommendations with these issues:

Move towards providing recommendations which are:

SEO audits shouldn’t just be a checklist validating isolated optimisation issues, but should include analysis that takes into account context and strategy to drive the SEO process. Define issues by importance, difficulty and resources, showing how to fix each meaningful issue. Make recommendations highly actionable, with screenshots, so that the solution is hard to misunderstand and easy to implement.

You also need to establish an implementation priority based on impact and difficulty. Aleyda advised providing recommendations iteratively and not all at once so you don’t overwhelm the client.
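One possible way to turn impact and difficulty into an implementation order is sketched below. The 1 to 5 scoring scale, the choice to sort by highest impact first and then lowest difficulty, the batch size and the names are all assumptions made for illustration, not a method Aleyda prescribed.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Recommendation:
    name: str
    impact: int      # 1 (low) to 5 (high), hypothetical scale
    difficulty: int  # 1 (easy) to 5 (hard), hypothetical scale


def prioritise(recs: List[Recommendation]) -> List[Recommendation]:
    """Highest impact first; among equals, the easiest fix first."""
    return sorted(recs, key=lambda r: (-r.impact, r.difficulty, r.name))


def iterations(
    recs: List[Recommendation], size: int = 3
) -> List[List[Recommendation]]:
    """Split the prioritised list into small batches to deliver one at a time."""
    ordered = prioritise(recs)
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]
```

Delivering one batch at a time keeps each round of recommendations small enough to be implemented and validated before the next one arrives.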

You can set up scheduled crawls in DeepCrawl to continuously validate the optimisation status after each release.

Minimising restrictions

Aleyda then went on to cover how restrictions can be minimised with fluid and agile communication with clients and stakeholders.

As a technical SEO, the most you can do is to influence priorities. We need to be able to communicate SEO value and win support by showing how we can help them. It can also help if you have an internal SEO champion in your client’s organisation among the decision-makers.

Aleyda recommends personalising reports based on different stakeholders and what they’re responsible for.

You can use tools like Crystal to understand how different stakeholders prefer to be communicated to.

For experienced SEO specialists, SEO is an execution problem, not a knowledge problem. That is why Aleyda started her own SEO consultancy, so she could have more control over who she works with and how she works with them. You can read more about how to be successful in SEO consulting in her post here.

More BrightonSEO recaps this way

A massive thanks again to Kelvin, the Rough Agenda team and to everyone involved in making BrightonSEO such a roaring success. If you haven’t had enough of the talks, you can find more of the key takeaways in the first and second parts of our recap here.