At Chrome Dev Summit 2018, Googlers took to the stage to share best practice advice for developers on how to make the web better. One of the talks that caught our eye was called ‘Making Modern Web Content Discoverable for Search’ and was given by Google Developer Advocates Martin Splitt and Tom Greenaway. The talk primarily focused on how to make JavaScript-powered websites findable in search.

It was a fascinating session, but don’t worry if you missed it because we took notes for you. You can watch the full talk recording here, or you can continue reading to find out the key insights that were shared by Martin and Tom.

Key takeaways

- Skip Google’s rendering queue by using dynamic rendering.

- Detect user agents for social media crawlers and dynamically render content for them.

- Google is working on having its rendering service run alongside Chrome’s release schedule, so the renderer will always use the most up-to-date version of Chrome.

- The Rich Results Test is launching a live code-editing feature for testing changes in the browser.

- Google Search Console has launched share link functionality for issue reports.

- Use Babel and Polyfill.io as workarounds for the limitations of the current Chrome 41 renderer.

A closer look at Google’s indexing process

The purpose of Martin and Tom’s talk was to explain:

“The best practices for ensuring a modern JavaScript-powered website is indexable by Search.”

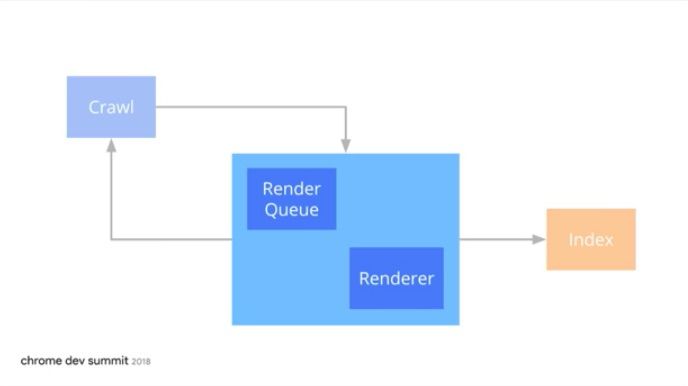

In order to get a better understanding of how to do so, we need to focus on the ‘Process’ step that sits in between ‘Crawl’ and ‘Index’ in Google’s indexing pipeline. In Martin’s words, “This is where the magic happens.”

The middle ‘Process’ step is actually split into the ‘Render Queue’ and the ‘Renderer’. As explained at Google I/O 2018, pages that require JavaScript rendering in order to extract their HTML content are queued up and rendering is deferred until Google has enough resources.

How long will pages stay in the render queue?

The time from when a page is added to Google’s render queue until the rendered page is indexed can vary. Martin and Tom explained that it could be minutes, an hour, a day or up to a week before the render is completed and the page is indexed.

They didn’t say weeks (plural) though, so it seems that the delay won’t be as long as SEOs may have initially feared!

How can we help Google render content faster?

For now, the indexing delay for JavaScript-powered websites is unavoidable, although Google is working on it, as we explain later in this post. In the meantime, there is a way to get around the wait: dynamic rendering.

How dynamic rendering actually works

Dynamic rendering works by:

“Switching between client-side rendered and pre-rendered content for specific user agents.”

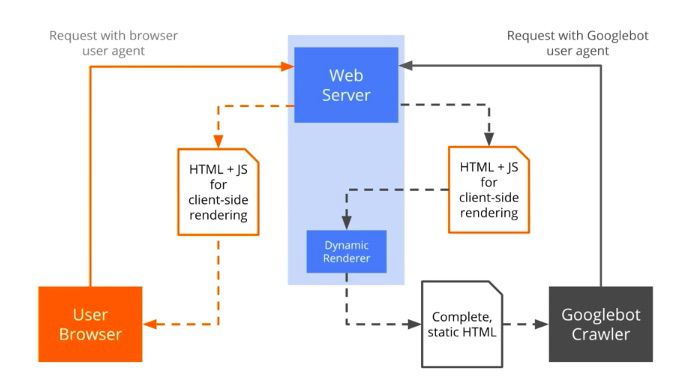

Essentially, this method lets websites skip Google's render queue, because Googlebot is shown a server-side rendered version of your usually client-side rendered website.

With dynamic rendering, when a browser user agent is detected, the HTML and JavaScript are sent straight to the browser for client-side rendering as normal. However, when the Googlebot user agent is detected, the HTML and JavaScript are sent through a dynamic rendering service first, before Googlebot receives anything.

The key to this method's success is a mini client-side renderer, such as headless Chrome controlled by Puppeteer, or Rendertron. The dynamic renderer acts as the middleman between the web server and Googlebot's crawler: it renders the page itself, so by the time Googlebot gets the page it simply sees complete, static HTML and can index it right away.

The dynamic renderer could either be an external service or could be running on the same web server.
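
The routing decision described above can be sketched in a few lines of TypeScript. This is a minimal, hypothetical illustration rather than code from the talk: `chooseRenderPath` and the `BOT_PATTERNS` list are names we made up, and a real server would hand the "dynamic-renderer" case to whichever renderer you run.

```typescript
// Hypothetical, illustrative list of user agents that should receive
// pre-rendered HTML. Extend it for any other crawler you need to support.
const BOT_PATTERNS: RegExp[] = [/googlebot/i];

type RenderPath = "client-side" | "dynamic-renderer";

// Decide how a request should be served, based only on its User-Agent header.
function chooseRenderPath(userAgent: string | undefined): RenderPath {
  if (!userAgent) {
    // No user agent: fall back to the normal client-side app.
    return "client-side";
  }
  const isBot = BOT_PATTERNS.some((pattern) => pattern.test(userAgent));
  // Bots get HTML produced by the dynamic renderer; browsers get the usual
  // HTML and JavaScript and render it themselves.
  return isBot ? "dynamic-renderer" : "client-side";
}
```

Whichever path a request takes, the content itself should stay equivalent for bots and users: dynamic rendering changes how the page is rendered, not what it says.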

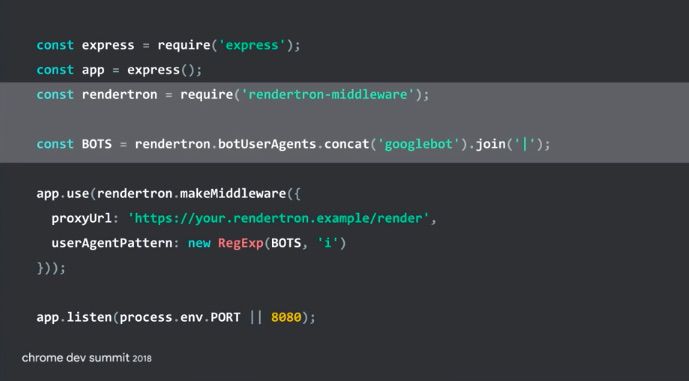

In the example shown in the talk, Rendertron pre-renders content whenever the 'googlebot' user agent is detected.
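
To make the Rendertron step more concrete, here is a hedged sketch of how a server might build the request it forwards on a bot's behalf. It assumes a self-hosted Rendertron instance at the placeholder address `https://rendertron.example.com` and uses Rendertron's `/render/<url>` endpoint, which returns the rendered HTML; the helper name `rendertronUrl` is our own. Rendertron also provides Express middleware (`rendertron-middleware`) that wires this up for you.

```typescript
// Placeholder address of a self-hosted Rendertron instance (assumption).
const RENDERTRON_BASE = "https://rendertron.example.com";

// Build the Rendertron URL for a page. The page URL is percent-encoded so that
// its own slashes, "?" and "=" characters travel as a single path segment.
function rendertronUrl(base: string, pageUrl: string): string {
  const trimmedBase = base.replace(/\/+$/, ""); // drop any trailing slashes
  return `${trimmedBase}/render/${encodeURIComponent(pageUrl)}`;
}

// Example: the URL a server would fetch when Googlebot requests this product page.
const exampleRenderRequest = rendertronUrl(
  RENDERTRON_BASE,
  "https://shop.example.com/item?id=7"
);
```

The server would fetch `exampleRenderRequest` and return the response body to Googlebot, while regular browsers keep receiving the client-side app.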

Rendering considerations for social media crawlers

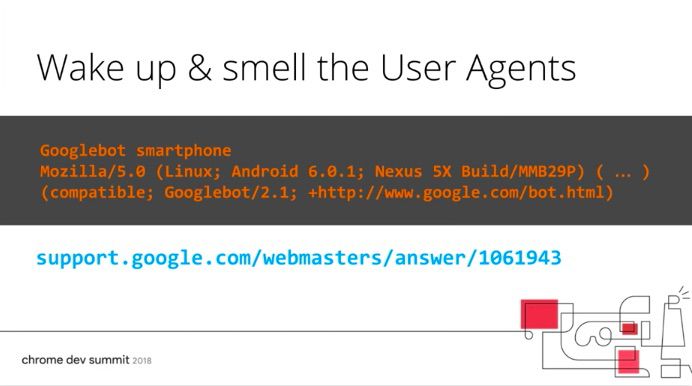

Google is getting better at client-side rendering, so dynamic rendering is an added bonus that helps Googlebot index content faster, rather than an essential way to get content indexed at all. Social media crawlers, on the other hand, can't render JavaScript, so, as Martin explained in a recent Google Webmaster Hangout, dynamic rendering is needed for any client-side rendered content you want them to see. Use a dynamic renderer to 'sniff out' the user agents that need some rendering help so they can actually see and index your website.
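
Extending the user-agent check to social media crawlers might look like the sketch below. The user-agent tokens are illustrative examples we picked (`facebookexternalhit` for Facebook, `Twitterbot`, `LinkedInBot`); check each platform's documentation for the current strings before relying on them. `detectCrawler` is a hypothetical helper, and matching is a simple case-insensitive substring test.

```typescript
// Illustrative [lowercase user-agent token, platform] pairs (assumption,
// not an exhaustive or authoritative list).
const CRAWLER_TOKENS: Array<[string, string]> = [
  ["googlebot", "Google"],
  ["facebookexternalhit", "Facebook"],
  ["twitterbot", "Twitter"],
  ["linkedinbot", "LinkedIn"],
];

// Return the platform whose crawler sent the request, or null for other agents
// (which then get the normal client-side rendered page).
function detectCrawler(userAgent: string): string | null {
  const ua = userAgent.toLowerCase();
  for (const [token, platform] of CRAWLER_TOKENS) {
    if (ua.indexOf(token) !== -1) {
      return platform;
    }
  }
  return null;
}
```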

Another way to check which search engines and social media crawlers are accessing your content is to do a reverse DNS lookup to see which server the request is coming from, whether it's Google's server, Twitter's server and so on. This is more reliable than the user agent alone, which is easy to spoof.
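
Google documents a two-step check for Googlebot: a reverse DNS lookup on the requesting IP must return a hostname under `googlebot.com` or `google.com`, and a forward DNS lookup of that hostname must return the same IP. The sketch below encodes that logic as a pure function; `isVerifiedCrawler` and its parameters are our own naming. In Node.js the inputs would come from `dns.promises.reverse(ip)` and `dns.promises.resolve4(hostname)`; here they are passed in so the logic can be tested without network access. Suffixes for other crawlers, such as Twitter's, are left out because they depend on each platform's own documentation.

```typescript
// Hostname suffixes Google documents for Googlebot.
const GOOGLE_SUFFIXES: string[] = [".googlebot.com", ".google.com"];

function endsWith(s: string, suffix: string): boolean {
  return s.length >= suffix.length && s.slice(s.length - suffix.length) === suffix;
}

// Step 1: a reverse (PTR) hostname for the IP must sit under an allowed domain.
// Step 2: a forward lookup of that hostname must point back to the same IP,
// which stops someone from simply naming their own server "googlebot.com".
function isVerifiedCrawler(
  ip: string,
  reverseHostnames: string[],
  forwardAddressesFor: (hostname: string) => string[],
  allowedSuffixes: string[]
): boolean {
  for (const raw of reverseHostnames) {
    const host = raw.toLowerCase().replace(/\.$/, ""); // drop a trailing root dot
    const allowed = allowedSuffixes.some((suffix) => endsWith(host, suffix));
    if (allowed && forwardAddressesFor(host).indexOf(ip) !== -1) {
      return true;
    }
  }
  return false;
}
```

The example values in Google's own documentation pair `66.249.66.1` with `crawl-66-249-66-1.googlebot.com`, which is the kind of result this check expects for a genuine Googlebot request.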

Tools for analysing what Google can and can't render

The main Google-recommended tools for analysing and troubleshooting any rendering issues on your site are:

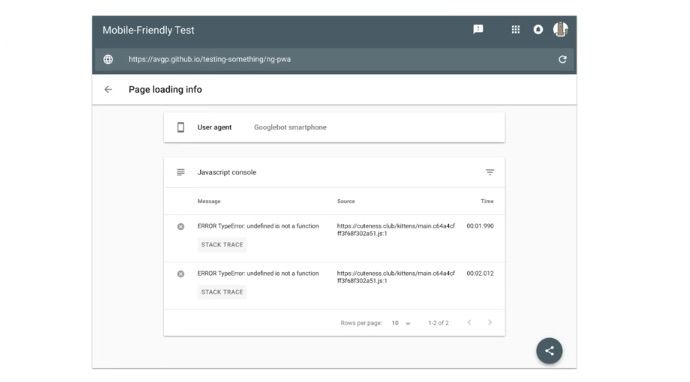

1. The Mobile-friendly Test: This shows messages about blocked JavaScript when Googlebot doesn't render the page the way you would expect. You can really dive into the details and debug JavaScript errors here.

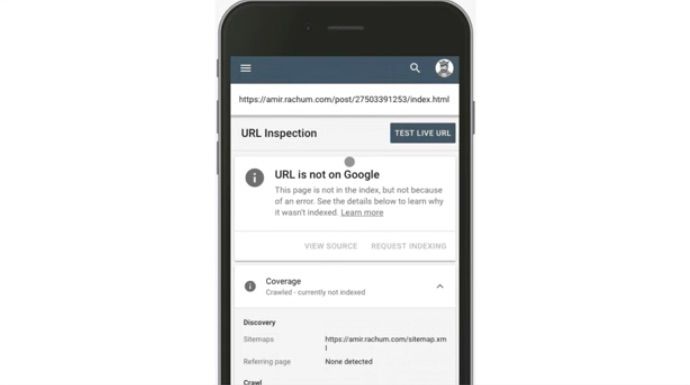

2. The URL Inspection Tool: Found in Google Search Console, this tool shows what is blocking Googlebot from indexing a particular page and the reasons behind that.

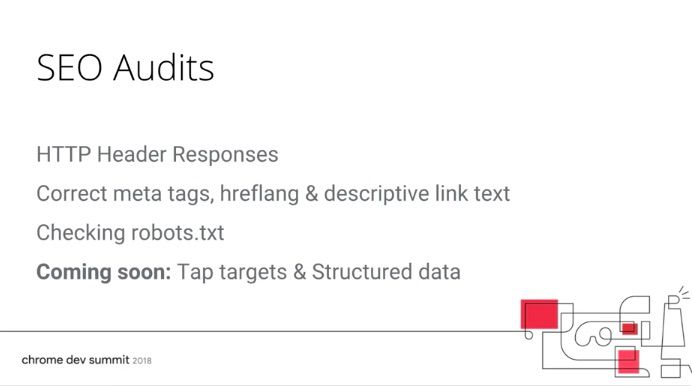

3. Lighthouse: There are existing SEO reports within Lighthouse which show whether or not elements like meta tags and hreflang could be found on a page, and more reports are on the way.
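
A rough way to spot JavaScript-dependent SEO signals yourself is to compare the raw HTML your server sends with the rendered HTML (for example, copied from one of the testing tools above). The sketch below does this with deliberately simple regular expressions, an assumption made for brevity; a real audit such as Lighthouse inspects the DOM properly. `findSeoSignals` and `signalsAddedByRendering` are hypothetical helpers.

```typescript
// Signals similar to those Lighthouse's SEO audits look for.
interface SeoSignals {
  title: boolean;
  metaDescription: boolean;
  hreflang: boolean;
}

const SIGNAL_KEYS: Array<keyof SeoSignals> = ["title", "metaDescription", "hreflang"];

// Simplistic regex checks; good enough for a quick comparison, not a parser.
function findSeoSignals(html: string): SeoSignals {
  return {
    title: /<title>[^<]+<\/title>/i.test(html),
    metaDescription: /<meta\s+[^>]*name=["']description["'][^>]*>/i.test(html),
    hreflang: /<link\s+[^>]*hreflang=["'][^"']+["'][^>]*>/i.test(html),
  };
}

// List signals that only appear after rendering, i.e. ones that depend on
// JavaScript and are therefore invisible to crawlers that don't render it.
function signalsAddedByRendering(rawHtml: string, renderedHtml: string): string[] {
  const before = findSeoSignals(rawHtml);
  const after = findSeoSignals(renderedHtml);
  const added: string[] = [];
  for (const key of SIGNAL_KEYS) {
    if (after[key] && !before[key]) {
      added.push(key);
    }
  }
  return added;
}
```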

New Google Search Console feature announcement

When you see warning light emojis, you know something important is about to be shared.

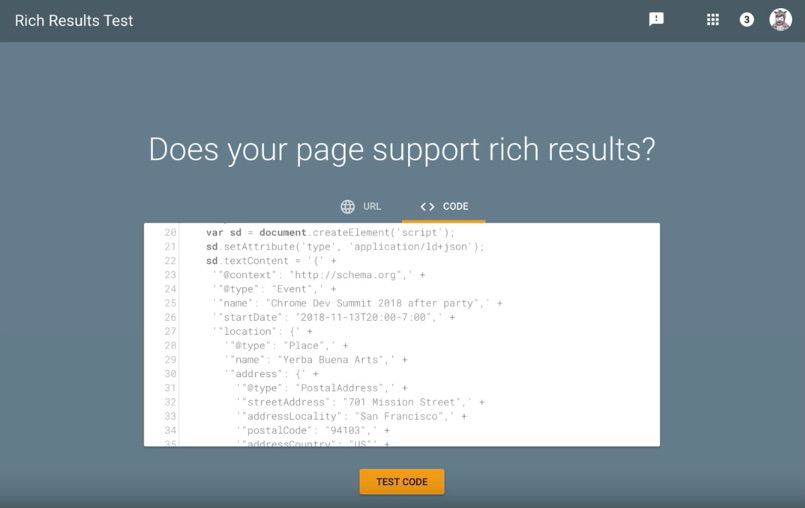

Martin announced that Google Search Console’s Rich Results Test will now support live code-editing. This means you’ll be able to write and test code live in the browser.

Another new Google Search Console feature that was announced is the use of share links. You’ll now be able to share issue reports directly with developers, so they can see exactly what the problem is and fix it without needing access to the company’s Google Search Console account.

After the developer has fixed the issue in question, you’ll now be able to press a new ‘Validate Fix’ button to make sure the developer’s changes have worked correctly. Google hopes that this update will help streamline and speed up workflows across departments, especially between SEOs and developers.

Best practice advice for serving JavaScript-powered content

Here’s some advice on the techniques, knowledge, tools, approaches, and processes you need when tackling JavaScript-powered websites, as explained by Martin and Tom:

- Use Babel to transpile ES6 (ES2015) code down to ES5 so Googlebot can render it. Google uses Chrome 41, released in 2015, for rendering, so it doesn't support some of the features modern browsers have added since then. It supports ECMAScript 5 but not all of ES6.

- Use Polyfill.io to serve content that relies on cookies, service workers or session storage to Googlebot. Googlebot's Chrome 41 renderer is stateless, meaning it doesn't keep memory between page loads, isn't able to use cookies or service workers and can't use local or session storage. Polyfill.io sniffs out the user agent requesting a page and serves only the polyfills that browser needs, so an older browser like Googlebot's Chrome 41 gets the extra code required to behave like a more up-to-date browser. It aims to send just the right amount of code to make the page work.

- Use dynamic rendering for rapidly changing sites, sites with modern features and sites with a strong social media presence. Because these types of websites run into rendering roadblocks, dynamic rendering is a workaround until Googlebot catches up and can render your JavaScript-powered content and any modern implementations that may be beyond Chrome 41's capabilities.
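
Babel and polyfills solve different problems, which the sketch below tries to make visible. Babel rewrites syntax: `greetModern` uses ES2015 features, and `greetES5` is a hand-written approximation of the kind of ES5 a transpiler emits (Babel's actual output differs in detail). With `@babel/preset-env`, setting `targets: { chrome: "41" }` asks Babel to transform whatever Chrome 41 lacks. Missing built-in APIs, by contrast, can't be transpiled away and need polyfills, so the second half checks which features a global object lacks. The feature list and helper names are hypothetical. Polyfill.io made this decision from the User-Agent header rather than by feature detection; note that the polyfill.io domain changed ownership in 2024 and was reported to serve malicious code, so a self-hosted build or trusted mirror is the safer choice today.

```typescript
// --- Syntax: Babel's job ---
// ES2015 source: const, arrow function, default parameter, template literal.
const greetModern = (name: string = "Googlebot"): string => `Hello, ${name}!`;

// Hand-written approximation of equivalent ES5 (same behaviour, older syntax).
var greetES5 = function (name?: string): string {
  if (name === undefined) {
    name = "Googlebot";
  }
  return "Hello, " + name + "!";
};

// --- APIs: polyfills' job ---
// Hypothetical [feature name, runtime check] pairs, run against a global object.
const FEATURE_CHECKS: Array<[string, (g: any) => boolean]> = [
  ["Promise", (g) => typeof g.Promise === "function"],
  ["fetch", (g) => typeof g.fetch === "function"],
  ["Object.assign", (g) => !!g.Object && typeof g.Object.assign === "function"],
  [
    "Array.prototype.includes",
    (g) => !!g.Array && typeof g.Array.prototype.includes === "function",
  ],
];

// Return the names of features the given global object does not provide;
// these are the ones a polyfill bundle would need to supply.
function missingFeatures(g: any): string[] {
  const missing: string[] = [];
  for (const [name, isSupported] of FEATURE_CHECKS) {
    if (!isSupported(g)) {
      missing.push(name);
    }
  }
  return missing;
}
```

In a browser you would call `missingFeatures(window)` and load polyfills only for the names it returns, so modern browsers download nothing extra.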

Google announces plans for updating its rendering service

Martin and Tom explained that dynamic rendering works as a stopgap, but shouldn't have to be a long-term solution for website owners. This is why the team at Google is currently working on improving its rendering service. They don't want Googlebot simply to catch up with the current version of Chrome, because the renderer would then fall out of date again each time a new version of Chrome is launched.

Therefore, Google will soon start working on a process that keeps the rendering service up to date with Chrome. Googlebot's renderer will follow the Chrome release schedule, so it will always use the most recent version of Chrome.

This is exciting news and I’m sure the SEO community will all be eagerly watching and waiting for any updates on this.

Keep up-to-date with Google updates with our newsletter

If you enjoyed this post and want to read more of the latest news from Google, make sure you read our regular recaps of the Google Webmaster Hangouts on our blog, or subscribe to our newsletter where we’ll send announcements and tips from Google straight to your inbox.

Image credit: Header image and slide screenshots from Chrome Dev Summit 2018