Content is the lifeblood of the web and of the search engines that organise its pages into searchable indices. For pages to rank prominently in organic search results, they need to satisfy users’ search queries with high-quality, relevant content. In most cases, strong organic rankings can be achieved with unique text content; in some cases, however, it is possible to rank well with minimal use of text.

The content on a page usually determines which topics and keywords a page is able to rank for. As such, it is important to ensure that content elements are optimised to favourably influence that page’s ranking in organic search, whilst also satisfying the intent behind a searcher’s query.

Satisfying user intent has become increasingly important for search engines in recent years and is expected to become more important moving forward as their algorithms better understand what searchers are looking for.

Correct Amounts of Content For Search Engines

It is important to understand that there is no “correct” amount of content to include on a page in order to rank well in search. The optimal content length varies depending on many factors, including the topic, the industry and the keywords within the search query.

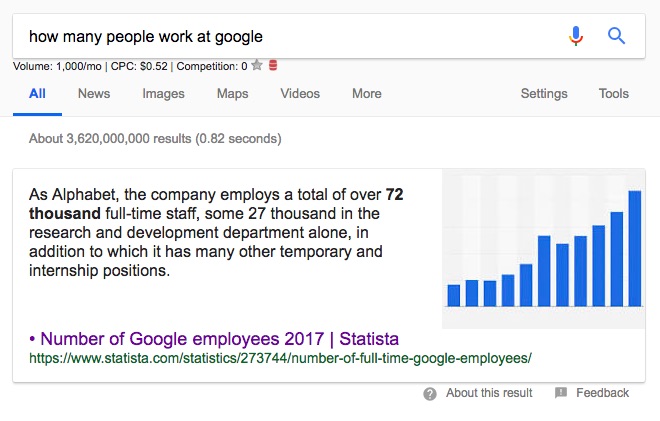

For example, an informational query like ‘how many people work at Google?’ has one definite answer which Google can extract from a page and include in its search results as a featured snippet. By looking at the source page that populates the featured snippet in this example, you can see that the content on the page is fairly minimal.

A large quantity of content is not required to satisfy the user intent behind the search query. Google will instead use signals around the authority and trustworthiness of the content and its source to determine how pages should be ranked.

Conversely, a query like ‘meaning of life’ has no one definitive, correct answer, so it is likely that user intent won’t be satisfied by a page with a relatively low quantity of content. People searching for this query are asking a philosophical question that is likely to require more content than would be needed for simple informational queries.

Despite the variation in content length of pages that rank for different query types, there is much debate about the optimal amount of unique textual content required for strong rankings. However, any unique text is usually considered to be better than none, and sometimes very little is required to differentiate a page from its competitors.

For example, on the product category pages of ecommerce sites, most competitors will not have any unique text. In cases like this, even a small amount of useful, engaging, unique content can be effective in improving rankings in organic search.

Content Considerations

One big consideration regarding content is that it must be crawlable by search engine bots for it to be indexed in search. For example, text that is included as part of an image is still unreadable to search engines in most cases.

Another consideration is the effect of non-unique content. It is generally better to have non-unique content on a page than no content, and this is often the case for product descriptions and similar scenarios where it is impractical to provide unique content for every single page. However, SEO professionals should try to avoid “boilerplate” text wherever possible, that is, standard blocks of text reused on multiple pages with only minimal changes to the original copy.

Finally, it is important to understand that unique content on every page helps safeguard a site against potential duplicate content issues, which can lower search engines’ perception of a website’s quality.
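
One rough way to spot boilerplate or near-duplicate copy across a site's own pages is to compare the overlap of short word sequences ("shingles") between two blocks of text. The sketch below is only an illustration for auditing your own content: the function names, the shingle size of three words and the sample copy are all assumptions, and it makes no claim about how any search engine detects duplicate content.

```python
def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word sequences of length `size` in `text`."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common (0.0 = none, 1.0 = identical)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical category-page copy, invented for this example.
page_a = "Browse our range of garden chairs with free delivery on all orders"
page_b = "Browse our range of garden tables with free delivery on all orders"
page_c = "Teak chairs weather well outdoors and need oiling once a year"

print(f"a vs b: {jaccard_similarity(page_a, page_b):.2f}")  # boilerplate with one word swapped
print(f"a vs c: {jaccard_similarity(page_a, page_c):.2f}")  # genuinely different copy
```

A high score between two pages suggests that one is a lightly edited copy of the other and may be worth rewriting. What counts as "too similar" is a judgment call rather than a fixed threshold.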

Keyword Density & Semantic Relevance

The relevance of a target keyword is determined by far more than simply the number of times that keyword is featured on a page. Search engines mostly base this part of their algorithms on something called “semantic relevance”, which takes into account context along with how many similar words are mentioned throughout the content.

In years gone by when search engines were less sophisticated, the number of times that a keyword was used and the percentage of text that it accounted for were deemed to be very important ranking factors. However, most search engines have now identified keyword stuffing as a potential low quality signal, so keyword densities should now be kept low to avoid potential penalties.
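
Keyword density, as described above, is the percentage of a page's text that a keyword accounts for. A minimal sketch of that arithmetic follows. The tokenisation rule, the function name and the sample sentence are assumptions made for illustration, and different SEO tools may count multi-word phrases differently.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Fraction of the words in `text` that belong to occurrences of `keyword`."""
    # Assumed tokenisation: lowercase runs of letters, digits and apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Count every position where the whole phrase appears in order.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    # Each hit accounts for n words of the total.
    return hits * n / len(words)


# Deliberately stuffed sample copy, invented for this example.
sample = "Red shoes for sale. Buy red shoes online: our red shoes ship free."
density = keyword_density(sample, "red shoes")
print(f"Keyword density: {density:.1%}")
```

Here the phrase accounts for 6 of the 13 words, a density far above what natural copy would produce, which is the kind of pattern that reads as keyword stuffing.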

User Engagement Metrics

Ultimately, search engines aim to provide an exceptional user experience, which means it is advisable to optimise websites to provide the best possible experience for users, rather than focusing solely on optimising for search engines.

Evidence of this can be seen in correlation studies, which appear to indicate a positive correlation between a site’s user engagement and its rankings in Google search results. However, it isn’t clear which way the causation goes, and this doesn’t mean Google and other search engines directly use metrics like bounce rate, click-through rate and dwell time in their ranking algorithms. In Google’s case, this has not been formally confirmed, but evidence from Google employees suggests that click data does affect rankings.

Further evidence comes from Google’s patents, which indicate that it collects and stores information on click-through rates from its search results pages. Google’s patents also indicate that it potentially makes use of dwell time, which is the amount of time a user spends on a page before returning to the search results.
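
For anyone tracking these two metrics in their own analytics, the arithmetic is simple. The sketch below assumes you already have click and impression counts, plus timestamps for when a visitor left the results page and when they came back. The function names and figures are hypothetical, and nothing here reflects how Google itself measures or uses these signals.

```python
from datetime import datetime


def click_through_rate(clicks: int, impressions: int) -> float:
    """Clicks divided by impressions; 0.0 when the result was never shown."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


def dwell_time_seconds(clicked_at: datetime, returned_at: datetime) -> float:
    """Seconds between clicking a result and returning to the results page."""
    return (returned_at - clicked_at).total_seconds()


# Hypothetical figures for a single search result.
ctr = click_through_rate(clicks=30, impressions=1000)
dwell = dwell_time_seconds(
    clicked_at=datetime(2024, 1, 1, 12, 0, 0),
    returned_at=datetime(2024, 1, 1, 12, 2, 30),
)
print(f"CTR: {ctr:.1%}, dwell time: {dwell:.0f}s")
```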

In summary, while engagement metrics may not be directly used in search engine ranking algorithms, the fact that there is a positive correlation between good engagement metrics and prominent rankings is enough to indicate the SEO value of optimising for user experience as this is likely to help deliver improved organic search performance.

Content Position

Another important consideration for on-page content is where it is positioned and how it is structured on a page.

Users want to be able to access the content they require instantly, without having to scroll excessively to find it. As a result, search engines may penalise websites that do not have sufficient relevant content above the fold.

It is worth keeping in mind that Google tries to differentiate a page’s primary content from its boilerplate content (sitewide elements like navigation, footer etc.). Within a page’s primary content, Google doesn’t give more weight to text that is higher up on the page compared to that which is further down.

Equally, the position of links in a page’s primary content doesn’t really matter. Search engine crawlers will crawl a site to understand the context of individual pages regardless of link position, be it in the header, footer or within the main body content.

Content Overlays

A slightly different type of content comes in the form of overlays, which websites typically use for lead generation, such as “subscribe to newsletter” pop-ups.

How do search engines treat content overlays?

Search engines can follow content and links within a crawlable JavaScript pop-up. From a user experience point of view, sometimes these light boxes can be implemented in a way that’s intrusive e.g. when a pop-up is displayed instantly after a page is loaded, or if a pop-up forces a registration in order for content to be shown.

Google judges an interstitial to be intrusive when it makes the primary content less accessible. As such, Google has started to penalise websites for these intrusive pop-ups/interstitials on mobile devices, causing a drop in rankings for pages that include them. However, search engines will not penalise pop-ups that are not intrusive, nor anything that is legally required to be displayed, such as cookie policy notices.

If pop-ups are used on a website, it is best practice to implement a timed delay between a page loading and the pop-up appearing (you can also set a timer for when they should disappear). It is also worth bearing in mind that pop-ups can cause increased bounce rates, meaning that search engines may begin to deem the pages that include them to be less relevant to a user’s intent and, therefore, devalue the page.

User-generated Content (UGC)

One method for keeping pages frequently updated and continuously generating fresh content is to include user-generated content (UGC). UGC can come in the form of user reviews, comments and other content submissions from visitors.

UGC might be particularly useful to include on pages when it is difficult to differentiate the content from a competitor’s page. For example, product descriptions often can’t be changed due to manufacturer restrictions, so the addition of UGC such as reviews adds value and contributes toward making those pages more unique.

While UGC may be helpful in differentiating a page from its competitors, there are a few potential barriers to consider regarding moderation and maintenance:

- All submissions will need to be vetted in order to deal with negativity, bad reviews, insults or inciteful behaviour.

- Submitting users may be using fake identities.

- The authority of user submissions can be questionable.

- Legal issues related to the publication of user-submitted content need to be thoroughly addressed.

- The technical facility for user submissions will need to be rigorously implemented to prevent malicious code injection.
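
To illustrate the last point, one basic defence against injected markup is to escape user-submitted text before placing it in a page. The sketch below uses `html.escape` from Python's standard library. The `render_review` helper, the markup it produces and the sample submission are hypothetical, and escaping on output is only one layer of a properly secured submission system.

```python
import html


def render_review(author: str, body: str) -> str:
    """Build the HTML for one review, escaping everything the user supplied."""
    safe_author = html.escape(author)
    safe_body = html.escape(body)
    return f'<div class="review"><strong>{safe_author}</strong><p>{safe_body}</p></div>'


# A submission that tries to smuggle a script into the page.
submission = "Great chair! <script>alert('stolen cookies')</script>"
rendered = render_review("Sam", submission)
print(rendered)
```

Because the angle brackets and quotes are converted to HTML entities, the browser displays the submission as text instead of executing it.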