Our Robots.txt Overwrite function (in Advanced Settings) allows you to crawl a site using a different version of robots.txt to the one on the primary domain.

There are two main uses for this:

- Developing live robots.txt for large and/or complicated sites
- Crawling a disallowed staging site

Let’s explore the benefits of overwriting your robots.txt file, the problems that these uses can solve and how to use the feature.

The benefits of robots.txt overwriting in a web crawler

See impact of changes across any site

In contrast to Google’s robots.txt Tester, using an overwrite feature in a web crawler allows you to see the impact of your changes across the whole site – either on live or staging – rather than just on one existing URL.

Find new pages that might be crawled

A less restrictive robots.txt file can let search engines discover significantly more pages. Crawling with the changed file applied shows you exactly which new pages these are, something that can only be seen with a full crawl.
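To illustrate why a full crawl is needed, here is a minimal sketch that walks a small, made-up link graph twice, once under a restrictive robots.txt and once under a relaxed one. The `LINKS` graph, the `crawl` helper and the rules are all hypothetical, and Python's standard `urllib.robotparser` only does simple prefix matching, so it approximates rather than reproduces how Googlebot or Lumar interpret a file.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical link graph: each path maps to the paths it links to.
LINKS = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/post-1"],
    "/shop/": ["/shop/shoes", "/shop/hats"],
    "/shop/shoes": ["/shop/shoes/red"],
}


def crawl(robots_text, start="/", user_agent="Googlebot"):
    """Breadth-first walk of LINKS that skips paths the rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    seen, queue = set(), deque([start])
    while queue:
        path = queue.popleft()
        if path in seen or not parser.can_fetch(user_agent, path):
            continue
        seen.add(path)
        # Links are only discovered on pages the crawler may fetch.
        queue.extend(LINKS.get(path, []))
    return seen


restrictive = "User-agent: *\nDisallow: /shop/\n"
relaxed = "User-agent: *\nDisallow: /shop/hats\n"

# Pages that only become reachable once the rules are relaxed.
newly_found = crawl(relaxed) - crawl(restrictive)
print(sorted(newly_found))
```

Note that `/shop/shoes/red` only turns up because `/shop/shoes` became crawlable first, which is why checking individual URLs in isolation would miss it.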

See exactly which pages were affected by the change

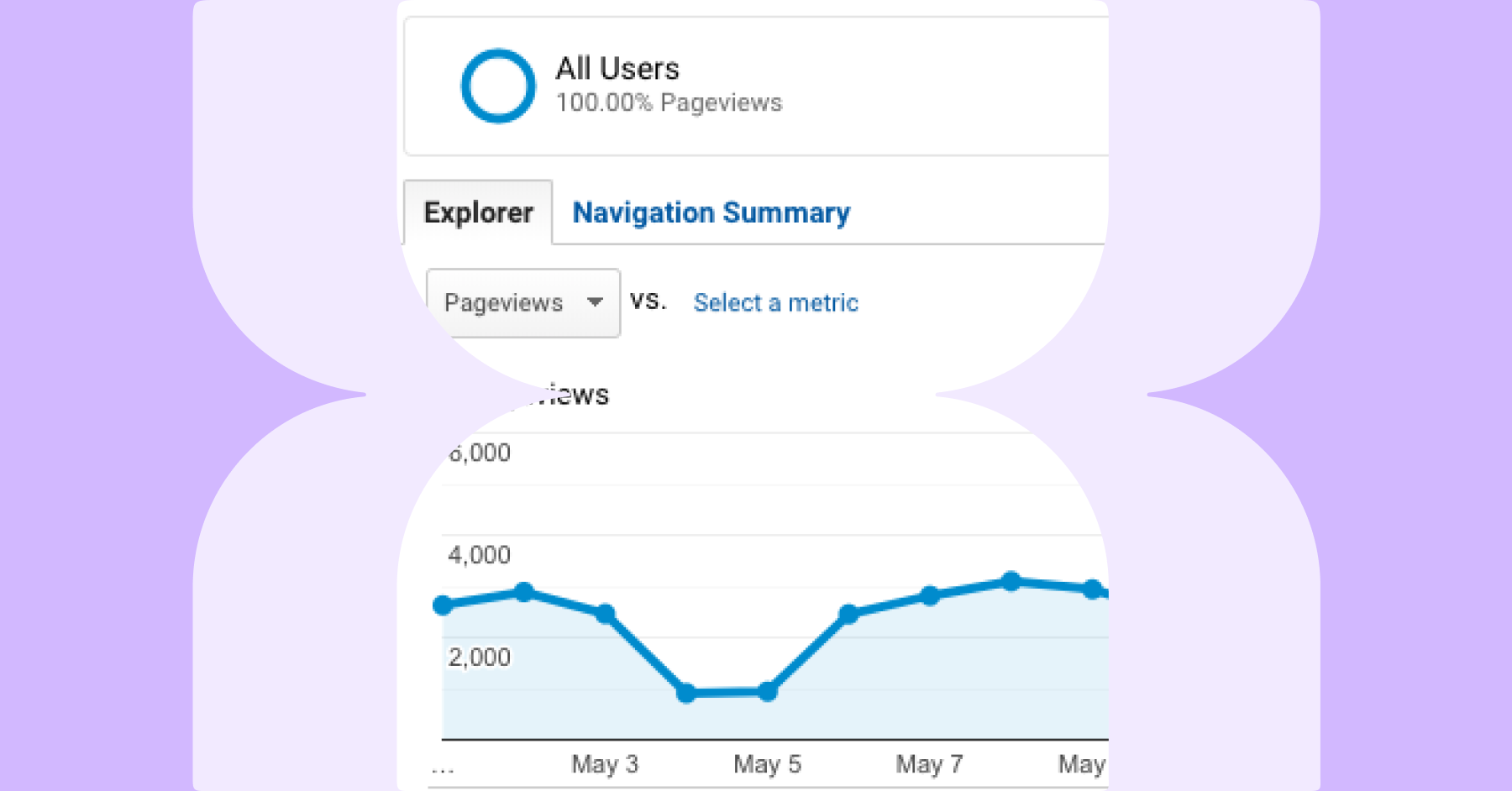

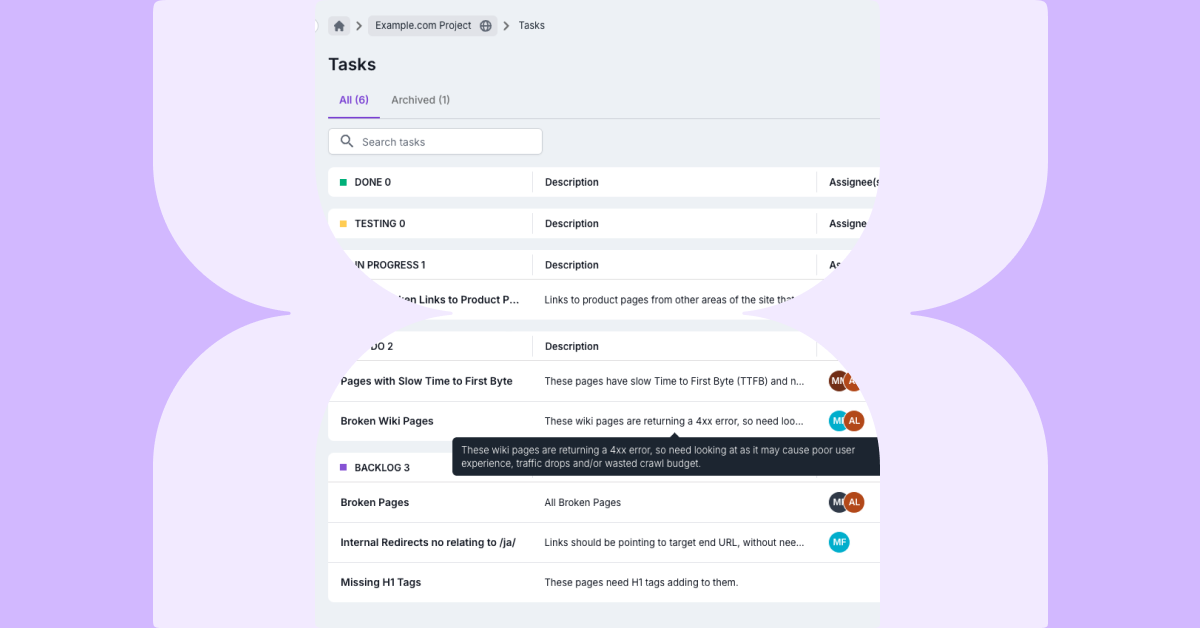

When you crawl using Lumar’s own Robots.txt Overwrite feature, your report will show exactly which URLs were affected by the changed robots.txt, making evaluation very simple.

To make your analysis even easier, you can filter the report. This makes it easier to see patterns and prioritize quick wins that wouldn’t be easy to spot among a long list of URLs, especially when there are parameters and fiddly URLs in there, too.

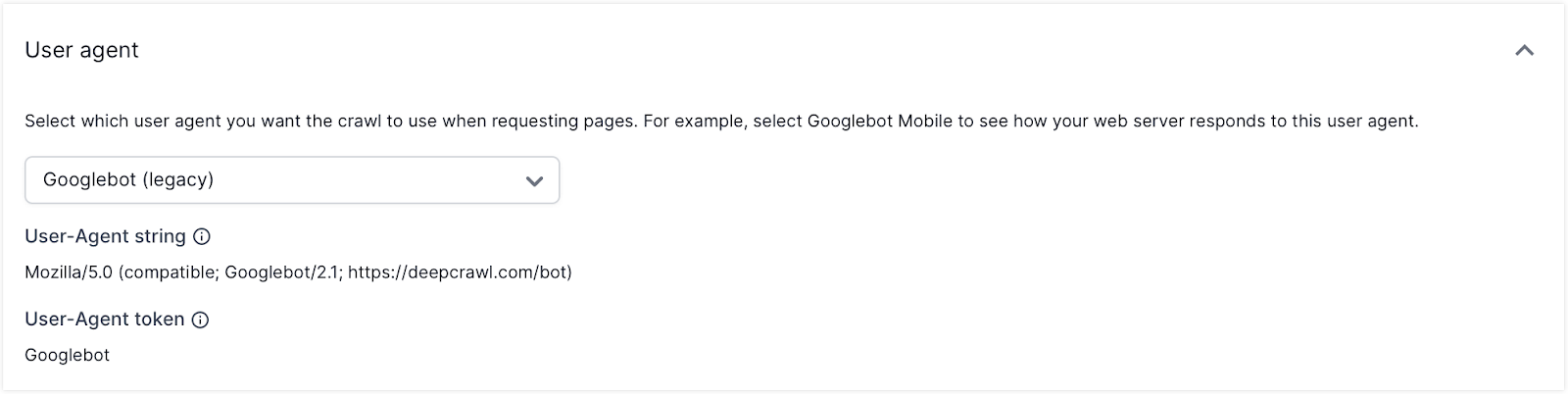

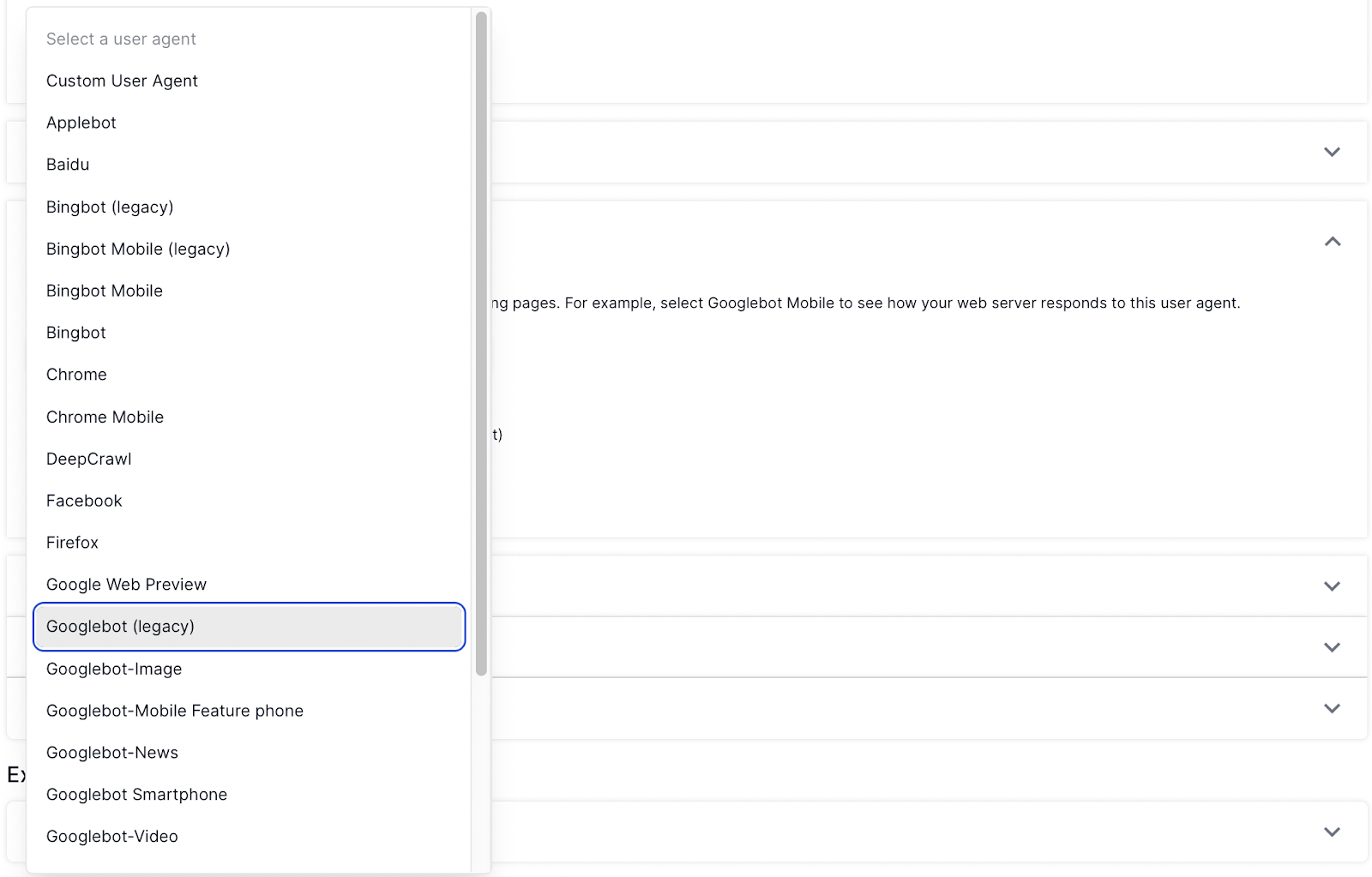
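The idea behind this kind of report can be sketched locally: evaluate the same list of URLs against the current and the proposed robots.txt and keep only the URLs whose status changes. This is an illustration rather than how Lumar builds its report. The `diff_rules` helper, the example rules and the `example.com` URLs are assumptions, and `urllib.robotparser` does not support wildcard patterns, so the sketch sticks to plain path prefixes.

```python
from urllib.robotparser import RobotFileParser


def parse_robots(text):
    """Build a parser from robots.txt content held in a string."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def diff_rules(live_text, candidate_text, urls, user_agent="Googlebot"):
    """Return the URLs whose allowed/disallowed status differs between files."""
    live = parse_robots(live_text)
    candidate = parse_robots(candidate_text)
    changes = {}
    for url in urls:
        before = live.can_fetch(user_agent, url)
        after = candidate.can_fetch(user_agent, url)
        if before != after:
            changes[url] = "newly allowed" if after else "newly disallowed"
    return changes


# Hypothetical current and proposed rules.
LIVE = "User-agent: *\nDisallow: /search/\nDisallow: /products/\n"
CANDIDATE = "User-agent: *\nDisallow: /search/\nDisallow: /products/archive/\n"

URLS = [
    "https://www.example.com/",
    "https://www.example.com/search/shoes",
    "https://www.example.com/products/red-shoe",
    "https://www.example.com/products/archive/old-shoe",
]

changes = diff_rules(LIVE, CANDIDATE, URLS)
for url, status in changes.items():
    print(status, url)
```

Only the URLs that changed status are returned, which mirrors the filtering step described above: unchanged URLs are noise when you are evaluating a rule change.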

Test with different user agents

A web crawler will allow you to test the robots.txt file using different Googlebot, Bingbot and other user agents within the same tool, to see how different search engines will crawl your site.
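As a rough local illustration of per-user-agent rules, the sketch below checks one URL against a robots.txt with separate groups for Googlebot, Bingbot and everyone else. The rules, the URL and the `access_by_agent` helper are invented for the example, and the standard-library parser is only an approximation of how each search engine actually reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with a separate group per crawler.
ROBOTS = """\
User-agent: Googlebot
Disallow: /private/

User-agent: Bingbot
Disallow: /private/
Disallow: /beta/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS.splitlines())


def access_by_agent(url, agents=("Googlebot", "Bingbot", "SomeOtherBot")):
    """Map each user agent to whether it may fetch the URL."""
    return {agent: parser.can_fetch(agent, url) for agent in agents}


result = access_by_agent("https://www.example.com/beta/new-feature")
print(result)
```

Here the same URL is open to Googlebot, blocked for Bingbot by its own group, and blocked for any other bot by the catch-all group.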

Use case 1: Developing live robots.txt for large and/or complicated sites

The problem:

A robots.txt file requires updating periodically to accommodate changes in your site architecture, and to improve your site’s crawl efficiency. There might be existing problems that are resulting in pages being incorrectly disallowed, or a large volume of URLs that have no value but are using up a lot of crawl budget.

Changing a robots.txt file is always risky, as a single incorrect character can disallow the entire site and cause it to drop out of search results.
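A small sketch of how little it takes: an empty `Disallow:` value allows everything, while `Disallow: /` blocks everything, so the two files below differ by a single character. The `blocked_share` helper and the `example.com` URLs are hypothetical, and the check uses Python's `urllib.robotparser` as a stand-in for a real crawler.

```python
from urllib.robotparser import RobotFileParser


def blocked_share(robots_text, urls, user_agent="Googlebot"):
    """Return the fraction of URLs that the rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    blocked = [u for u in urls if not parser.can_fetch(user_agent, u)]
    return len(blocked) / len(urls)


URLS = [
    "https://www.example.com/",
    "https://www.example.com/about",
    "https://www.example.com/products/red-shoe",
]

intended = "User-agent: *\nDisallow:\n"   # empty value: nothing is blocked
typo = "User-agent: *\nDisallow: /\n"     # one extra character: everything is blocked

print(blocked_share(intended, URLS))
print(blocked_share(typo, URLS))
```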

The Search Console robots.txt Tester allows you to test a limited number of URLs, but this won’t give you a full understanding of the impact of a change in robots.txt for a large or complicated website.

The solution:

Using Lumar to crawl your live site with your robots.txt changes applied means you can see the impact of your changes without affecting how your site is indexed in real search results, and fix any issues before they affect your performance.

How-to:

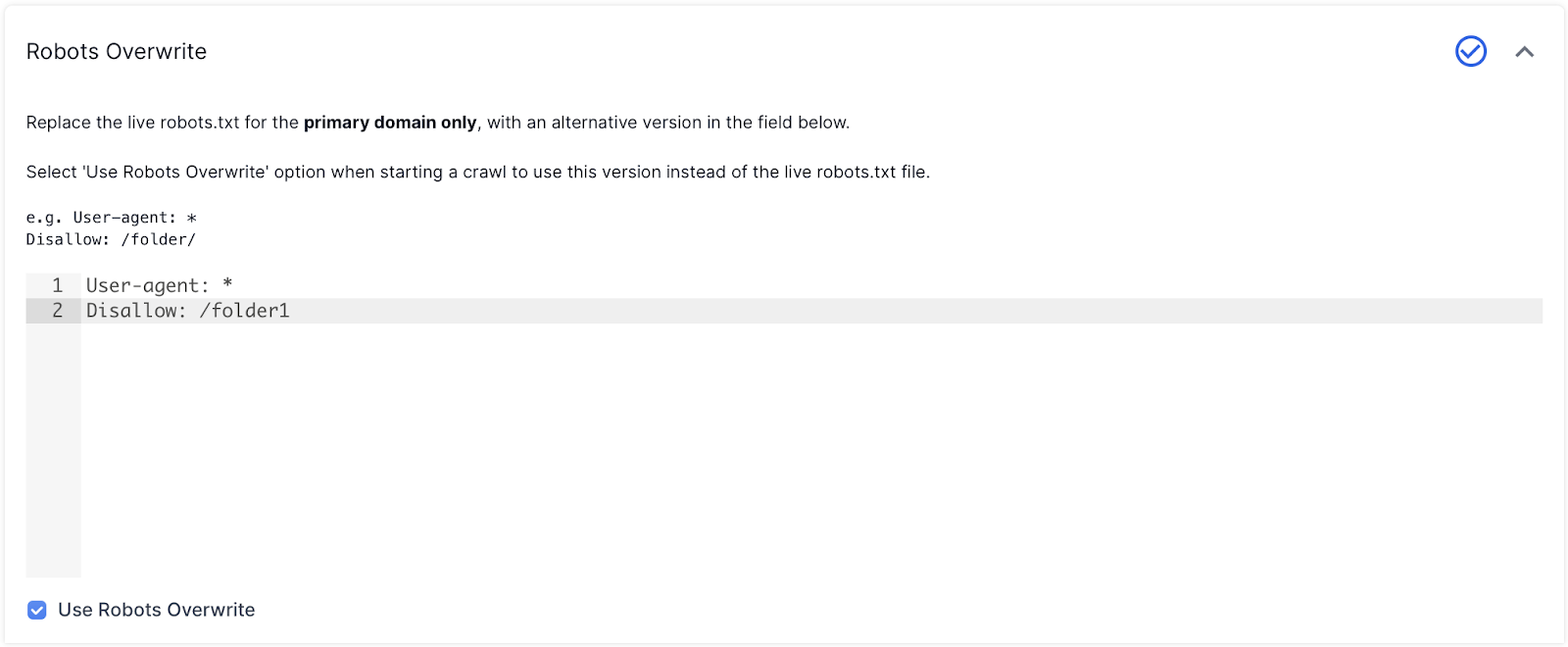

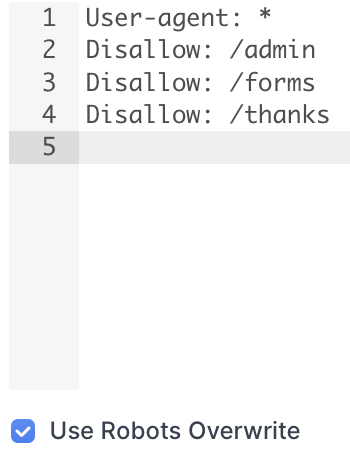

1. Add your modified robots.txt file into the Robots Overwrite field in Advanced Settings, making sure to check the ‘Use my custom robots.txt’ checkbox before you crawl.

2. You can then analyze which URLs are disallowed or allowed because of the updated robots.txt file.

When you are happy with the results, you can push the updated robots.txt live. To start crawling with the live robots.txt file again, uncheck the ‘Use my custom robots.txt’ checkbox before the next crawl.

Use case 2: Crawling a disallowed staging site

The problem:

Staging sites are often prevented from being indexed in search engines, with every staging URL being disallowed in the domain’s robots.txt file. However, this setup causes issues when you want to crawl the staging site to check for indexing issues: the staging robots.txt file is completely different to the one on the live site, so the crawl produces misleading results. Pages that are only linked from disallowed pages won’t be included in the crawl either.

The solution:

When crawling a staging site before putting changes live, you need a way to change the robots.txt file on the staging site to reflect the settings on the live site.

Using the robots.txt Overwrite function in Lumar, this change is really easy and you can crawl your staging site just like a live site.

How-to:

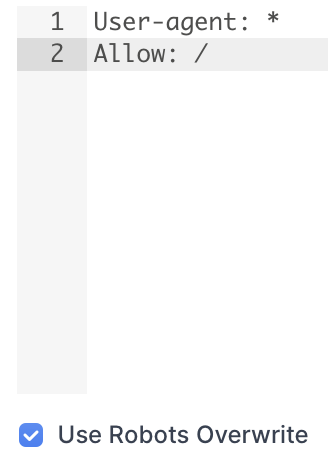

1. Paste the robots.txt from the live site, or a generic ‘allow all’ robots.txt, into the Robots Overwrite field in Advanced Settings. Make sure to tick the ‘Use Robots Overwrite’ checkbox.

2. Continue setting up a Universal Crawl as normal, making sure to set the user agent as the bot you want to test.
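For reference, a generic ‘allow all’ robots.txt is usually written in one of two ways, shown below next to a typical ‘block everything’ staging file. The sketch checks each one against a hypothetical staging URL using Python's `urllib.robotparser`. The hostname and the `is_crawlable` helper are assumptions for illustration, and the exact text you paste into the overwrite field should match your own site's needs.

```python
from urllib.robotparser import RobotFileParser

# Typical staging file: every URL is disallowed.
STAGING = "User-agent: *\nDisallow: /\n"

# Two common ways to write a generic "allow all" file.
ALLOW_ALL_EMPTY_DISALLOW = "User-agent: *\nDisallow:\n"
ALLOW_ALL_EXPLICIT = "User-agent: *\nAllow: /\n"


def is_crawlable(robots_text, url, user_agent="Googlebot"):
    """Check one URL against robots.txt content held in a string."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser.can_fetch(user_agent, url)


url = "https://staging.example.com/category/page"  # hypothetical staging URL
print(is_crawlable(STAGING, url))
print(is_crawlable(ALLOW_ALL_EMPTY_DISALLOW, url))
print(is_crawlable(ALLOW_ALL_EXPLICIT, url))
```

Using a copy of the live file instead of an ‘allow all’ file is the better choice when the goal is to predict how the site will behave after launch, since it keeps the live disallow rules in play.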