The latest session in Lumar’s ongoing SEO and digital marketing webinar series looks at programmatic SEO and explores how marketers can use technical SEO improvements to boost indexability across their website — even at enterprise scale.

We were pleased to welcome Anna Uss, SEO team lead at Snyk, a developer-focused web security tool. She has a wealth of experience in implementing SEO at scale and a particular interest in how programmatic content can be used to bolster digital marketing campaigns.

Read on for our key takeaways from the presentation, or watch the full video (including best practice examples, poll results, and Q&A session) above.

Webinar Recap: SEO Indexability Case Study

What is programmatic SEO?

Taking a programmatic SEO approach helped the team at Snyk develop content for millions of pages on their website. For Uss, programmatic SEO boils down to the strategy of publishing unique, high-quality pages at scale using templates and databases. The goal is to drive traffic through thousands, or even millions, of pages.

“In order to try programmatic you need to have dev resources,” she says. “More importantly, you need to have a good idea and a good strategy.”

Uss also points out that there is an assumption among marketers that programmatic SEO pages are low quality. But it doesn’t have to be this way.

“You need to make sure that you identify a need and you’re adding unique value with your pages,” she says. “Obviously you’re going to take some information from the web, you’re going to take some information from databases. But the way you compile it, the way you serve it to the user, and the additional benefit you put on top of it needs to solve a problem.”

Ultimately, for Uss, a programmatic SEO approach needs to be quality-first. She notes that the content Snyk developed this way was not mass-produced by ChatGPT or a similar AI tool. Instead, their programmatic approach uses templates and databases to scale content and indexability efforts while keeping content quality and usefulness top of mind.
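To make the templates-and-databases idea concrete, here is a minimal sketch in Python. It is not Snyk's implementation: the template fields, the URL pattern, and the sample records are all hypothetical, and the in-memory list stands in for rows that would normally come from a database.

```python
from string import Template

# Hypothetical page template; the field names are illustrative only.
PAGE_TEMPLATE = Template(
    "<title>$name package overview | Example Site</title>\n"
    "<h1>$name</h1>\n"
    "<p>Latest version: $version. Weekly downloads: $downloads.</p>"
)

# Made-up records standing in for database rows.
RECORDS = [
    {"name": "example-a", "version": "1.2.0", "downloads": 1500},
    {"name": "example-b", "version": "0.9.1", "downloads": 320},
]


def render_pages(records):
    """Return a mapping of URL path -> rendered HTML, one page per record."""
    pages = {}
    for record in records:
        path = f"/packages/{record['name']}/"  # assumed URL pattern
        pages[path] = PAGE_TEMPLATE.substitute(record)
    return pages


if __name__ == "__main__":
    for path, html in render_pages(RECORDS).items():
        print(path)
        print(html)
```

The template only handles the scaling part. The unique value Uss describes still has to come from what the records contain and how the page presents them.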

4 challenges of programmatic SEO

Programmatic SEO comes with its own set of challenges. Uss breaks the challenges to watch out for into four core areas:

1. Crawlability

“When I talk about crawlability, I mean structure,” she says. “It is critical for you to think ahead about how you will structure the asset in a meaningful way that makes sense to the user and to Googlebot.”

2. Thin Content

There is an inherent limit to content that is built programmatically, according to Uss. Google might not deem certain pages to be worth indexing, especially if the URLs contain thin content (that is, not enough content on a page, or content that doesn’t meet a user’s search intent).

3. Duplicate Content

Duplicate content, meaning content that appears on more than one page of your site, can also cause SEO issues. The risk is that search engines have to work out which version is the ‘true source’ they should index as the representative page for this content. (Note: canonical tags can help with duplicate content issues.)

4. Indexing & Crawling Budget

“Even though you might have 100k ‘most important’ pages, you still have two million pages and you want all of them indexed,” Uss says. “How do you do that without wasting your crawling budget? And how do you convince Google that all of those pages are valid?”
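For the duplicate content challenge, the canonical tag mentioned in the note above could be generated along these lines. This is a sketch under stated assumptions, not a method from the webinar: it treats two pages as duplicates only when their text is identical after lowercasing and collapsing whitespace, and it picks the shortest URL in each group as the canonical one. Both rules are illustrative choices.

```python
import hashlib
from collections import defaultdict


def canonical_tags(pages):
    """pages: mapping of URL -> body text. Return URL -> canonical link tag."""
    groups = defaultdict(list)
    for url, body in pages.items():
        # Normalize case and whitespace so trivial differences do not matter.
        normalized = " ".join(body.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)

    tags = {}
    for urls in groups.values():
        # Assumed rule: the shortest URL (ties broken alphabetically) is canonical.
        canonical = min(urls, key=lambda u: (len(u), u))
        for url in urls:
            tags[url] = f'<link rel="canonical" href="{canonical}">'
    return tags


if __name__ == "__main__":
    sample = {
        "https://example.com/a/": "Same text",
        "https://example.com/a/?ref=x": "same   TEXT",
        "https://example.com/b/": "Other text",
    }
    for url, tag in canonical_tags(sample).items():
        print(url, "->", tag)
```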

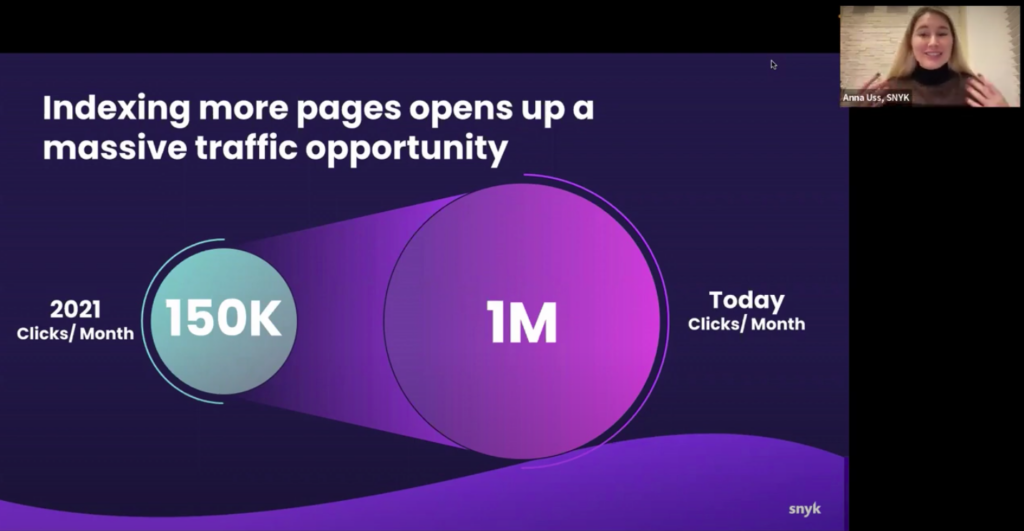

Indexing more pages opens up a massive traffic opportunity

If a site has two million pages with only 100,000 pages indexed, there are 1.9 million that are not indexed — that’s leaving a lot of opportunity for website traffic on the table.

“Imagine if each one of those pages would bring you at least one click [a month],” she says. “It means you are sitting on 1.9 million clicks a month that you are not seeing at all.”
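The arithmetic behind that estimate is simple enough to sketch. The function name and the `clicks_per_page` parameter are hypothetical; the one-click-per-page figure is Uss's illustrative assumption, not a measured value.

```python
def unindexed_click_potential(total_pages, indexed_pages, clicks_per_page=1):
    """Return (unindexed page count, monthly clicks if each page earned clicks_per_page)."""
    unindexed = total_pages - indexed_pages
    return unindexed, unindexed * clicks_per_page


if __name__ == "__main__":
    # Figures from the example above: 2 million pages, 100,000 indexed.
    print(unindexed_click_potential(2_000_000, 100_000))  # (1900000, 1900000)
```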

So, how do you unlock that potential?

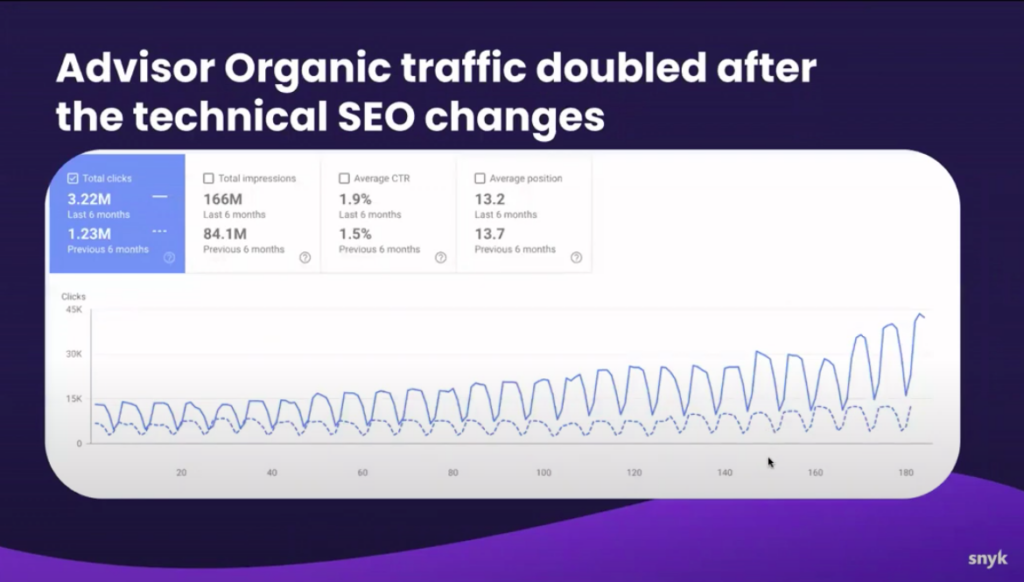

Uss refers to work she has done to index more pages on the Snyk website, where organic clicks have more than doubled in the past six months.

She also notes that the average click-through rate (CTR) has increased while the average position has stayed the same. This suggests that the newly indexed pages are of equal quality to the pages on the site that were already indexed.

So what changes did Uss and Snyk make to achieve this?

First, Snyk created directory pages to make their content assets more crawlable. Uss stresses the importance of crawlability when it comes to indexing more pages.

Next, they set about improving their internal linking on the pages.

“If you have a very large-scale website, think in terms of patterns,” Uss advises. “Think how you can link pages together. What are the criteria that groups them?”

Snyk also linked to these directory pages from each package page on their site.
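The directory-page pattern described above can be sketched as follows. The grouping criterion (first letter of the package name), the page size, and the URL scheme are assumptions made for illustration. The webinar recap does not say how Snyk groups its pages. The sketch also assumes every package name is a non-empty string.

```python
from collections import defaultdict


def build_directory(package_names, page_size=100):
    """Group packages by first letter and split each group into paginated directory pages.

    Returns a mapping of directory page URL -> list of package page URLs it links to.
    """
    groups = defaultdict(list)
    for name in sorted(package_names):
        groups[name[0].lower()].append(name)

    directory = {}
    for letter, names in groups.items():
        for start in range(0, len(names), page_size):
            page_number = start // page_size + 1
            url = f"/packages/directory/{letter}/{page_number}/"  # assumed URL scheme
            directory[url] = [f"/packages/{n}/" for n in names[start:start + page_size]]
    return directory


def parent_directory_links(directory):
    """Map each package page URL to the directory page that lists it."""
    return {pkg: dir_url for dir_url, pkgs in directory.items() for pkg in pkgs}


if __name__ == "__main__":
    directory = build_directory(["alpha", "apple", "avocado", "beta"], page_size=2)
    for url, links in directory.items():
        print(url, links)
    print(parent_directory_links(directory)["/packages/beta/"])
```

Linking in both directions means a crawler can reach any package page from a directory page, and any directory page from a package page, which is the pattern-based linking Uss recommends.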

How to measure the impact of technical SEO changes

For Uss, it’s important to be able to draw conclusions about your SEO changes quickly, so you can tell whether you’re moving in the right direction. She splits measurement practices into two categories: internal (your own tech stack) and external (tools such as Google Search Console).

Uss describes how Snyk incorporated Lumar’s website intelligence and technical SEO platform into their internal tech stack.

“We knew that we had a problem because we only had 400k pages indexed and we had two million pages [on the site],” she says. “We knew we were sitting on a huge opportunity there, but we didn’t know why Google was not indexing [the URLs].”

Snyk carried out an initial website crawl with Lumar and cross-referenced this with Google Search Console. As expected, both were only able to crawl around a third of the full number of pages on the site.
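A cross-reference like this boils down to comparing URL lists. The sketch below assumes you have three plain lists of URLs: every URL the site should expose, the URLs a crawler reached, and the URLs reported as indexed. How those lists are exported from Lumar or Google Search Console is not covered here, and the function name is hypothetical.

```python
def compare_crawl_to_index(all_urls, crawled_urls, indexed_urls):
    """Summarize which known URLs were not crawled and which were crawled but not indexed."""
    known = set(all_urls)
    crawled = set(crawled_urls) & known  # ignore crawled URLs outside the known set
    indexed = set(indexed_urls)
    return {
        "not_crawled": known - crawled,
        "crawled_not_indexed": crawled - indexed,
        "crawl_coverage": len(crawled) / len(known) if known else 0.0,
    }


if __name__ == "__main__":
    report = compare_crawl_to_index(
        all_urls=["/a/", "/b/", "/c/", "/d/"],
        crawled_urls=["/a/", "/b/"],
        indexed_urls=["/a/"],
    )
    print(report["not_crawled"])
    print(report["crawled_not_indexed"])
    print(report["crawl_coverage"])
```

Running the same comparison before and after a structural change gives a quick internal read on whether more of the site has become reachable.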

After implementing the changes detailed above, Snyk crawled the site with Lumar again. Crawling internally at this stage is useful for two reasons: it lets the team report on progress and advocate for ongoing investment in technical SEO, and it shows whether the changes are positive before Google crawls the site again.

Then comes external measurement using tools like Google Search Console. As Uss highlights, this data is not as immediate as what can be seen internally with tools such as Lumar, but it is useful for getting a comprehensive view.

She points to how Google Search Console can show you organic traffic performance, as well as provide an indexing report. She also notes how crucial the indexing report is for tracking your updated sitemaps. “Never ignore it,” she says. “Upload fresh files and track how Google is indexing them.”
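For a site with millions of URLs, producing those fresh sitemap files usually means splitting the URL list across several files, since the sitemap protocol allows at most 50,000 URLs per file. The sketch below builds the XML with Python's standard library. The function name is hypothetical, and writing the files to disk or listing them in a sitemap index is left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # per-file limit in the sitemap protocol


def build_sitemaps(urls, max_urls=MAX_URLS_PER_FILE):
    """Return a list of sitemap XML strings, each holding at most max_urls entries."""
    sitemaps = []
    for start in range(0, len(urls), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + max_urls]:
            entry = ET.SubElement(urlset, "url")
            ET.SubElement(entry, "loc").text = url
        sitemaps.append(ET.tostring(urlset, encoding="unicode"))
    return sitemaps


if __name__ == "__main__":
    sample_urls = [f"https://example.com/packages/{i}/" for i in range(5)]
    for xml_text in build_sitemaps(sample_urls, max_urls=2):
        print(xml_text)
```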

Key takeaways on indexability & programmatic SEO

Programmatic SEO shouldn’t be about creating a flood of low-quality pages that are automatically generated simply to game Google. As Uss highlights, all content created for your website should be valuable and helpful to human web users.

SEOs and digital marketing teams need to be savvy with how they structure programmatic content and how it is categorized to appeal to both users and search engine bots. They also need to be careful that they aren’t producing thin or duplicate content, and be prepared to monitor their efforts and produce reports that will help them get buy-in and resources for SEO projects within their organization.

That being said, programmatic SEO has the potential to unlock the true value of the content on your website. Snyk managed to more than double their organic clicks in just six months by ensuring that around a million more of their pages were being indexed.

Having a decent measurement strategy in place is vital. An internal tech stack using technical SEO tools such as Lumar for more immediate monitoring, paired with external search engine tools like Google Search Console, can ensure that any changes made to your content and site architecture are taking your indexability efforts in the right direction.