What better way to start off the new year of our monthly webinar series than with a brilliant session on the ins and outs of internal linking with Kevin Indig, Mentor for Growth at German Accelerator, and Jon Myers, Strategy Consultant & CAB Chairman at DeepCrawl.

We don’t want anyone to miss out on any of the internal linking wisdom that was shared during this session, so we recorded the webinar and took notes of all of the key points for you.

You can watch the full recording of the webinar here:

Here are the slides that Kevin presented:

The DeepCrawl team would like to say a special thank you to Kevin for coming along and delivering a really insightful and actionable presentation. We’d also like to thank Jon Myers for hosting, and everyone who joined the webinar live and asked Kevin such interesting questions.

Great webinar! Thanks, @Kevin_Indig! https://t.co/EARSSMcfJj

— Nathan Ralph (@thenateralph) January 29, 2019

The importance of internal linking

SEO is getting harder as the industry and search engines evolve, so we have to shift our priorities to make a positive impact on the sites that we manage. In Kevin’s opinion, the top four factors to focus on, in order of importance, are:

- Content

- Internal linking

- User experience

- On-page

There is often a big focus on external linking and on how to build a website and brand that other sites will want to link to. However, internal linking is just as important and deserves just as much attention.

Kevin let us in on some tried-and-tested internal linking methods that caused a 160% increase in organic traffic for a website that he worked on at Atlassian. Let’s have a look at his process in more detail.

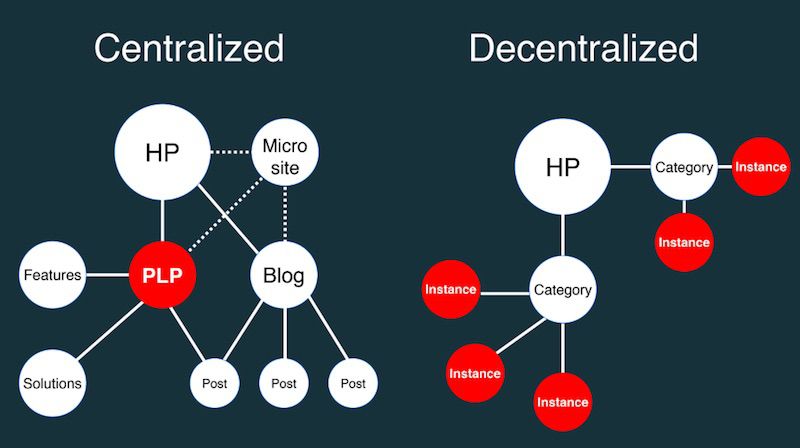

Centralized vs decentralized internal linking

There are two main ways of structuring a website’s internal linking, each with a fundamentally different strategy for passing PageRank:

- Centralized linking

- Decentralized linking

Centralized linking

A website’s internal linking funnels equity to a main product landing page where a customer would convert. PageRank from across the site is concentrated towards a particular page, and the user flow follows the same path.

This method suits SaaS websites, apps and enterprise websites. A centralized linking strategy would work well for a website like Atlassian that focuses on a few key product pages.

Decentralized linking

A website’s internal linking passes equity across a few different products or instances where a customer would convert. PageRank is spread out across the website, and the user flow isn’t tied to a single conversion path.

This method suits ecommerce sites, social networks and marketplace websites. A decentralized linking strategy would work well for a website like Pinterest where users can sign up on any pin page.
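One rough way to check which pattern a site leans towards (our own heuristic, not a method from the webinar) is to measure how much of its internal linking points at a single page. The sketch below uses a hypothetical `top_target_share` helper over made-up (source, target) link pairs, such as you might export from a crawl.

```python
from collections import Counter


def top_target_share(edges):
    """Share of all internal links that point at the single most-linked URL."""
    # Count inlinks per target URL.
    inlinks = Counter(target for _source, target in edges)
    total = sum(inlinks.values())
    if total == 0:
        return 0.0
    _url, count = inlinks.most_common(1)[0]
    return count / total


# Hypothetical (source, target) link pairs.
centralized = [
    ("/blog/a", "/product"),
    ("/blog/b", "/product"),
    ("/docs/x", "/product"),
    ("/blog/a", "/blog/b"),
]
decentralized = [
    ("/pin/1", "/pin/2"),
    ("/pin/2", "/pin/3"),
    ("/pin/3", "/pin/4"),
    ("/pin/4", "/pin/1"),
]

print(top_target_share(centralized))    # 0.75
print(top_target_share(decentralized))  # 0.25
```

A value near 1 suggests links are funneled to one page, while a value near 1/N (for N linked pages) suggests they are spread out. Raw link counts ignore how PageRank actually flows, so treat this as a quick sanity check only.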

CheiRank & PageRank

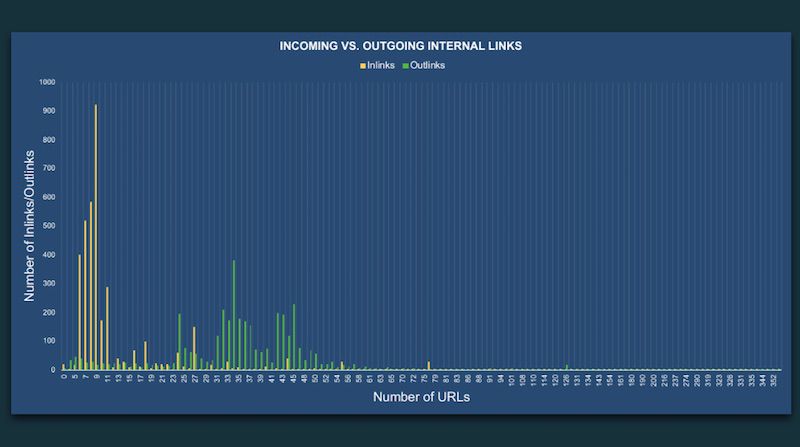

It’s important to analyze the strength of the pages on your website by comparing their total incoming and outgoing links and looking for any imbalance between them. This is where CheiRank and PageRank come in.

CheiRank can be understood as an inverse PageRank. Where PageRank is based on the incoming link equity received from other pages, CheiRank is based on the link equity that is given away through a page’s outgoing links.

Working out the balance between PageRank and CheiRank gives a good insight into the strength of the pages across your website. Kevin then went into more detail on how you can go about calculating page strength.
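As a minimal sketch of the idea, the Python below computes PageRank by power iteration on a tiny, made-up link graph, and gets CheiRank by running the same calculation on the graph with every link reversed. The damping factor of 0.85 is the conventional choice rather than something Kevin specified, and the helper names are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=100):
    """PageRank by power iteration over {url: [urls it links to]}."""
    nodes = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(nodes)
    rank = {u: 1.0 / n for u in nodes}
    for _ in range(iterations):
        # Rank held by pages with no outlinks is spread evenly over all pages.
        dangling = sum(rank[u] for u in nodes if not links.get(u))
        new_rank = {u: (1 - damping) / n + damping * dangling / n for u in nodes}
        for source, targets in links.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


def cheirank(links, damping=0.85, iterations=100):
    """CheiRank: PageRank on the same graph with every link reversed."""
    reversed_links = {u: [] for u in links}
    for source, targets in links.items():
        for target in targets:
            reversed_links.setdefault(target, []).append(source)
    return pagerank(reversed_links, damping, iterations)


# Hypothetical four-page site.
site = {
    "/": ["/product", "/blog"],
    "/blog": ["/product", "/blog/post"],
    "/blog/post": ["/product"],
    "/product": [],
}
pr = pagerank(site)
cr = cheirank(site)
for url in sorted(site):
    print(f"{url:12} PR={pr[url]:.3f} CR={cr[url]:.3f}")
```

In this toy graph, `/product` has the highest PageRank and the lowest CheiRank (it collects equity without passing any on), while `/` shows the opposite pattern: no incoming links, but the most outgoing equity.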

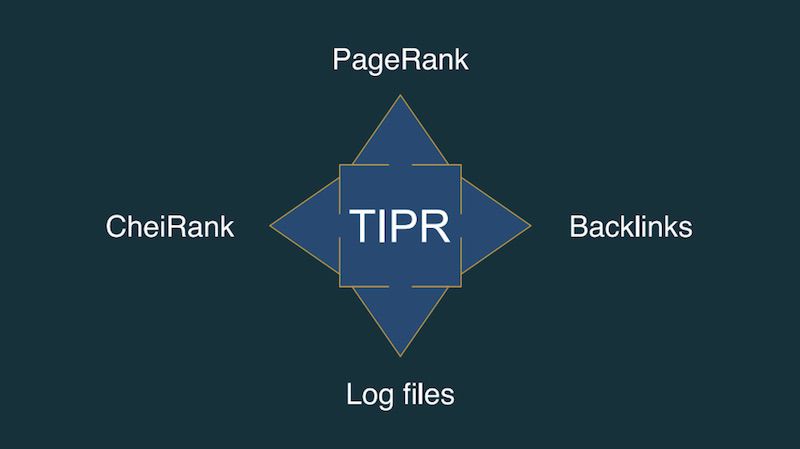

The TIPR model & calculating PageRank

Calculating internal PageRank is a good starting point for figuring out the strength and weighting of your different pages. DeepCrawl calculates this for you in every crawl using a metric we call DeepRank, a measurement of internal link weight based on Google’s PageRank algorithm.

You can take this model one step further by combining external sources to get the full picture of page strength. Those additional sources include backlinks which provide external PageRank insights, as well as log files which provide Googlebot crawling data and perceived page importance.
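Kevin didn’t spell out exactly how backlinks are combined with internal PageRank. One possible approach, shown here purely as an assumption, is personalized PageRank: instead of teleporting to every page equally, the random surfer teleports in proportion to each page’s backlink count, so externally linked pages push more equity into the internal graph. The `+1` smoothing, the helper name and all URLs and counts are illustrative.

```python
def backlink_weighted_pagerank(links, backlinks, damping=0.85, iterations=100):
    """Personalized PageRank whose teleport step favors pages with backlinks."""
    nodes = sorted(
        set(links) | {t for targets in links.values() for t in targets} | set(backlinks)
    )
    # Teleport weight = backlinks + 1, so pages without backlinks still get some.
    weights = {u: backlinks.get(u, 0) + 1 for u in nodes}
    total_weight = sum(weights.values())
    teleport = {u: w / total_weight for u, w in weights.items()}

    rank = dict(teleport)
    for _ in range(iterations):
        dangling = sum(rank[u] for u in nodes if not links.get(u))
        # Random jumps and rank from dangling pages both follow the teleport vector.
        new_rank = {u: (1 - damping + damping * dangling) * teleport[u] for u in nodes}
        for source, targets in links.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical site: /a and /b are linked identically, but only /b has backlinks.
site = {"/": ["/a", "/b"], "/a": ["/"], "/b": ["/"]}
backlinks = {"/b": 50}
rank = backlink_weighted_pagerank(site, backlinks)
print({url: round(score, 3) for url, score in rank.items()})
```

With an empty `backlinks` dict, the teleport vector becomes uniform and this reduces to ordinary internal PageRank.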

The TIPR (True Internal PageRank) model is made up of:

- PageRank

- CheiRank

- Backlinks

- Log files

Here’s Kevin’s process for incorporating the TIPR model into his internal linking optimization strategy:

- Crawl the website using a tool like DeepCrawl.

- Calculate internal PageRank and CheiRank.

- Add backlink data to get true internal PageRank (you can use any tool you like for this; just make sure you use the same tool consistently).

- Add crawl rate data from log files to show the impact of internal and external linking changes over time and how they affect Googlebot’s crawling.

- Sort and rank these different metrics.

- Optimize your internal linking to get the right balance.

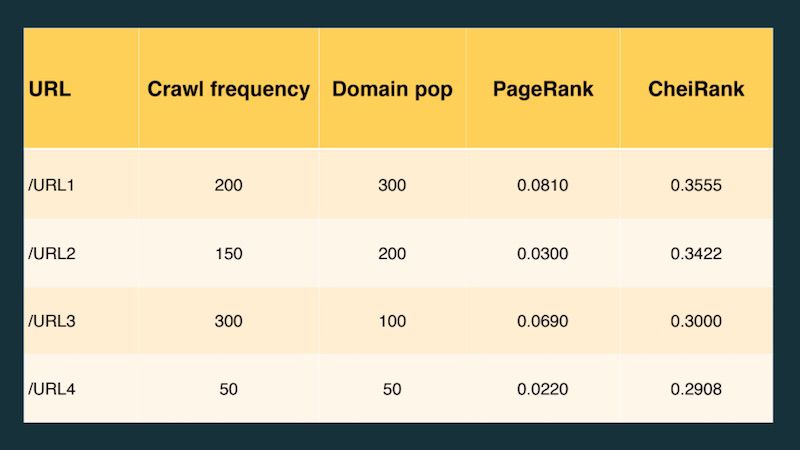

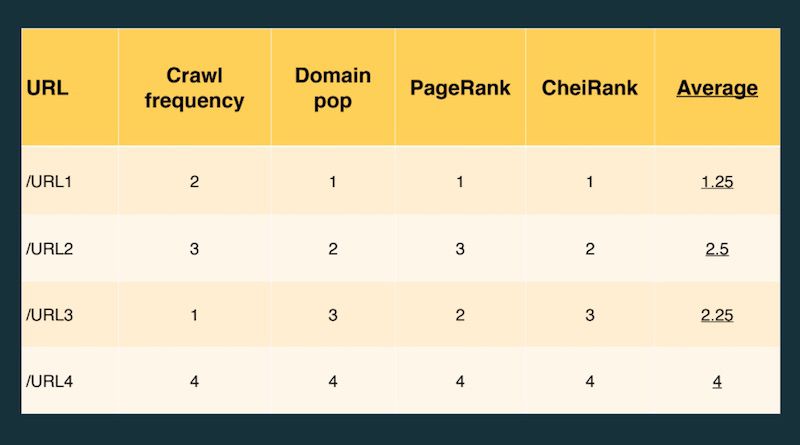

Here’s an example of the data you might get from pulling in the different metrics from the TIPR model:

You then need to rank each URL for each metric; for example, /URL3 is ranked 1 for crawl frequency because it was crawled more often than the others. Once you’ve ranked every URL for every metric, you can calculate an average rank for each URL. In this example, /URL1 had the highest overall page strength:

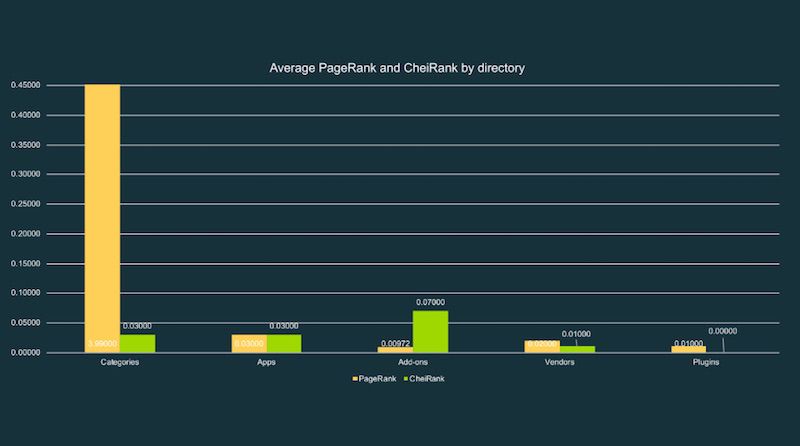
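The ranking step can be sketched as below, assuming every metric is "higher is stronger" (reverse the sort for any metric you treat the other way). The metric names and numbers are invented for illustration and are not the data from Kevin’s slides; ties are broken by URL order, which is a simplification.

```python
def average_rank(metrics):
    """metrics: {metric: {url: value}}; higher values rank better (rank 1).

    Returns {url: mean rank across all metrics}; lower means stronger.
    """
    urls = sorted({url for values in metrics.values() for url in values})
    rank_sums = {url: 0 for url in urls}
    for values in metrics.values():
        # Missing values count as 0; ties keep alphabetical URL order.
        ordered = sorted(urls, key=lambda url: values.get(url, 0), reverse=True)
        for position, url in enumerate(ordered, start=1):
            rank_sums[url] += position
    return {url: rank_sums[url] / len(metrics) for url in urls}


# Hypothetical metric values for three pages.
metrics = {
    "internal_pagerank": {"/page-a": 0.40, "/page-b": 0.35, "/page-c": 0.25},
    "backlinks": {"/page-a": 120, "/page-b": 30, "/page-c": 10},
    "crawl_hits": {"/page-a": 50, "/page-b": 40, "/page-c": 90},
}
scores = average_rank(metrics)
for url in sorted(scores, key=scores.get):
    print(url, round(scores[url], 2))
```

Here `/page-c` ranks first for crawl hits but last for the other two metrics, so `/page-a` ends up with the best average rank.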

You can then look for patterns in this list, chart the data to make linking imbalances easier to see, and even segment it by page category or directory to quickly identify problem areas.
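To segment by directory, one simple option is to group URLs by their first path segment and average their scores. This sketch assumes the input maps URL paths (not full URLs) to an average rank, so lower means stronger; the helper name and URLs are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def strength_by_directory(scores):
    """Average per-URL scores by first path segment, e.g. /blog/post -> /blog."""
    groups = defaultdict(list)
    for url, score in scores.items():
        segments = [part for part in url.split("/") if part]
        directory = "/" + segments[0] if segments else "/"
        groups[directory].append(score)
    return {directory: mean(values) for directory, values in groups.items()}


# Hypothetical average ranks (lower = stronger).
scores = {"/": 1.5, "/blog/a": 2.0, "/blog/b": 3.0, "/product/x": 1.0}
by_directory = strength_by_directory(scores)
# Weakest directories first.
for directory, value in sorted(by_directory.items(), key=lambda item: item[1], reverse=True):
    print(directory, value)
```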

Using log files to monitor internal linking changes

The final stage of this optimization process is to monitor any internal linking and PageRank changes with log files.

Many SEOs argue that PageRank is the strongest factor in determining Googlebot’s crawl rate. This is why it’s crucial to monitor your log file data regularly to see whether your internal linking changes have succeeded in improving crawl rates.
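To get crawl frequency per URL, you can count Googlebot requests in your access logs. This sketch assumes the Apache/Nginx "combined" log format and matches on the user-agent string only. Because that string can be spoofed, Google recommends confirming genuine Googlebot traffic with a reverse DNS lookup before relying on the numbers. The sample log lines are made up.

```python
import re
from collections import Counter

# Combined log format: IP, identity, user, [time], "request", status, size,
# "referer", "user agent".
LOG_PATTERN = re.compile(
    r'^\S+ \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"\S+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per path whose user agent claims to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [29/Jan/2019:10:00:00 +0000] "GET /robots.txt HTTP/1.1" 200 120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [29/Jan/2019:10:00:05 +0000] "GET /product HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [29/Jan/2019:11:30:00 +0000] "GET /product HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [29/Jan/2019:12:00:00 +0000] "GET /product HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]
print(googlebot_hits(sample_log).most_common())
```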

How can you use log files for page rank monitoring? @Kevin_Indig says crawl rate correlates highly with PageRank (it’s not the only factor, but it’s a strong one!)

— DeepCrawl (@DeepCrawl) January 29, 2019

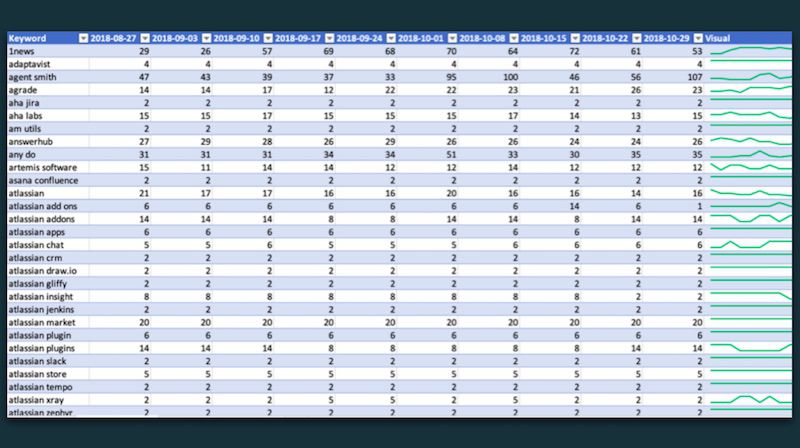

You can overlay the crawl frequency of particular pages with the keywords those pages target, as well as context such as any changes made to their content or internal linking. This is really useful for attributing changes in Googlebot’s crawling to specific SEO changes.
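A simple before/after comparison around the date of a change is one way to start that attribution. This sketch assumes you have already extracted the dates of Googlebot hits for one URL from your access logs; the helper name, the 14-day default window and the sample data are all hypothetical. A shift in the averages suggests, but does not prove, that the change caused it.

```python
from datetime import date, timedelta


def crawl_rate_change(hit_dates, change_day, window_days=14):
    """Average daily Googlebot hits in the window before vs. from change_day on."""
    before_start = change_day - timedelta(days=window_days)
    after_end = change_day + timedelta(days=window_days)
    before = sum(1 for day in hit_dates if before_start <= day < change_day)
    after = sum(1 for day in hit_dates if change_day <= day < after_end)
    return before / window_days, after / window_days


# Hypothetical hits for one URL: once a day before an internal linking change
# on 8 January, three times a day afterwards.
change = date(2019, 1, 8)
hits = [date(2019, 1, 1) + timedelta(days=i) for i in range(7)]
hits += [change + timedelta(days=i) for i in range(7) for _ in range(3)]
print(crawl_rate_change(hits, change, window_days=7))
```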

Kevin’s learnings

Here are some of the things Kevin learned from using these methods himself:

- The robots.txt file is the most crawled URL by far.

- Googlebot spends the most time on XML sitemap URLs.

- When 404 errors spiked, the overall crawl frequency of the site decreased dramatically. Errors clearly have an impact on site performance, so return a 410 (Gone) status code if those pages really are gone.
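To catch a spike like this early, you could track the share of Googlebot requests that return 404 each day. The input format (day, status code) taken from your access logs, the helper name and the 20% alert threshold below are assumptions for illustration.

```python
from collections import defaultdict


def daily_404_share(requests):
    """requests: iterable of (day, status_code) pairs for Googlebot hits."""
    totals = defaultdict(int)
    not_found = defaultdict(int)
    for day, status in requests:
        totals[day] += 1
        if status == 404:
            not_found[day] += 1
    return {day: not_found[day] / totals[day] for day in sorted(totals)}


# Hypothetical Googlebot requests.
requests = [
    ("2019-01-01", 200), ("2019-01-01", 200), ("2019-01-01", 301), ("2019-01-01", 200),
    ("2019-01-02", 404), ("2019-01-02", 404), ("2019-01-02", 200), ("2019-01-02", 404),
]
shares = daily_404_share(requests)
ALERT_THRESHOLD = 0.2  # hypothetical: flag days where over 20% of hits are 404s
spikes = [day for day, share in shares.items() if share > ALERT_THRESHOLD]
print(shares, spikes)
```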

.@Kevin_Indig suggests following these 5 steps to optimize your internal linking:

1. Crawl

2. Calculate PR + CR

3. Add backlinks

4. Optimize your site accordingly

5. Use log files to monitor the changes pic.twitter.com/qtUDQoFfEI

— DeepCrawl (@DeepCrawl) January 29, 2019

As Kevin mentioned, DeepCrawl is his tool of choice when performing internal linking analysis. Not only does DeepCrawl calculate internal PageRank, but it also supports manual uploads of backlinks and log file data, provides free Majestic backlink metrics, and also integrates with log file tools like Splunk and Logz.io.

Get the full picture of page strength all in one place by signing up for a Lumar account today. If you’re interested in learning more, then please get in touch with our team.

Find out Kevin’s answers in the Q&A

The audience submitted so many questions that Kevin wasn’t able to answer them all live during the webinar. Don’t worry if your question wasn’t answered, though: we sent the rest to Kevin, and we’ve published his answers in this Q&A post.

Join our next webinar on edge SEO with Dan Taylor

For our next webinar, we’re going to be joined by the winner of the TechSEO Boost Call to Research award, Dan Taylor from SALT.agency. Dan will be talking about the advances in edge SEO and explaining how he gets around technical barriers like platform limitations to implement SEO fixes. We’re very excited about this one, so make sure you register your place and join us!